Introduction

LLMOps is a methodology that has emerged from the convergence of AI and operations in a constantly evolving technology landscape. With the introduction of Generative AI Platforms, the frontiers of productivity and creativity have expanded rapidly. This blog explores LLMOps and examines how generative AI platforms are shaping the future of technology and creativity.

Imagine a world in which machines are able to think, act, and create on their own. At the forefront of this paradigm shift is LLMOps, an integration of AI with DevOps practices. It offers a methodical approach to incorporating AI models into the operational workflow, opening up new capabilities and efficiencies across industries.

Generative AI Platforms

The landscape of Generative AI platforms, especially those built on Large Language Models (LLMs), is evolving as generative AI is operationalized at scale. This process integrates powerful AI models into business operations and delivers tangible advantages across industries. The advent of foundation models has driven change in sectors such as entertainment, healthcare, finance, and marketing.

Potential of LLMs

LLMOps stands at the intersection of advanced AI technology and practical application. It is a set of strategic procedures and methods for managing the complexity of working with LLMs. This specialized domain focuses on ensuring that these models are not just theoretically proficient but also viable, efficient, and effective in real-world scenarios. LLMOps is about bringing structure and control to the otherwise chaotic and intricate world of Generative AI.

Leveraging Large Language Model (LLM) capabilities in platform development unlocks considerable potential. Here are five areas where LLMs add value in platform building:

1. Natural Language Understanding and Generation

- Contextual Understanding: LLMs excel in understanding the nuances of human language, enabling platforms to comprehend user queries or inputs with contextual relevance.

- Dynamic Content Creation:

2. Intelligent Decision Support

- Data Analysis and Insights: LLMs can analyze vast datasets, extracting meaningful insights and patterns, empowering platforms to provide valuable recommendations and support for decision-making processes.

- Personalized Recommendations: By interpreting user preferences, LLM-powered platforms offer personalized recommendations, enhancing user engagement and satisfaction.

3. Efficient Automation and Optimization

- Workflow Streamlining: LLM integration automates tasks within the platform, optimizing workflows, reducing manual efforts, and enhancing operational efficiency.

- Adaptive Learning: These models continually adapt and learn from new data, ensuring the platform evolves and improves over time, adapting to changing user behaviors and trends.

4. Tailored User Experiences

- Enhanced Interactions: LLM-driven interfaces provide more intuitive and human-like interactions, fostering improved user experiences and engagement.

- Content Customization: Platforms leverage LLMs to customize content delivery, catering precisely to individual user preferences, increasing user satisfaction.

5. Innovation and Creativity Catalyst

- Exploration of New Ideas: LLMs serve as a tool for exploration, encouraging innovation by providing a platform to experiment with new concepts, designs, or solutions.

- Creative Problem Solving: By generating novel and context-aware outputs, LLMs aid in creative problem-solving, contributing to out-of-the-box solutions within the platform's functionality.

By harnessing the capabilities of LLMs within platform development, businesses can improve user experiences, streamline operations, make informed decisions, and foster innovation.

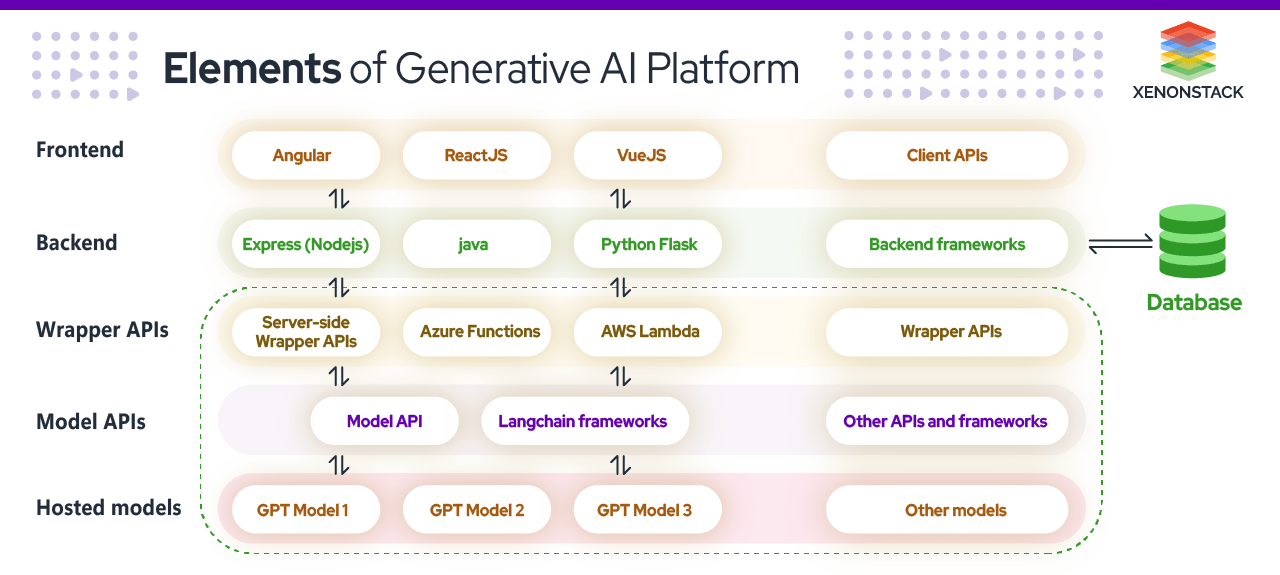

Elements of a Generative AI Platform

Generative AI Platforms encompass various essential elements that contribute to their functionality and versatility in creating diverse content, solutions, and experiences. These platforms leverage sophisticated AI models like Generative Pre-trained Transformers (GPT) and similar architectures to generate content, understand context, and perform various tasks.

Some key elements of a Generative AI Platform include:

| Components | Tech Stack |
|---|---|
| Data Collection | Web Scraping Frameworks (Scrapy, BeautifulSoup) |
| Deep Learning Frameworks | |
| Gen AI Models | |
| Training and Optimization | |
| Model Deployments | |
| Evaluation Metrics | |
| Reinforcement Learning | |
| User Interface | |
| Infrastructure | |

Developing the Gen AI Platform

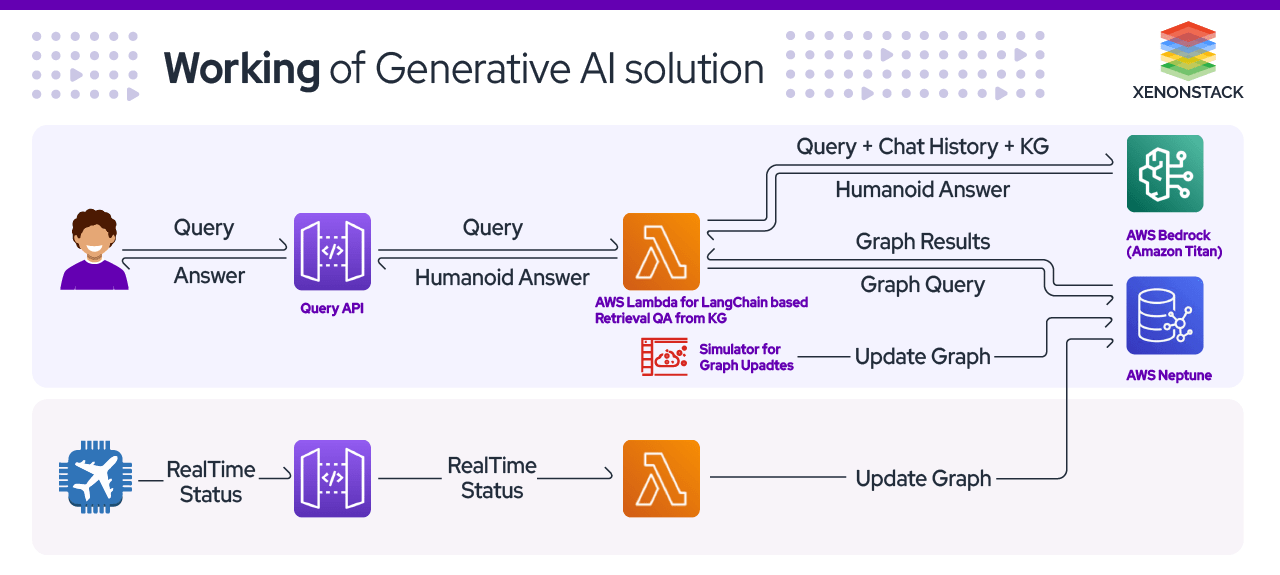

Developing a Generative AI Platform involves a series of steps and considerations to create a robust environment in which AI models generate content, solve problems, and address specific use cases. This section walks through the key steps of the development process using a solution we built: a chatbot that uses knowledge graphs and LLMs to give users real-time, accurate information about flights and related terminals.

- Define Objectives and Use Cases: Before starting development, define the specific goals and applications your Generative AI Platform will serve, and identify the use cases across industries or domains where its capabilities will be applied. The objective of our chatbot platform was to provide real-time flight updates, including details on departure, arrival, delays, gate changes, and related terminal information.

- Data Collection and Preparation: Gather diverse datasets relevant to your use cases and preprocess them rigorously: clean, label, and format the data so that your AI models are trained on quality input. For this use case, we generated synthetic data, then preprocessed and structured it so that it was clean, labeled, and formatted for effective knowledge representation. From this data we built a knowledge graph that maps relationships between flights, terminals, delays, and other relevant entities.

- Select and Train Generative Models: Choose suitable pre-trained Generative AI models (e.g., GPT) for your platform, and fine-tune them on the collected and preprocessed data for your specific tasks or domains. For our use case, we tuned the textual prompt given to our LLMs, using the knowledge graph so that the models generate contextually relevant queries and accurate results.

- Platform Architecture Design: Design a robust technical architecture covering the frontend, backend, and AI model integrations, and define how the AI models will interact within the platform's ecosystem. We designed the technical architecture of the chatbot platform, integrating the knowledge graph, LLMs, and backend systems, and defined how users interact with the chatbot to retrieve real-time flight updates conversationally and intuitively.

- Frontend and Backend Development, Integration and Testing: We developed a user-friendly chatbot interface that lets users query and receive real-time flight updates, and built a backend capable of processing user requests, querying the knowledge graph, and using LLMs to generate accurate responses. We then tested the platform rigorously for functionality, accuracy, and security.

- Deployment and Optimization: Deploy the chatbot platform to a suitable environment, considering factors such as scalability and reliability, and optimize its performance to ensure swift and accurate real-time flight updates. In our case, a data simulator updated the data in the graph database (Amazon Neptune) in real time, and the chatbot returned results based on the most recent updates in the database.

- Continuous Improvement and Ethical Compliance: Implement mechanisms for continual learning and improvement, regularly updating the knowledge graph and retraining LLMs with new data. Address ethical considerations by mitigating biases and ensuring user privacy and compliance with relevant regulations within the chatbot's functionalities.

- Documentation and Support: Provide comprehensive documentation for users, developers, and administrators, offering extensive support and training materials to facilitate seamless interaction with your Generative AI Platform.
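The data preparation step in the list above can be sketched in a few lines. This is a minimal illustration, not the implementation behind the chatbot: the record fields (`flight_no`, `terminal`, `gate`, `delay_min`), the node labels, and the relationship names are all assumptions, and a plain in-memory structure stands in for the graph database.

```python
from dataclasses import dataclass, field


@dataclass
class Graph:
    """Tiny in-memory property graph standing in for a real graph database."""
    nodes: dict = field(default_factory=dict)   # node id -> {"label": ..., **props}
    edges: list = field(default_factory=list)   # (source id, relation, target id)

    def add_node(self, node_id, label, **props):
        # Merge properties so repeated records update a node instead of duplicating it.
        self.nodes.setdefault(node_id, {"label": label}).update(props)

    def add_edge(self, source, relation, target):
        edge = (source, relation, target)
        if edge not in self.edges:
            self.edges.append(edge)


def clean(record):
    """Normalize one raw record; return None if required fields are missing."""
    flight_no = str(record.get("flight_no", "")).strip().upper()
    terminal = str(record.get("terminal", "")).strip().upper()
    if not flight_no or not terminal:
        return None
    return {
        "flight_no": flight_no,
        "terminal": terminal,
        "gate": str(record.get("gate", "")).strip().upper() or None,
        "delay_min": max(int(record.get("delay_min") or 0), 0),
    }


def build_graph(records):
    """Map cleaned records to Flight, Terminal, and Gate nodes plus their relations."""
    graph = Graph()
    for raw in records:
        rec = clean(raw)
        if rec is None:
            continue  # skip unusable rows instead of guessing values
        flight_id = f"flight:{rec['flight_no']}"
        terminal_id = f"terminal:{rec['terminal']}"
        graph.add_node(flight_id, "Flight", flight_no=rec["flight_no"],
                       delay_min=rec["delay_min"])
        graph.add_node(terminal_id, "Terminal", name=rec["terminal"])
        graph.add_edge(flight_id, "DEPARTS_FROM", terminal_id)
        if rec["gate"]:
            gate_id = f"gate:{rec['terminal']}-{rec['gate']}"
            graph.add_node(gate_id, "Gate", name=rec["gate"])
            graph.add_edge(flight_id, "ASSIGNED_TO", gate_id)
            graph.add_edge(gate_id, "LOCATED_IN", terminal_id)
    return graph


# Hypothetical synthetic records, including messy and incomplete rows.
SYNTHETIC_RECORDS = [
    {"flight_no": " ai101 ", "terminal": "t3", "gate": "b12", "delay_min": 25},
    {"flight_no": "BA256", "terminal": "T3", "gate": "", "delay_min": None},
    {"flight_no": "", "terminal": "T1"},  # dropped: no flight number
]

graph = build_graph(SYNTHETIC_RECORDS)
```

Keeping the cleaning step separate from graph construction means malformed rows are rejected before they can create dangling nodes, which matters once an LLM starts generating queries against the graph.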

Use this guide as a roadmap for developing your own GenAI-based chatbot platform that gives users accurate, real-time flight information using knowledge graphs and LLMs. This structured approach integrates AI capabilities while delivering a user-centric and efficient solution for flight-related updates.
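The data simulator mentioned in the deployment step could look roughly like the sketch below. It is only an illustration under stated assumptions: the status values, the update fields, and the helper names (`simulate_update`, `apply_update`) are hypothetical, and a dictionary stands in for the graph database that the real simulator writes to.

```python
import random

STATUSES = ["ON_TIME", "DELAYED", "BOARDING", "DEPARTED"]  # assumed status values


def simulate_update(flights, rng):
    """Pick one flight and return a synthetic status update for it."""
    flight_no = rng.choice(sorted(flights))
    status = rng.choice(STATUSES)
    # Only delayed flights carry a delay, in 5-minute steps.
    delay = rng.randrange(5, 120, 5) if status == "DELAYED" else 0
    return {"flight_no": flight_no, "status": status, "delay_min": delay}


def apply_update(flights, update):
    """Write the update into the store; a dict stands in for the graph database."""
    flights[update["flight_no"]].update(
        status=update["status"], delay_min=update["delay_min"]
    )


flights = {
    "AI101": {"status": "ON_TIME", "delay_min": 0},
    "BA256": {"status": "ON_TIME", "delay_min": 0},
}
rng = random.Random(42)  # seeded so repeated runs produce the same sequence
for _ in range(10):
    apply_update(flights, simulate_update(flights, rng))
```

Because the chatbot queries the store at request time, any update applied by the simulator is reflected in the next answer without redeploying or retraining anything.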

Key Components of the solution

The key components of the solution are:

- Amazon Bedrock LLM: We use Amazon Bedrock models to create a conversational AI interface that interacts with users, understands their queries about flight status, timing, pricing, and accommodation, and provides real-time, contextually relevant responses. A foundation model can be selected based on pricing and the use case, and its API used for these tasks.

- Amazon Neptune Graph Database: Amazon Neptune is used as the underlying data store to efficiently manage and retrieve structured and unstructured travel data. It ensures data integrity and supports complex queries for delivering accurate, real-time information.

- AWS Lambda: A serverless compute service used here to execute the API logic. Lambda is well known for its robustness, scalability, extensibility, and cost-effectiveness.
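One way to picture how the foundation model and the graph database fit together is the prompt that asks the model to translate a question into a graph query. The sketch below is an assumption-laden illustration: the schema, the prompt wording, and the helper names (`build_cypher_prompt`, `extract_query`) are hypothetical, and no Amazon Bedrock or Amazon Neptune API is called.

```python
import re

# Hypothetical schema description; the real graph's labels and properties may differ.
GRAPH_SCHEMA = {
    "nodes": {
        "Flight": ["flight_no", "status", "delay_min"],
        "Terminal": ["name"],
    },
    "relationships": ["(:Flight)-[:DEPARTS_FROM]->(:Terminal)"],
}

PROMPT_TEMPLATE = """You translate questions about flights into openCypher.
Use only the labels, properties, and relationships listed in the schema.
Return a single read-only query and nothing else.

Schema:
{schema}

Question: {question}
Query:"""


def render_schema(schema):
    """Flatten the schema dict into plain lines the model can read."""
    lines = [f"Node {label}: {', '.join(props)}"
             for label, props in schema["nodes"].items()]
    lines += [f"Relationship {rel}" for rel in schema["relationships"]]
    return "\n".join(lines)


def build_cypher_prompt(question, schema=GRAPH_SCHEMA):
    """Combine the schema and the user's question into one prompt string."""
    return PROMPT_TEMPLATE.format(schema=render_schema(schema),
                                  question=question.strip())


# Conservative keyword check: may also reject harmless queries that mention these words.
WRITE_KEYWORDS = re.compile(r"\b(CREATE|MERGE|DELETE|SET|REMOVE|DROP)\b", re.IGNORECASE)


def extract_query(model_output):
    """Validate the model's reply before it is sent to the graph database."""
    text = model_output.strip()
    if not text.upper().startswith("MATCH"):
        raise ValueError("expected a MATCH query")
    if WRITE_KEYWORDS.search(text):
        raise ValueError("write operations are not allowed")
    return text


prompt = build_cypher_prompt("  Which terminal does AI101 depart from? ")
```

Putting the schema in the prompt constrains the model to labels that exist in the graph, and validating the reply before execution keeps a malformed or destructive query from reaching the database.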

How Do Generative AI Platforms Function?

In this Gen AI based platform, when a user submits a query, such as a request for flight details or current flight status, the query arrives through an API Gateway trigger and is passed to an AWS Lambda function. The Lambda function sends the user's query to the Amazon Bedrock model, which converts it into a Cypher query (a graph query language, comparable to SQL for relational databases). That query is then run against the graph database (Amazon Neptune here). The query results are passed back to the LLM, which generates a human-like response that is returned to the user.
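The request flow described above can be sketched as a Lambda-style handler. To keep the example self-contained, the model and the graph database are passed in as plain callables (`generate_text`, `run_query`); these are stand-ins assumed for illustration, and in a deployment they would wrap the Amazon Bedrock and Amazon Neptune clients. The event shape, prompt wording, and stub responses are likewise illustrative.

```python
import json


def answer_question(question, generate_text, run_query):
    """Question -> graph query -> query results -> natural-language answer."""
    # Step 1: ask the model to translate the question into a graph query.
    query = generate_text(
        "Translate this question into an openCypher query. "
        f"Return only the query.\nQuestion: {question}"
    ).strip()

    # Step 2: run the generated query against the graph database.
    rows = run_query(query)

    # Step 3: ask the model to phrase the raw rows as a reply for the user.
    answer = generate_text(
        "Answer the question using only these results.\n"
        f"Question: {question}\nResults: {json.dumps(rows)}"
    ).strip()
    return {"query": query, "rows": rows, "answer": answer}


def make_handler(generate_text, run_query):
    """Build a Lambda-style handler for an API Gateway proxy event."""
    def handler(event, context=None):
        body = json.loads(event.get("body") or "{}")
        question = (body.get("question") or "").strip()
        if not question:
            return {"statusCode": 400,
                    "body": json.dumps({"error": "question is required"})}
        result = answer_question(question, generate_text, run_query)
        return {"statusCode": 200,
                "body": json.dumps({"answer": result["answer"]})}
    return handler


# --- Stubs so the sketch runs without AWS access (purely illustrative) ---
def fake_generate_text(prompt):
    if prompt.startswith("Translate"):
        return "MATCH (f:Flight {flight_no: 'AI101'}) RETURN f.status AS status"
    return "Flight AI101 is currently delayed."


def fake_run_query(query):
    return [{"status": "DELAYED"}]


handler = make_handler(fake_generate_text, fake_run_query)
response = handler({"body": json.dumps({"question": "What is the status of AI101?"})})
```

Injecting the two callables keeps the orchestration logic testable on its own: the same `answer_question` function can run against stubs locally and against real clients once deployed.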

Business values of Generative AI Platforms

Generative AI Platforms offer a multitude of business values across various industries due to their capabilities in content generation, problem-solving, and personalized interactions. Here are several key business values provided by Generative AI Platforms:

- Operational Efficiency and Cost Savings: Generative AI Platforms automate repetitive tasks, optimizing workflows and reducing the need for manual intervention. By generating content and responses or performing tasks, these platforms save time and human resources, which reduces operational costs. For instance, in content creation or customer support, automation reduces labor-intensive effort, resulting in significant cost savings.

- Enhanced User Experience and Engagement: The personalization capabilities of Generative AI Platforms enable tailored recommendations, content, or interactions based on user preferences. By delivering personalized experiences, these platforms contribute to user satisfaction, leading to increased retention rates and enhanced engagement. For example, personalized product recommendations in e-commerce platforms enhance user experiences, leading to higher conversion rates.

- Innovation and Competitive Edge: Generative AI Platforms facilitate innovation by generating novel solutions, designs, or content, giving businesses a competitive advantage. For instance, in advertising or design, AI-generated creative concepts can differentiate brands and products from competitors.

- Data-Driven Decision Making: Generative AI Platforms analyze vast datasets to extract valuable insights, aiding in informed decision-making and strategic planning. By processing and interpreting data, these platforms provide businesses with actionable intelligence, enabling better predictions, optimizations, and strategies across domains like marketing, finance, or product development.

- Scalability and Adaptability: Generative AI Platforms offer scalable solutions adaptable to changing business needs. These platforms efficiently handle increased workloads, catering to a growing user base or evolving market demands without compromising quality. For instance, in customer service or content generation, these platforms scale seamlessly, meeting increased demand without significant resource allocation.

Use cases of the Generative AI Platforms

1. Content Generation and Personalization

Generative AI Platforms excel in creating diverse content types, such as articles, product descriptions, or social media posts. An LLMOps practice can use these capabilities to automate content creation while keeping outputs personalized and contextually relevant. This use case streamlines content workflows, saving time and resources for content creators and marketers. For example, AlphaCode, a code-generation system, applies generative AI to assist with programming tasks.

2. AI-Powered Customer Support and Chatbots

Integrating Generative AI Platforms into LLMOps enables the creation of AI-driven chatbots for customer support. These chatbots offer immediate and accurate responses to user queries, enhancing customer service experiences. Through natural language understanding, these bots assist users in troubleshooting issues or providing information 24/7.

3. Personalized Recommendations and Marketing

Generative AI Platforms analyze user data to deliver personalized recommendations for products, services, or content. LLMOps can utilize this capability to tailor marketing strategies, suggesting relevant products or content to users based on their preferences and behaviors. This use case significantly improves user engagement and conversion rates.

4. Predictive Analytics and Forecasting

Generative AI Platforms operated within an LLMOps practice can analyze extensive datasets to predict trends, behaviors, or market insights. This use case assists businesses in making informed decisions, forecasting market demands, and strategizing resource allocation based on data-driven insights.

5. Workflow Automation and Process Optimization

Integrating Generative AI Platforms into LLMOps allows for automating repetitive tasks and optimizing internal workflows. This use case enhances operational efficiency by streamlining processes, reducing manual efforts, and ensuring standardized outputs across various operations.

These use cases highlight the practical implementations of Generative AI Platforms in LLMOps, showcasing their versatility and their ability to improve operational workflows, customer interactions, marketing strategies, data analytics, and overall business efficiency.

Conclusion

LLMOps and Generative AI Platforms play a pivotal role in shaping the future of technology, with transformative potential and wide-ranging possibilities across industries.

This blog covered how LLMOps and Generative AI Platforms fit together: their functionality, development process, business values, and real-world applications across diverse sectors.
