How do generative AI models work?

Generative AI models learn patterns from large datasets and use what they have learned to create new content. Building one involves the following stages.

1. Define the Objective

When training a generative AI model, the first step is to define the objective clearly. The objective should specify the kind of content the model is expected to generate, whether images, text, or music. With a clear objective, the developer can tailor the training process so that the model produces the desired output.

2. Collect and Preprocess the Data

To create a quality generative AI model, collect a diverse dataset that aligns with the objective. Preprocess and clean the data to remove noise and errors before feeding it into the model.
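As a rough illustration of the cleaning step, the sketch below normalizes, filters, and de-duplicates a handful of text samples using only the Python standard library. The function name `clean_text_dataset`, the `min_chars` threshold, and the sample strings are assumptions made for this example; a real pipeline would be shaped by the dataset and the objective.

```python
import re
import unicodedata


def clean_text_dataset(samples, min_chars=5):
    """Normalize, filter, and de-duplicate raw text samples (illustrative only)."""
    seen = set()
    cleaned = []
    for raw in samples:
        # Normalize Unicode so visually identical strings compare equal.
        text = unicodedata.normalize("NFKC", raw)
        # Strip leftover HTML tags, a common source of noise in scraped text.
        text = re.sub(r"<[^>]+>", " ", text)
        # Collapse runs of whitespace into single spaces.
        text = re.sub(r"\s+", " ", text).strip()
        # Drop samples that are too short to be useful (assumed threshold).
        if len(text) < min_chars:
            continue
        # Drop case-insensitive duplicates, keeping the first occurrence.
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw_samples = [
    "  The quick   brown fox. ",
    "<p>The quick brown fox.</p>",
    "ok",
    "A second,\tdistinct sentence.",
]
print(clean_text_dataset(raw_samples))
```

Here the second sample is dropped as a duplicate once its tags are removed, and the third is dropped for being too short.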

3. Choose the Right Model Architecture

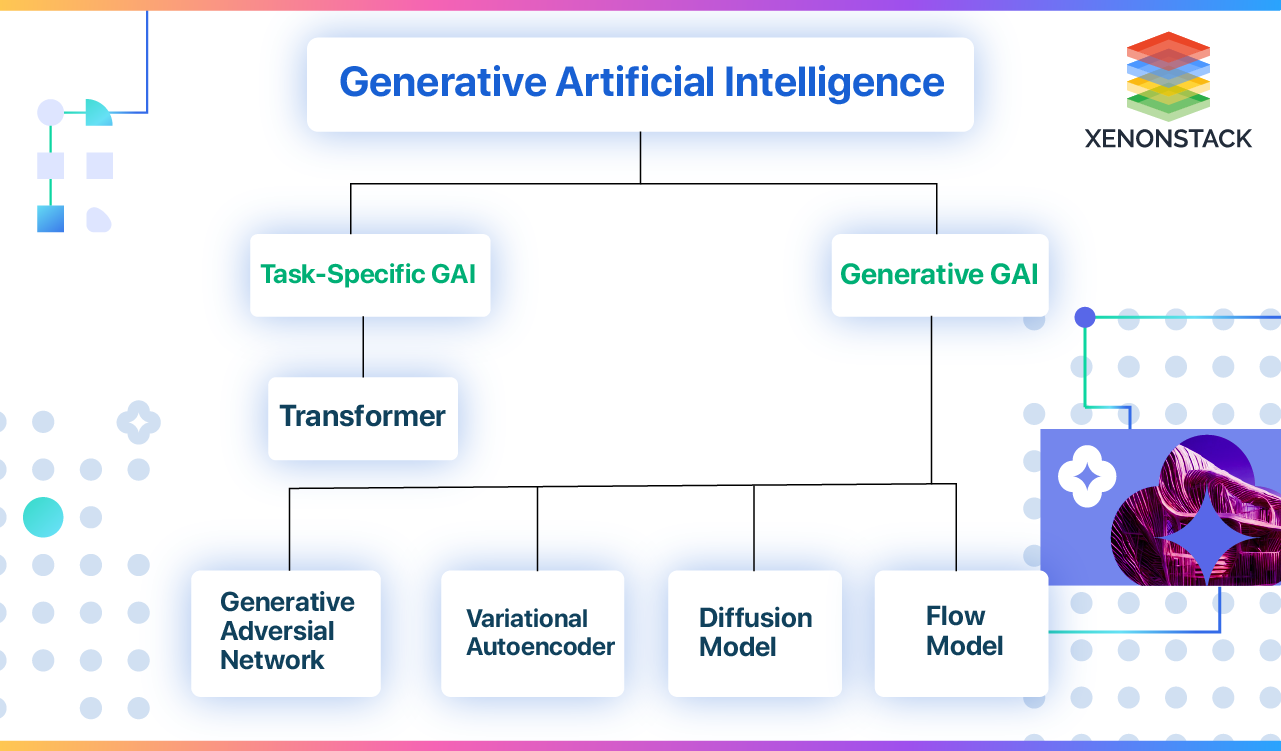

Choosing the right model architecture is a crucial step in ensuring the success of your generative AI project. Various architectures exist, such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformers. Each architecture has its own advantages and limitations, so it is essential to evaluate the objective and dataset carefully before selecting one.

4. Implement the Model

Implementing the chosen architecture means writing code that creates the neural network, defines its layers, and establishes the connections between them. Frameworks and libraries like TensorFlow and PyTorch offer prebuilt components and resources that simplify this process.

5. Train the Model

Training a generative AI model involves repeatedly presenting the training data to the model and refining its parameters to reduce the difference between the generated output and the intended result. This process requires considerable computational resources and time, depending on the model's complexity and the dataset's size. Monitoring the model's progress and adjusting training parameters such as learning rate and batch size is crucial to achieving the best results.
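The loop described above can be sketched on a deliberately tiny problem. The example below is not a generative model: it fits a one-variable linear function with mini-batch gradient descent, only to show how parameters are nudged to shrink the gap between output and target, and where the learning rate and batch size enter. The function names, the toy dataset, and the default hyperparameter values are all assumptions chosen for illustration.

```python
import random


def train_linear_model(data, learning_rate=0.05, batch_size=4, epochs=200, seed=0):
    """Fit y = w * x + b with mini-batch gradient descent on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)  # present the data in a new order each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of the mean squared error over this batch.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            # Step against the gradient to shrink the output/target gap.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


def mean_squared_error(data, w, b):
    """Average squared difference between predictions and targets."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Toy targets generated from y = 2x + 1 (an assumed "intended result").
dataset = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = train_linear_model(dataset)
print(f"w={w:.3f}, b={b:.3f}, mse={mean_squared_error(dataset, w, b):.6f}")
```

Raising the learning rate speeds up each step but risks overshooting, while a larger batch size gives smoother gradient estimates at the cost of fewer updates per epoch.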

6. Evaluate and Optimize

After training a model, it is crucial to assess its performance. This can be done by using appropriate metrics to measure the quality of the generated content and comparing it to the desired output. If the results are unsatisfactory, adjusting the model's architecture, training parameters, or dataset could be necessary to optimize its performance.

7. Fine-tune and Iterate

Developing a generative AI model is a process that requires continuous iteration and improvement. Once the initial results are evaluated, areas for improvement can be identified. By incorporating feedback from users, introducing fresh training data, and refining the training process, it is possible to enhance the model and optimize the results. Therefore, consistent improvements are crucial in developing a high-quality generative AI model.

Best Strategies for Training Generative AI Models

1. Choose the right model architecture

When it comes to data generation, the choice of model can significantly affect the quality of the resulting data. The most commonly used models are Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and autoregressive models. Each has advantages and disadvantages, depending on the complexity of the data and the quality required.

VAEs are particularly useful for learning latent representations and generating smooth data. However, their outputs may be blurry. GANs, on the other hand, excel at producing sharp and realistic data, but they can be harder to train and are prone to mode collapse. Autoregressive models generate high-quality data but may be slow and memory-intensive.

When selecting a model, compare the candidates' performance, scalability, and efficiency against the project's specific requirements and constraints. Weighing these factors carefully allows for a well-informed decision and better results in data generation.

2. Use transfer learning and pre-trained models

One practical approach for generative tasks is to use transfer learning and pre-trained models. Transfer learning means applying knowledge gained in one domain or task to another. Pre-trained models have already been trained on large, diverse datasets such as ImageNet, Wikipedia, and YouTube. Starting from such a model can substantially cut the time and resources required for training, and the model can then be adapted to specific data and tasks. For example, developers may start from a pre-trained VAE or GAN for images, or from a language model such as GPT-3 or BERT for text, and fine-tune it on their own dataset or domain to achieve better results.

3. Use data augmentation and regularization techniques

The quality of generative tasks can be improved through data augmentation and regularization techniques. Data augmentation creates more diverse data by applying transformations such as cropping, flipping, rotating, or adding noise to the existing dataset. Regularization, by contrast, imposes constraints or penalties on the model to prevent overfitting and improve generalization. Together, these methods expand the scope and diversity of the training data, reduce the risk of memorization or replication, and make the generative model more robust and varied. For example, random cropping or color jittering can be used as augmentation for image generation, while regularization techniques such as dropout, weight decay, or spectral normalization can be used in GAN training.
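The augmentation transformations mentioned above can be sketched without any imaging library by treating an image as a list of rows of pixel values in [0, 1]. This representation, the helper names, and the crop size and noise scale are assumptions for illustration; the sketch covers augmentation only, not regularization.

```python
import random


def horizontal_flip(image):
    """Mirror each row of a 2-D image stored as a list of lists."""
    return [row[::-1] for row in image]


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def add_noise(image, scale, rng):
    """Add Gaussian noise and clamp pixel values to [0, 1]."""
    return [
        [min(1.0, max(0.0, px + rng.gauss(0.0, scale))) for px in row]
        for row in image
    ]


def augment(image, rng, crop_size=3, noise_scale=0.05):
    """Apply a random flip, a random crop, then noise."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    image = random_crop(image, crop_size, crop_size, rng)
    return add_noise(image, noise_scale, rng)


rng = random.Random(42)
# A 4x4 gradient image with pixel values between 0 and 1.
image = [[(r * 4 + c) / 15 for c in range(4)] for r in range(4)]
variants = [augment(image, rng) for _ in range(3)]
print(len(variants), "augmented variants of size",
      len(variants[0]), "x", len(variants[0][0]))
```

Each call to `augment` yields a slightly different view of the same source image, which is how augmentation widens the effective training set.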

4. Use distributed and parallel computing

Another helpful strategy is to use distributed and parallel computing. This involves dividing the data and the model among devices, such as GPUs, CPUs, or TPUs, and coordinating their work. Distributed and parallel computing can accelerate training and make it feasible to handle large, complex data and models. Spreading the work can also reduce the memory load on any single device and allows the generative model to scale up. Techniques used to train generative models in this way include data parallelism, model parallelism, pipeline parallelism, and federated learning.
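To make data parallelism concrete, the sketch below simulates it in a single process: a batch is split into equal shards, each simulated worker computes a gradient on its shard, and the gradients are averaged. No real devices or communication are involved, and the function names, the toy linear model, and the assumption that the batch divides evenly among workers are all choices made for this illustration.

```python
def mse_gradient(w, b, batch):
    """Gradient of mean squared error for y = w * x + b over one batch."""
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def split_into_shards(batch, num_workers):
    """Give each worker an equal, contiguous slice of the batch.

    Assumes len(batch) is divisible by num_workers.
    """
    shard_size = len(batch) // num_workers
    return [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]


def data_parallel_gradient(w, b, batch, num_workers):
    """Each simulated worker computes a local gradient; the results are averaged."""
    shards = split_into_shards(batch, num_workers)
    local_grads = [mse_gradient(w, b, shard) for shard in shards]
    grad_w = sum(g[0] for g in local_grads) / num_workers
    grad_b = sum(g[1] for g in local_grads) / num_workers
    return grad_w, grad_b


batch = [(float(x), 3.0 * x - 2.0) for x in range(8)]
full = mse_gradient(0.5, 0.0, batch)
parallel = data_parallel_gradient(0.5, 0.0, batch, num_workers=4)
print(full, parallel)
```

With equal-sized shards, the averaged gradient matches the full-batch gradient up to floating-point rounding, which is why data parallelism can speed up training without changing what is being optimized.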

5. Use efficient and adaptive algorithms

Efficient and adaptive algorithms can quickly optimize both the parameters of a generative model and its hyperparameters, such as the learning rate, batch size, and number of epochs. They improve the model's performance and convergence and reduce time spent on trial and error. Optimizers such as SGD, Adam, and AdaGrad update the model's parameters during training, while Bayesian optimization, grid search, and random search are suited to hyperparameter tuning. Using these techniques, models can be tuned for different data and tasks while coping with non-convex and dynamic optimization problems.
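Random search, one of the tuning methods named above, can be sketched in a few lines. The objective below is a stand-in: it runs plain gradient descent on a one-parameter quadratic and reports the final loss, so that the search has something cheap to evaluate. The function names, the search ranges, and the number of trials are assumptions for illustration, not recommended settings.

```python
import random


def final_loss(learning_rate, epochs):
    """Run gradient descent on f(w) = (w - 3)^2 and return the final loss."""
    w = 0.0
    for _ in range(epochs):
        w -= learning_rate * 2 * (w - 3)
    return (w - 3) ** 2


def random_search(num_trials, seed=0):
    """Sample hyperparameters at random and keep the best-scoring configuration."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(num_trials):
        config = {
            # Sample the learning rate on a log scale between 1e-4 and 1.
            "learning_rate": 10 ** rng.uniform(-4, 0),
            "epochs": rng.choice([10, 50, 100]),
        }
        loss = final_loss(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


best_config, best_loss = random_search(num_trials=20)
print(best_config, best_loss)
```

Grid search would replace the random sampling with a fixed set of combinations, and Bayesian optimization would use earlier trial results to decide which configuration to try next.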

Evaluation & Monitoring Metrics for Generative AI

Language models such as OpenAI GPT-4 and Llama 2 can cause harmful outcomes if not designed carefully. The evaluation stage helps identify and measure potential harms by establishing clear metrics and completing iterative testing. Mitigation steps, such as prompt engineering and content filters, can then be taken. AI-assisted metrics can be helpful in scenarios without ground truth data, helping to measure the quality and safety of the answer. Below are metrics that help to evaluate the results generated by generative models:

- Groundedness

The groundedness metric assesses how well an AI model's generated answers align with the user-defined context. It checks that claims made in an AI-generated answer are substantiated by the source context, making it essential for applications where factual correctness and contextual accuracy are critical. It takes the question, context, and generated answer as input and returns an integer score from 1 to 5, where 1 is bad and 5 is good.

- Relevance

The relevance metric is crucial for evaluating an AI system's ability to generate appropriate responses. It measures how well the model's responses relate to the given questions. A high relevance score signifies the AI system's comprehension of the input and its ability to generate coherent and suitable outputs. Conversely, low relevance scores indicate that the generated responses may deviate from the topic, lack context, or be inadequate.

- Coherence

Coherence is a metric that measures the ability of a language model to generate output that flows smoothly, reads naturally, and resembles human-like language. It assesses the readability and user-friendliness of the model's generated responses in real-world applications. The input required to calculate this metric is a question and its corresponding generated answer.

- Fluency

The Fluency score gauges how effectively an AI-generated text conforms to proper grammar, syntax, and the appropriate use of vocabulary. It is an integer score ranging from 1 to 5, with one indicating poor and five indicating good. This metric helps evaluate the linguistic correctness of the AI-generated response. It requires the question and the generated answer as input.

- Similarity

The Similarity metric rates the similarity between a ground truth sentence and the AI model's generated response on a scale of 1 to 5. It assesses the model's performance in text generation tasks by creating sentence-level embeddings. This metric helps compare the generated text with the desired content. To use the GPT-Similarity metric, input the question, ground truth answer, and generated answer.
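The idea behind an embedding-based similarity score can be sketched with a toy stand-in. The example below is not the GPT-Similarity metric: it uses word counts in place of learned sentence embeddings, compares them with cosine similarity, and maps the result onto a 1 to 5 scale with a linear mapping that is purely hypothetical. All function names are assumptions for illustration.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Toy stand-in for a sentence embedding: lowercase word counts."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_score(ground_truth, generated):
    """Map cosine similarity in [0, 1] onto an integer 1-5 scale (hypothetical mapping)."""
    cosine = cosine_similarity(bag_of_words(ground_truth), bag_of_words(generated))
    return 1 + round(cosine * 4)


truth = "Paris is the capital of France"
print(similarity_score(truth, "Paris is the capital of France"))
print(similarity_score(truth, "The capital of France is Paris"))
print(similarity_score(truth, "Bananas grow on trees"))
```

Because word counts ignore order and meaning, this sketch rewards shared vocabulary only; learned sentence embeddings are used in practice precisely because they can recognize paraphrases that share few words.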

Conclusion of Training and Assessing Generative AI and LLM Models

Enterprises must consider the options when incorporating and deploying foundation models for their use cases. Each use case has specific requirements, and several decision points must be considered while deciding on the deployment options. These decision points include cost, effort, data privacy, intellectual property, and security. Based on these factors, an enterprise can use one or more deployment options.

Foundation models will play a vital role in accelerating the adoption of AI in businesses. They will significantly reduce the need for labeling, making it easier for businesses to experiment with AI, build efficient AI-driven automation and applications, and deploy AI in a broader range of mission-critical situations.
