How to Build a Knowledge Graph for LLMs

The convergence of Large Language Models (LLMs) and Knowledge Graphs is changing how enterprises unlock value from their data. LLMs are powerful at understanding and generating human-like language, but they often struggle with context, accuracy, and explainability. Knowledge Graphs fill this gap by providing structured, interconnected facts that make outputs more reliable and trustworthy.

When combined, LLMs and Knowledge Graphs enable Agentic AI — intelligent systems that don’t just respond, but also reason, orchestrate, and act with context.

At XenonStack, we see this integration as the foundation for building autonomous enterprise operations powered by Akira AI. Agentic AI merges the natural language fluency of LLMs with the factual precision of Knowledge Graphs to drive workflows, automate decision-making, and deliver intelligent action across domains. From customer support to cybersecurity operations and data governance, unifying these technologies transforms static responses into explainable, actionable intelligence.

This synergy delivers trust, scalability, and real-time adaptability — all essential as enterprises scale AI. With Agentic AI, businesses gain systems that continuously learn, self-correct, and deliver measurable outcomes. This blog explores how building Knowledge Graphs for LLMs accelerates enterprise transformation, strengthens decision intelligence, and prepares organizations for autonomous operations.

The Evolution from Generative AI to Agentic AI

The rise of LLMs marked a major milestone in AI adoption. Their ability to answer questions, summarize documents, and generate fluent responses made them ideal for conversational applications. But as enterprises deployed them, limitations became clear.

Generative AI systems often produced plausible but inaccurate answers, creating risks in industries where precision and compliance matter most. For example, a financial advisor chatbot powered only by an LLM might generate convincing but non-compliant advice. In healthcare, a misinterpreted record could lead to dangerous recommendations.

These challenges revealed a critical truth: LLMs excel at language fluency but fall short on reasoning, grounding, and explainability.

This is why Agentic AI is emerging as the next stage of enterprise AI. Unlike generative models that stop at producing responses, Agentic AI systems are designed to reason, orchestrate, and act in context. By combining LLMs with Knowledge Graphs, Agentic AI delivers both language intelligence and fact-grounded decisions.

Solutions like Akira AI by XenonStack are driving this shift. Instead of isolated outputs, enterprises can deploy intelligent agents capable of workflow automation, contextual reasoning, and continuous learning — unlocking measurable value across operations.

Why LLMs Alone Are Not Enough

Large Language Models (LLMs) have advanced natural language tasks, but their limits become clear in enterprise use cases. On their own, they cannot provide the reliability, context, or explainability that businesses demand.

Key limitations include:

- Hallucination Risks – LLMs can generate answers that sound convincing but are factually wrong, leading to poor decisions.
- Static Knowledge – Models are trained on historical data and require retraining to reflect new events or updates.
- Limited Contextual Awareness – They cannot natively connect to enterprise databases, policies, or compliance frameworks.
- Black-Box Reasoning – Their outputs lack transparency, making it difficult to trace or justify decisions.

For example, in cybersecurity, an LLM might detect unusual traffic but fail to link it to a wider attack campaign across multiple vectors. In supply chain management, it may flag delays but overlook connected geopolitical risks.

This is where Knowledge Graphs become essential. They provide structured, interconnected insights that ground LLM outputs, enabling Agentic AI systems that reason, act, and orchestrate with trust and context.

The Role of Knowledge Graphs in Agentic AI

A Knowledge Graph organizes enterprise data into entities and relationships, turning isolated facts into a semantic network where information is both interconnected and contextual. This structure provides reasoning and precision that LLMs cannot achieve on their own.
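To make the entity-and-relationship idea concrete, here is a minimal sketch of a knowledge graph held as an in-memory set of (subject, predicate, object) triples with a one-hop lookup. The class, method, and entity names (`KnowledgeGraph`, `neighbors`, `AcmeParts`, and so on) are hypothetical illustrations, not part of any product; a production system would typically use a dedicated graph database instead.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    """One fact: (subject) -[predicate]-> (object)."""
    subject: str
    predicate: str
    obj: str


class KnowledgeGraph:
    """A tiny in-memory triple store with an index of outgoing edges."""

    def __init__(self) -> None:
        self.triples: set[Triple] = set()
        self._out: dict[str, set[Triple]] = defaultdict(set)

    def add(self, subject: str, predicate: str, obj: str) -> None:
        triple = Triple(subject, predicate, obj)
        self.triples.add(triple)
        self._out[subject].add(triple)

    def neighbors(self, subject: str, predicate: str | None = None) -> list[str]:
        """Objects reachable from `subject` in one hop, optionally filtered by predicate."""
        return sorted(
            t.obj
            for t in self._out.get(subject, ())  # .get avoids creating empty entries
            if predicate is None or t.predicate == predicate
        )


# Hypothetical enterprise facts linking a supplier, a part, and a product.
kg = KnowledgeGraph()
kg.add("AcmeParts", "supplies", "Widget-42")
kg.add("AcmeParts", "located_in", "Region-A")
kg.add("Widget-42", "used_in", "Product-X")

print(kg.neighbors("AcmeParts"))              # ['Region-A', 'Widget-42']
print(kg.neighbors("AcmeParts", "supplies"))  # ['Widget-42']
```

Because every fact is an explicit, typed edge, questions such as "which products depend on this supplier?" become graph traversals rather than guesses from model weights.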

Key strengths of Knowledge Graphs include:

- Contextual Precision – Unifies multiple data sources into a single, connected knowledge fabric.
- Explainability – Creates a transparent chain of reasoning behind AI-driven decisions.
- Dynamic Adaptability – Updates knowledge in real time, without waiting for a model to be retrained.
- Cross-Domain Integration – Links structured and unstructured data across diverse enterprise systems.

When LLMs are grounded with Knowledge Graphs, enterprises gain trustworthy, explainable AI. This pairing forms the backbone of Agentic AI orchestration, ensuring that every response or action by an autonomous agent is rooted in reliable, enterprise-specific knowledge.

How LLMs and Knowledge Graphs Work Together

LLMs vs Knowledge Graphs → Why Together?

LLM Strengths

- Natural language fluency
- Summarization & content generation
- Conversational interfaces

LLM Limitations

- 🚨 Hallucination Risks: Plausible but false outputs
- 📉 Static Knowledge: Needs retraining for updates
- 🔒 Limited Context: No direct link to enterprise data/policies
- ❓ Black-Box Reasoning: Outputs lack traceability

Knowledge Graph Strengths

- Structured, interconnected insights
- Real-time adaptability
- Explainability & transparency
- Trustworthy, governed context

Together = Agentic AI

- LLMs provide language fluency
- Knowledge Graphs ensure grounding, reasoning, and trust
- Unified → context-aware, explainable, and actionable AI systems

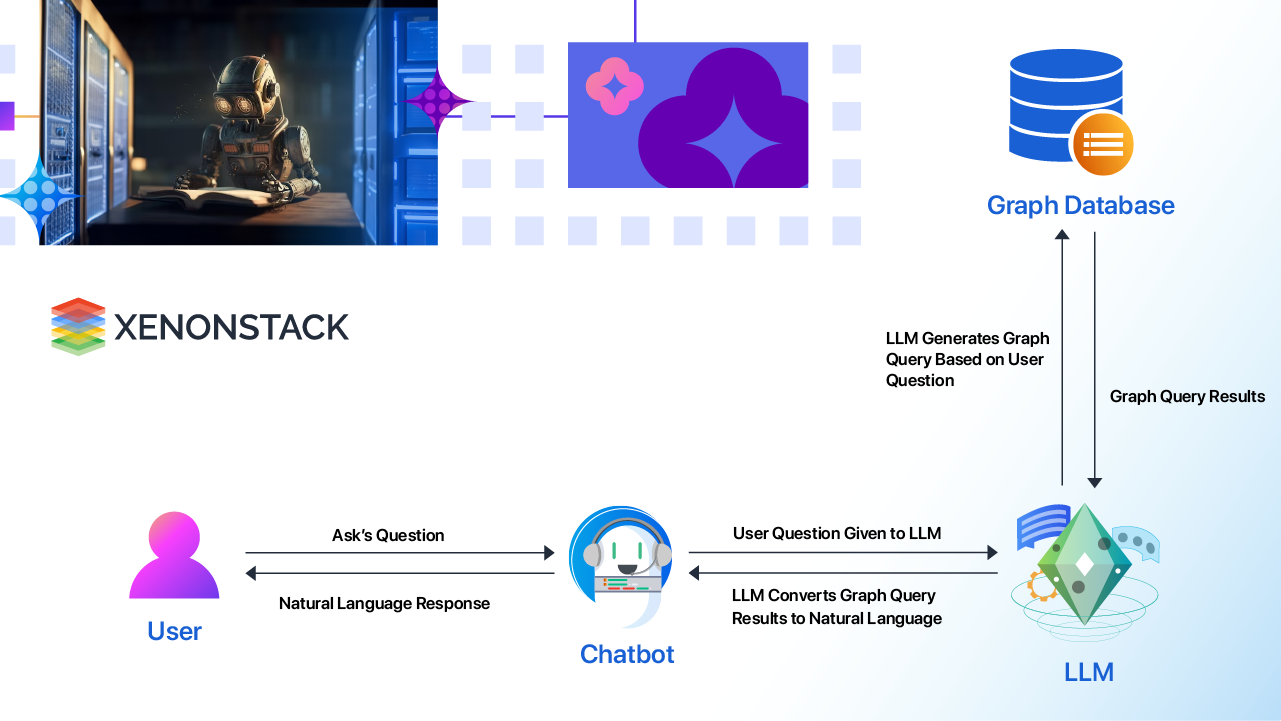

Integrating LLMs and Knowledge Graphs

Bringing LLMs and Knowledge Graphs together allows enterprises to achieve outcomes neither can deliver alone.

Key benefits include:

- Grounded Responses – LLMs generate fluent answers, while Knowledge Graphs validate facts for accuracy.
- Contextual Queries – Graphs provide the right context, reducing hallucinations.
- Actionable Insights – Outputs are linked directly to enterprise systems for execution.
- Continuous Learning – Graph updates keep the AI system aligned with real-time knowledge.
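One simple way to picture the "validate facts" step is an exact-match check: the claims in an LLM's draft answer, expressed as triples, are compared against the facts stored in the graph. The sketch below assumes the claims have already been extracted into structured form (a real system might ask the LLM to emit them); `Claim`, `validate_claims`, and the supplier data are hypothetical.

```python
from __future__ import annotations

from typing import NamedTuple


class Claim(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Hypothetical enterprise facts; in practice these would come from a graph database.
KNOWN_FACTS: set[Claim] = {
    Claim("Supplier-A", "ships_via", "Port-1"),
    Claim("Port-1", "has_status", "delayed"),
    Claim("Supplier-B", "ships_via", "Port-2"),
}


def validate_claims(claims: list[Claim], facts: set[Claim]) -> dict[str, list[Claim]]:
    """Split claims from an LLM answer into graph-supported and unsupported lists."""
    report: dict[str, list[Claim]] = {"supported": [], "unsupported": []}
    for claim in claims:
        key = "supported" if claim in facts else "unsupported"
        report[key].append(claim)
    return report


# Stand-in for structured claims extracted from an LLM's draft answer.
llm_claims = [
    Claim("Supplier-A", "ships_via", "Port-1"),
    Claim("Supplier-B", "ships_via", "Port-1"),  # absent from the graph: possible hallucination
]
report = validate_claims(llm_claims, KNOWN_FACTS)
print(report["unsupported"])
```

An unsupported claim is not necessarily false; it is only absent from the graph. A system built this way would typically flag it for review or regenerate the answer rather than silently discard it.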

For example, in Akira AI’s agentic architecture, an LLM may interpret a query such as:

“What risks exist in our supply chain?”

The Knowledge Graph grounds this query in supplier data, shipping delays, and geopolitical events. The Agentic AI system then provides an answer and orchestrates automated actions — such as rerouting logistics or alerting procurement teams.
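The supply-chain example can be sketched as a multi-hop traversal: starting from the company, follow sourcing and shipping relationships until an edge points at a known risk, and keep the full path so the answer can cite its evidence. This is a generic illustration under stated assumptions, not Akira AI's implementation; the graph contents, the `affected_by` relation, and the helper names are hypothetical, and the resulting prompt would be passed to whichever LLM the system uses.

```python
from __future__ import annotations

from collections import deque

# Hypothetical supply-chain graph: node -> list of (relation, neighbor).
GRAPH: dict[str, list[tuple[str, str]]] = {
    "OurCompany": [("sources_from", "Supplier-A"), ("sources_from", "Supplier-B")],
    "Supplier-A": [("ships_via", "Port-1")],
    "Supplier-B": [("located_in", "Region-East")],
    "Port-1": [("affected_by", "Shipping delay")],
    "Region-East": [("affected_by", "Trade sanctions")],
}


def find_risk_paths(start: str, risk_relation: str = "affected_by",
                    max_hops: int = 4) -> list[list[str]]:
    """Breadth-first search for paths from `start` that end in a `risk_relation` edge.

    Each path alternates node and relation labels so it can be shown as evidence.
    """
    paths: list[list[str]] = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if (len(path) - 1) // 2 >= max_hops:  # number of edges walked so far
            continue
        for relation, neighbor in GRAPH.get(node, []):
            if neighbor in path[::2]:  # path[::2] holds the nodes; skip cycles
                continue
            new_path = path + [relation, neighbor]
            if relation == risk_relation:
                paths.append(new_path)  # reached a risk: record the evidence chain
            else:
                queue.append(new_path)
    return paths


def build_grounded_prompt(question: str, paths: list[list[str]]) -> str:
    """Format graph evidence as context lines the LLM is asked to answer from."""
    evidence = "\n".join("- " + " -> ".join(p) for p in paths)
    return f"Answer using only these facts:\n{evidence}\n\nQuestion: {question}"


paths = find_risk_paths("OurCompany")
prompt = build_grounded_prompt("What risks exist in our supply chain?", paths)
print(prompt)
```

Returning whole paths rather than bare risk names is what makes the answer explainable: each evidence line shows how a shipping delay or a sanction connects back to the company.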

Enterprise Applications of Unified LLMs and Knowledge Graphs

1. Customer Experience Optimisation

By integrating conversational intelligence with contextual personalisation, enterprises deliver seamless customer journeys. Telecom providers, for instance, can combine LLM-powered support with Knowledge Graph-driven account history, ensuring that troubleshooting, billing inquiries, and upsell recommendations are personalised and accurate.

2. Cybersecurity and Autonomous SOC

Solutions like Metasecure.ai and Akira AI show how Agentic AI empowers Security Operations Centers (SOCs). Threat intelligence graphs map adversarial patterns, while LLMs interpret logs and alerts. The result is faster detection, automated containment, and a reduced mean time to respond (MTTR).

3. Data Governance and Compliance

Agentinstruct.ai ensures data quality, lineage, and compliance by integrating Knowledge Graph-based governance with LLM-based interfaces. Compliance officers can query regulations in natural language and receive context-aware, evidence-backed answers tied to enterprise policies.

4. Financial Operations and Cost Optimisation

Xenonify applies this approach in FinOps. By aligning LLM-powered reporting with Knowledge Graph cost structures, enterprises can optimise multi-cloud expenses, predict AI workload spending, and create accurate budget forecasts.

5. Enterprise Search and Knowledge Discovery

Agent Search illustrates how unifying these technologies enhances enterprise search. Employees can ask complex natural language questions, and the system provides precise, evidence-backed answers instead of generic search results.

6. Insurance Claims and Risk Assessment

In insurance, LLMs can process unstructured claim reports, while Knowledge Graphs validate them against policy conditions and fraud-detection patterns. Together, they let Agentic AI systems speed up claims processing, risk scoring, and fraud prevention.

7. Energy and Smart Infrastructure

Energy providers use Knowledge Graphs to map assets, consumption data, and grid risks. Combined with LLMs, these graphs let agents predict outages, recommend energy optimisations, and automate grid responses. This is critical for sustainability and smart city initiatives.

Benefits of Unifying LLMs and Knowledge Graphs

Integrating LLMs with Knowledge Graphs gives enterprises the confidence to deploy AI that is not only powerful, but also reliable, transparent, and action-oriented.

Key benefits include:

- Trustworthy AI → Verified, context-grounded outputs.
- Explainable Intelligence → Clear reasoning paths improve user trust.
- Real-Time Adaptability → Continuous updates ensure relevance.
- Scalable Decision-Making → Supports automation across departments and functions.
- Cross-Industry Flexibility → Applicable across finance, retail, healthcare, manufacturing, energy, and more.

With Akira AI by XenonStack, organizations can move beyond generic generative models and build Agentic AI systems that ground every action in enterprise-specific knowledge, ensuring trust, scalability, and measurable outcomes.

Agentic AI Architecture: Where LLMs Meet Knowledge Graphs

The unified architecture of Agentic AI brings structure and intelligence to enterprise automation through a layered pipeline:

- Input Layer → Captures enterprise signals such as queries, events, or alerts.
- LLM Layer → Handles intent recognition, summarization, and natural language understanding.
- Knowledge Graph Layer → Validates responses, enriches them with context, and links data across systems.
- Agent Orchestration → Executes tasks, coordinates workflows, and enables multi-agent collaboration.
- Execution Layer → Delivers automated outcomes across IT, customer support, cybersecurity, and business operations.
- Feedback Loop → Continuously updates the Knowledge Graph, improving system intelligence and adaptability.

This architecture enables autonomous enterprise operations, ensuring real-time responses without human bottlenecks while maintaining transparency, trust, and explainability.
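The layered pipeline above can be sketched end to end with one function per layer. This is a toy illustration under loud assumptions: a keyword rule stands in for the LLM layer, the entity is assumed to be extracted already, and `execute` only returns a string where a real agent would call IT or security tools. All names (`run_pipeline`, `isolate_host`, `host-7`, and so on) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Event:
    """Input layer: an enterprise signal such as a query or an alert."""
    source: str
    text: str
    entity: str  # assumption: already extracted, e.g. by an LLM


@dataclass
class KnowledgeGraph:
    """Knowledge Graph layer: facts stored as (subject, predicate, object) triples."""
    triples: set[tuple[str, str, str]] = field(default_factory=set)

    def context_for(self, entity: str) -> list[tuple[str, str, str]]:
        """All facts in which `entity` appears as subject or object."""
        return sorted(t for t in self.triples if entity in (t[0], t[2]))


def detect_intent(event: Event) -> str:
    """LLM layer stand-in: a keyword rule in place of a real model call."""
    return "contain_threat" if "alert" in event.text.lower() else "answer_question"


def orchestrate(intent: str, context: list[tuple[str, str, str]]) -> str:
    """Agent orchestration: act autonomously only when the graph supplies evidence."""
    if not context:
        return "escalate_to_human"  # no grounding, so hand off instead of acting
    return "isolate_host" if intent == "contain_threat" else "draft_answer"


def execute(action: str, entity: str) -> str:
    """Execution layer stand-in: a real system would call ticketing or security tools."""
    return f"{action}:{entity}"


def run_pipeline(event: Event, kg: KnowledgeGraph) -> str:
    intent = detect_intent(event)                          # LLM layer
    context = kg.context_for(event.entity)                 # Knowledge Graph layer
    action = orchestrate(intent, context)                  # agent orchestration
    outcome = execute(action, event.entity)                # execution layer
    kg.triples.add((event.entity, "last_action", action))  # feedback loop
    return outcome


kg = KnowledgeGraph({("host-7", "part_of", "payments-cluster")})
result = run_pipeline(Event("siem", "Unusual traffic alert", "host-7"), kg)
print(result)  # isolate_host:host-7
```

The orchestration step declines to act when the graph returns no context, which is one way to keep actions grounded, and the feedback loop writes each action back into the graph so later events can take it into account.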

Real-World Impact Across Industries

- Healthcare → LLMs grounded in medical knowledge graphs help doctors with diagnosis support, patient risk scoring, and drug recommendations.
- Retail → Contextual personalisation drives customer loyalty and revenue growth.
- Manufacturing → Supply chain resilience improves with predictive risk analysis.
- Government → Transparent AI enhances public trust in digital services.
- Energy → Smart grid automation ensures sustainability and efficiency.
- Insurance → Faster claim settlement, reduced fraud, and better risk pricing.

These examples show that Agentic AI is not limited to chatbots; it is a strategic enabler of enterprise transformation.

XenonStack and Akira AI: Driving the Future of Agentic AI

XenonStack has positioned Akira AI as a context-first agentic platform where LLMs and Knowledge Graphs form the foundation of enterprise autonomy.

Key differentiators include:

- Autonomous Agents for IT, Security, Customer Ops, and FinOps.
- Knowledge Graph-Driven Context for reliable, explainable insights.
- Seamless Integrations with platforms like Databricks, Jira, and ServiceNow.
- Continuous Learning via real-time graph enrichment.

By unifying LLMs and Knowledge Graphs, Akira AI enables enterprises to deploy reliable AI agents at scale, ensuring that outputs are always contextually accurate, explainable, and actionable.

The Road Ahead: Future of Agentic AI

The future of Agentic AI goes beyond text-based reasoning. The next frontier is multimodal intelligence, where LLMs process images, video, and sensor data alongside Knowledge Graph reasoning. This will open new applications in autonomous vehicles, industrial IoT, and digital twins.

Enterprises will also demand AI governance and compliance frameworks. Here, Knowledge Graphs play a critical role by enabling explainability, auditability, and regulatory alignment. As scrutiny from regulators grows, explainable grounding will shift from a nice-to-have to a non-negotiable requirement.

Another frontier is real-time integration. As organizations adopt event-driven architectures, Agentic AI must be able to reason and act within milliseconds. This will be crucial for domains like cybersecurity, fraud detection, and predictive maintenance, where rapid orchestration is essential.

By investing in this convergence today, enterprises can future-proof their digital strategies. XenonStack and Akira AI are already building this foundation, enabling organizations to confidently achieve autonomous operations.

Conclusion: Unifying LLMs and Knowledge Graphs for Agentic AI

Unifying LLMs and Knowledge Graphs marks a turning point in the shift from generative AI hype to practical, agentic intelligence. The future is not just about producing fluent outputs, but about executing intelligent, explainable actions that enterprises can trust.

With Akira AI by XenonStack, enterprises gain a platform that delivers autonomous, transparent, and scalable AI systems. This integration is more than an enhancement — it is the core strategy for achieving enterprise-grade Agentic AI.

By embracing this approach, organizations unlock the next era of AI adoption, where decisions are generated, grounded, explained, and executed — delivering measurable outcomes at scale.

Next Steps with Agentic AI

Talk to our experts about unifying LLMs and Knowledge Graphs with Agentic AI. See how Akira AI by XenonStack helps enterprises automate operations, enhance decision intelligence, and drive autonomous transformation with trust and explainability.
