Overview of Persistent Storage

Persistent Storage is critical for running stateful containers. Kubernetes is an open-source system for automating the deployment and management of containerized applications. There are many storage options available. In this blog, we are going to discuss the most widely used storage, which can be used on-premises or in the cloud, such as GlusterFS, CephFS, Ceph RBD, OpenEBS, NFS, GCE Persistent Storage, AWS EBS, NFS, and Azure Disk.Open Source Distributed Object Storage Server written in Go, designed for Private Cloud infrastructure providing S3 storage functionality. Click to explore about, Minio Distributed Object Storage Architecture and Performance

Why Persistent Storage Solutions are important?

To follow this guide, you need -- Kubernetes

- Kubectl

- DockerFile

- Container Registry

- Storage technologies that will be used -

- OpenEBS

- CephFS

- GlusterFS

- AWS EBS

- Azure Disk

- GCE persistent storage

- CephRBD

Kubernetes

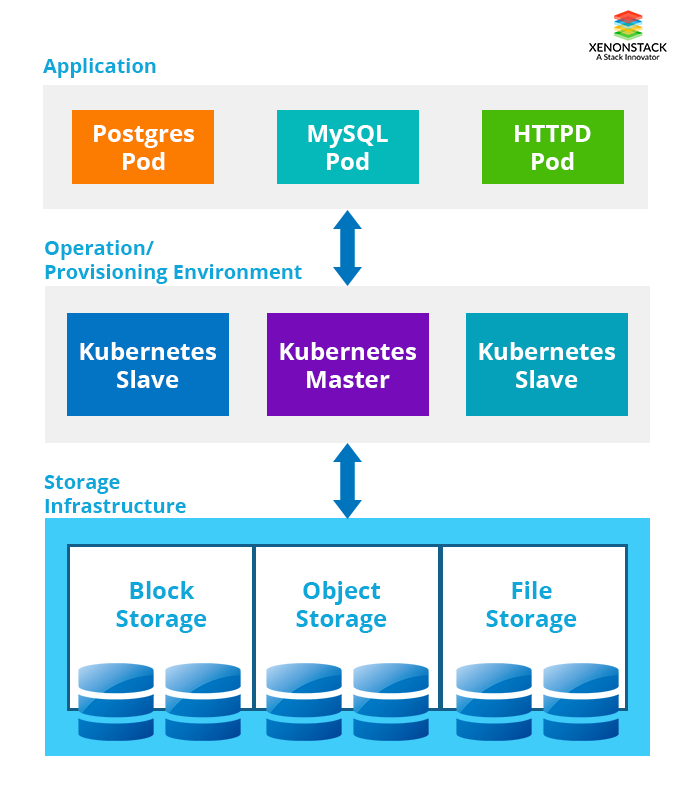

Kubernetes is one of the best open-source orchestration platforms for deploying, autoscaling and managing containerized applications.

Kubectl

Kubectl is a command-line utility for managing Kubernetes clusters remotely or locally. Use this link to configure Kubectl.

Container Registry

Container Registry is the repository where we store and distribute docker images. Several repositories are available online, including DockerHub, Google Cloud, Microsoft Azure, and AWS Elastic Container Registry (ECR). Container storage is ephemeral, meaning all the data in the container is removed when it crashes or restarted. Persistent storage is necessary for stateful containers to run applications like MySQL, Apache, PostgreSQL, etc., so we don’t lose our data when a container stops.Running Containers at any real-world scale requires container orchestration, and scheduling platform like Docker Swarm, Apache Mesos, and AWS ECS. Click to explore about, Laravel Docker Application Development

3 Types of Storage

- Block Storage is the most commonly used storage and is very flexible. It stores chunks of data in blocks, each identified by its address. Because of its performance, block storage is mostly used for databases.

- File Storage stores data as files; each file is referenced by a filename and has attributes associated with it. NFS is the most commonly used file system. We can use file storage when we want to share data with multiple containers.

- Object Storage: Object storage is different from file storage and block storage. In object storage, data is stored as an object and is referenced by object ID. It is massively scalable and provides more flexibility than block storage, but performance is slower. Amazon S3, Swift and Ceph Object Storage are the most commonly used object storage.

7 Emerging Storage Technologies

The below highlighted are the 7 Emerging Storage Technologies:

OpenEBS Container Storage

OpenEBS is a pure container-based storage platform available for Kubernetes. Using OpenEBS, we can easily use persistent storage for stateful containers, and the process of provisioning a disk is automated. It is a scalable storage solution that can run anywhere, from the cloud to on-premises hardware.Ceph Storage Cluster

Ceph is an advanced and scalable software-defined storage system that fits best with today’s requirements, providing object storage, block storage, and file systems on a single platform. Ceph can also be used with Kubernetes. We can either use CephFS or CephRBD for persistent storage for Kubernetes pods.- Ceph RBD is the block storage we assign to each pod. In read-write mode, CephRBD can’t be shared with two pods at a time.

- CephFS is a POSIX-compliant file system service which stores data on top Ceph cluster. We can share CephFS with multiple pods at the same time. CephFS is now announced as stable in the latest Ceph release.

GlusterFS Storage Cluster

GlusterFS is a scalable network file system suitable for cloud storage. It is also a software-defined storage which runs on commodity hardware just like Ceph but it only provides File systems, and it is similar to CephFS. Glusterfs provides more speed than Ceph as it uses larger block size as compared to ceph i.e Glusterfs uses a block size of 128kb whereas ceph uses a block size of 64Kb.AWS EBS Block Storage

Amazon EBS provides persistent block storage volumes which are attached to EC2 instances. AWS provides various options for EBS, so we can choose the storage according to requirements depending on parameters like the number of IOPS, storage type(SSD/HDD), etc. We mount AWS EBS with Kubernetes pods for persistent block storage using AWSElasticBlockStore. EBS disks are automatically replicated over multiple AZ’s for durability and high availability.GCEPersistentDisk Storage

GCEPersistentDisk is a durable and high-performance block storage used with the Google Cloud Platform. We can use it either with Google Compute Engine or Google Container Engine. We can choose from HDD or SSD and increase the volume disk size as the need increases. GCEPersistentDisks are automatically replicated across multiple data centres for durability and high availability. We mount GCEPersistentDisk with Kubernetes pods for persistent block storage using GCEPersistentDisk.Azure Disk Storage

An Azure Disk is also durable and high-performance block storage like AWS EBS and GCEPersistentDisk. It provides the option to choose from SSD or HDD for your environment and features like Point-in-time backup, easy migration, etc. An AzureDiskVolume is used to mount an Azure Data Disk into a Pod. Azure Disks are replicated within multiple data centres for high availability and durability.Network File System Storage

NFS is one of the oldest file systems, providing the facility to share a single file system on the network with multiple machines. There are several NAS devices available for high performance, or we can make our system a NAS. We use NFS for persistent storage for pods, and data can be shared with multiple instances.RAID storage uses different disks to provide fault tolerance, to improve overall performance, and to increase storage size in a system. Click to explore about, Types of RAID Storage for Databases

Continuous Deployment of Storage Solutions

Now, we will walk through the deployments of storage solutions described above, starting with Ceph.Ceph Deployment

For Ceph, we need an existing Ceph cluster. Either we have to deploy the Ceph cluster on Bare Metal or we can use Docker Containers. Then, we install the Ceph client on a Kubernetes host. For CephRBD, we have to create a separate pool and user for the created pool.- Creating new/separate pool

- Creating a user with full access to the Kube pool

- Get the authentication key from the Ceph cluster for the user client.kubep

- Creating a new secret in default namespace in Kubernetes

# kubectl create secret generic ceph-secret-kube --type="kubernetes.io/rbd" --from- literal=key='AQBvPvNZwfPoIBAAN9EjWaou6S4iLVg/meA0YA==’ --namespace=default

- kube-controller-manager must have the privilege to provision storage and it needs admin key from Ceph to do that. For that, we must get admin key

- Creating a new secret for admin in default namespace in Kubernetes

# kubectl create secret generic ceph-secret --type="kubernetes.io/rbd" --from-literal=key='AQAbM/NZAA0KHhAAdpCHwG62kE0zKGHnGybzgg==' --namespace=ceph-storage

- After adding secrets, we have to define a new Storage Class by copying the following content in the file named ceph-rbd-storage.yml

apiVersion: storage.k8s.io / v1

kind: StorageClass

metadata:

name: rbd

provisioner: kubernetes.io / rbd

parameters:

monitors: 192.168 .122 .110: 6789

adminID: admin

adminSecretName: ceph - secret

pool: kube

userId: kubep

userSecretName: ceph - secret - kube

fsType: ext4

imageFormat: "2"

imageFeatures: "layering"

- Creating a volume using rbd StorageClass in the file named it ceph-vc.yml -

apiVersion: v1

kind: PersistentVolume

metadata:

name: apache - pv

namespace: ceph - storage

spec:

capacity:

storage: 100 Mi

accessModes:

-ReadWriteMany

rbd:

monitors:

-192.168 .122 .110: 6789

pool: kube

image: myvol

user: admin

secretRef:

name: ceph - secret

fsType: ext4

readOnly: false

- Creating a volume claim using rbd StorageClass in the file named it ceph-pvc.yml -

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: apache-pvc

spec:

accessModes:

- ReadWriteMany

resources:

requests:

Storage: 500Mi

- Now, we are going to launch Apache Pod using the claimed volume. Create a new file with the following content -

apiVersion: v1

kind: ReplicationController

metadata:

name: apache

spec:

replicas: 1

selector:

app: apache

template:

metadata:

name: apache

labels:

app: apache

spec:

containers:

-name: apache

image: bitnami / apache

ports:

-containerPort: 80

volumeMounts:

-mountPath: /var/www / html

name: apache - vol

volumes:

-name: apache - vol

persistentVolumeClaim:

claimName: apache - pvc

- For CephFS, we are going to create a Ceph pool -

- A new secret for the Ceph admin user is being created in the default namespace in Kubernetes. Using

# kubectl create secret generic ceph-secret --type="kubernetes.io/rbd" --from-literal=key='AQDkTeBZLDwlORAA6clp1vUBTGbaxaax/Mwpew==' --namespace=default

- After adding secrets, we have to copy the following content in the file named ceph-fs-storage.yml

apiVersion: v1

kind: ReplicationController

metadata:

name: apache

spec:

replicas: 1

selector:

app: apache

template:

metadata:

name: apache

labels:

app: apache

spec:

containers:

-name: apache

image: apache

ports:

-containerPort: 80

volumeMounts:

-mountPath: /var/www / html

name: mypvc

volumes:

-name: mypvc

cephfs:

monitors:

-192.168 .100 .26: 6789

user: admin

secretRef:

name: ceph - secret

GlusterFS Deployment

We can deploy the Glusterfs cluster on Bare Metal servers or containers using Heketi and create a GlusterFS volume afterward.- Creating the following directory on the servers where we want to keep the data.

- Then, we will create a volume using -

- Now, we have to create cluster endpoints for Kubernetes. By adding the content to a file named gluster-endpoint.yaml

kind: Endpoints

apiVersion: v1

metadata:

name: glusterfs - cluster

subsets:

-addresses:

-ip: 10.240 .106 .152

ports:

-port: 1 - addresses:

-ip: 10.240 .79 .157

ports:

-port: 1

- Create the cluster service in Kubernetes by adding the following content in glusterfs-service.yaml

kind: Service

apiVersion: v1

metadata:

name: glusterfs-cluster

spec:

ports:

- port: 1

- Then we will launch Apache pod using gluster as backend storage and add the following content to file apache-pod.yaml -

apiVersion: v1

kind: Pod

metadata:

name: glusterfs

spec:

containers:

- name: glusterfs

image: apache

volumeMounts:

- mountPath: "/var/www/html"

name: glusterfsvol

volumes:

- name: glusterfsvol

glusterfs:

endpoints: glusterfs-cluster

path: kube_vol

readOnly: true

NFS Deployment

For the NFS server to be consumed by the Kubernetes pod. First, we will create persistent volume by adding the following content in nfs-pv.yaml.apiVersion: v1

kind: PersistentVolume

metadata:

name: nfs-pv

spec:

capacity:

storage: 10Gi

accessModes:

- ReadWriteMany

persistentVolumeReclaimPolicy: Retain

nfs:

path: /data/nfs

server: nfs.server1

readOnly: false

- Creating persistent volume claim by adding the following content in nfs-pvc.yaml -

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: nfs - pvc

spec:

accessModes:

-ReadWriteMany

resources:

requests:

storage: 10 Gi

- Now we will launch web-server pod with NFS persistent volume by adding the following content in apache-server.yaml

apiVersion: v1

kind: ReplicationController

metadata:

name: apache

spec:

replicas: 1

selector:

app: apache

template:

metadata:

name: apache

labels:

app: apache

spec:

containers:

-name: apache

image: apache

ports:

-containerPort: 80

volumeMounts:

-mountPath: /var/www / html

name: nfs - vol

volumes:

-name: nfs - vol

persistentVolumeClaim:

claimName: nfs - pvc

AWS EBS Deployment

For using Amazon EBS in Kubernetes pod first, we have to make sure that -- The nodes on which Kubernetes pods are running are Amazon EC2 instances.

- EC2 instances need to be in the same region and AZ as of EBS.

- First, we have to create storage class in kubernetes for EBS disk by adding the following content in awsebs-storage.yaml

kind: StorageClass

apiVersion: storage.k8s.io / v1

metadata:

name: slow

provisioner: kubernetes.io / aws - ebs

parameters:

type: io1

zones: us - east - 1 d, us - east - 1 c

iopsPerGB: "10"

- Then, we will create PVC by adding the following content in aws-ebs.yaml -

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: aws - ebs

annotations:

volume.beta.kubernetes.io / storage - class: standard

spec:

accessModes:

-ReadWriteOnce

resources:

requests:

storage: 10 Gi

storageClassName: io1

- Now we will launch apache-webserver pod with AWS EBS as persistent storage by adding the following content apache-web-ebs.yaml

apiVersion: v1

kind: ReplicationController

metadata:

name: apache

spec:

replicas: 1

selector:

app: apache

template:

metadata:

name: apache

labels:

app: apache

spec:

containers:

-name: apache

image: apache

ports:

-containerPort: 80

volumeMounts:

-mountPath: /var/www / html

name: aws - ebs - storage

volumes:

-name: aws - ebs - storage

persistentVolumeClaim:

claimName: aws - ebs

Azure Disk Deployment

- This is for using the Azure disk as persistent storage for Kubernetes pods. We have to create a storage class by adding the following content in the sc-azure file.yaml

kind: StorageClass

apiVersion: storage.k8s.io / v1beta1

metadata:

name: slow

provisioner: kubernetes.io / azure - disk

parameters:

skuName: Standard_LRS

location: eastus

- After creating storage class we are going to create Persistent Volume claim by adding the following content in azure-pvc.yaml.

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: azure - disk - storage

annotations:

volume.beta.kubernetes.io / storage - class: slow

spec:

accessModes:

-ReadWriteOnce

resources:

requests:

storage: 10 Gi

- Now we will launch Apache-webserver pod with AZURE DISK as persistent storage by adding the following content apache-web-azure.yaml.

apiVersion: v1

kind: ReplicationController

metadata:

name: apache

spec:

replicas: 1

selector:

app: apache

template:

metadata:

name: apache

labels:

app: apache

spec:

containers:

-name: apache

image: apache

ports:

-containerPort: 80

volumeMounts:

-mountPath: /var/www / html

name: azure - disk

volumes:

-name: azure - disk

persistentVolumeClaim:

claimName: azure - disk - storage

GCEPersistantDisk Deployment

For using GCEPersistantDisk in Kubernetes pod first, we have to make sure that.- The nodes on which Kubernetes pods are running are GCE instances.

- EC2 instances must be in the same GCE project and zone as the PD.

- First, we have to create a storage class in Kubernetes for the GCEPersistantDisk disk by adding the following content in gcepd-storage.yaml.

kind: StorageClass

apiVersion: storage.k8s.io / v1beta1

metadata:

name: fast

provisioner: kubernetes.io / gce - pd

parameters:

type: pd - ssd

- We will create a PVC by adding the following content in gcepd-pvc.yaml.

apiVersion: extensions / v1beta1

kind: Deployment

metadata:

name: apache

spec:

template:

metadata:

name: apache

labels:

app: apache

spec:

containers:

-name: apache

image: bitnami / apache

ports:

-containerPort: 80

volumeMounts:

-mountPath: /opt/bitnami / apache / htdocs

name: apache - vol

volumes:

-name: apache - vol

gcePersistentDisk:

pdName: gce - disk

fsType: ext4

- After creating a persistent disk, we are going to use it to store web data for the web server pod by adding the following content to the file named "web-data."

OpenEBS Deployment

- For OpenEBS cluster setup, click on this link for the setup guide. First, we are going to start the OpenEBS Services

using Operator by -

# kubectl create -f https://github.com/openebs/openebs/blob/master/k8s/openebs-operator.yaml- Then, we are going to create some default storage classes by -

# kubectl create -f https://raw.githubusercontent.com/openebs/openebs/master/k8s/openebs-storageclasses.yaml

- Now, we are going to launch jupyter with OpenEBS persistent volume by adding the following content in the file named demo-openebs-jupyter.yaml -

apiVersion: apps

kind: Deployment

metadata:

name: jupyter - server

namespace: default

spec:

replicas: 1

template:

metadata:

labels:

name: jupyter - server

spec:

containers:

-name: jupyter - server

imagePullPolicy: Always

image: satyamz / docker - jupyter: v0 .4

ports:

-containerPort: 8888

env:

-name: GIT_REPO

value: https: //github.com/vharsh/plot-demo.git

volumeMounts:

-name: data - vol

mountPath: /mnt/data

volumes:

-name: data - vol

persistentVolumeClaim:

claimName: jupyter - data - vol - claim

-- -

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: jupyter - data - vol - claim

spec:

storageClassName: openebs - jupyter

accessModes:

-ReadWriteOnce

resources:

requests:

storage: 5 G

-- -

apiVersion: v1

kind: Service

metadata:

name: jupyter - service

spec:

ports:

-name: ui

port: 8888

nodePort: 32424

protocol: TCP

selector:

name: jupyter - server

sessionAffinity: None

type: NodePort

Features Comparison for Storage Solutions

|

Storage Technologies |

Read Write Once |

Read Only Many |

Read Write Many |

Deployed On |

Internal Provisioner |

Format |

Provider |

Scalability Capacity Per Disk |

Network Intensive |

|---|---|---|---|---|---|---|---|---|---|

|

CephFS |

Yes |

Yes |

Yes |

On-Premises/Cloud |

No |

File |

Ceph Cluster |

Upto Petabytes |

Yes |

|

CephRBD |

Yes |

Yes |

No |

On-Premises/Cloud |

Yes |

Block |

Ceph Cluster |

Upto Petabytes |

Yes |

|

GlusterFS |

Yes |

Yes |

Yes |

On-Premises/Cloud |

Yes |

File |

GlusterFS Cluster |

Upto Petabytes |

Yes |

|

AWS EBS |

Yes |

No |

No |

AWS |

Yes |

Block |

AWS |

16TB |

No |

|

Azure Disk |

Yes |

No |

No |

Azure |

Yes |

Block |

Azure |

4TB |

No |

|

GCE Persistent Storage |

Yes |

No |

No |

Google Cloud |

Yes |

Block |

Google Cloud |

64TB/4TB (Local SSD) |

No |

|

OpenEBS |

Yes |

Yes |

No |

On-Premises/Cloud |

Yes |

Block |

OpenEBS Cluster |

Depends on the Underlying Disk |

Yes |

|

NFS |

Yes |

Yes |

Yes |

On-Premises/Cloud |

No |

File |

NFS Server |

Depends on the Shared Disk |

Yes |

Storage Class in Kubernetes

First, we need to understand the Kubernetes volume concept to understand the storage class concept. In simple terms, a Kubernetes volume is a directory where our data is stored; containers inside pods in Kubernetes use this volume to persist their data. Kubernetes supports many types of volumes, such as persistent, Ephemeral, and Projected Volumes.

Here, the Storage class concept will be around persistent volume type, which we will discuss in this document. In persistence volume, we have two things to discuss before moving towards storage class, i.e., two APIs provided by Kubernetes PersistentVolumeClaim and PersistenceVolume; the reason for providing two APIs instead of directly provisioning storage to the pod is to hide some information like from where storage has been provided and where it is used.

An open-source system, developed by Google, an orchestration engine for managing containerized applications over a cluster of machines. Taken From Article, Kubernetes Deployment Tools and Best Practices

Persistent Volume is either created by the administrator or dynamically provisioned with the help of the Storage class; this resource is available at the cluster level, just like the node and any other resources available at the cluster level.

PersistentVolumeClaim is the resource from which we request the storage PersistenVolumeClaim uses PersistencVolume just like pod uses node resources. PersistentVolumeClaim allows users to utilize abstract storage provided by the administrator in the form of PersistentVolume.

What is Storage Class in Kubernetes?

The storage class is the Kubernetes resource from which we achieve dynamic, persistent volume provisioning. The storage class concept in Kubernetes allows the user(administrator) to provide the type of storage they want to offer. To do all this, the administrator needs to create the class.

Below is the way we can create the storage class in Kubernetes.

apiVersion: storage.k8s.io/v1

kind: StorageClass

Metadata:

name: standard

provisioner: kubernetes.io/aws-ebs

Parameters:

type: gp2

reclaimPolicy: Retain

allowVolumeExpansion: true

Mount options:

- debug

volumeBindingMode: Immediate

Provisioner, parameters, and reclaimPolicy: these three fields must be in every storage class the administrator makes.

In the Provisioner section, we assign different volume plugins; our PVs are created using this plugin. In the above example, we have used aws-ebs

Importance of Storage Class in Kubernetes?

In Kubernetes, storage classes are an essential concept that provides a way to define different types of storage that can be used by persistent volume claims (PVCs) in a cluster.

Here are some of the critical reasons why storage classes are essential in Kubernetes:

- Flexibility: Storage classes allow the definition of multiple types of storage, such as network-attached storage (NAS), block storage, and object storage, and make them available to applications. This gives the flexibility to choose the type of storage that best fits an application's needs.

- Automation: Kubernetes storage classes can be automated, which means that when a user creates a new PVC, Kubernetes can dynamically provision a new volume using the appropriate storage class. This can save time and reduce errors, as the user cannot manually create and manage the volumes.

- Performance: Storage classes can also define performance characteristics such as disk speed, IOPS, and latency. This helps ensure that the storage resources used by the application meet the necessary performance requirements.

- Storage Management: Storage classes can also be used to manage the lifecycle of storage resources, such as by defining retention policies and backup schedules. This makes it easier to manage and monitor storage resources in the cluster.

Serverless Framework is serverless computing to build and run applications and services without thinking about the servers. Taken From Article, Kubeless - Kubernetes Native Serverless Framework

Types of Storage Classes

There are several different types of storage classes in Kubernetes, each with its own set of features and use cases. Let's explore each of these types in more detail.

Standard Storage Class

The standard storage class is the default storage class in Kubernetes. It is used when no other storage class is specified. This storage class uses the default settings of the underlying infrastructure, which may be a cloud provider, a local disk, or a network-attached storage device. The standard storage class is ideal for applications that do not require specific performance or availability requirements.

Managed Storage Class

The managed storage class provides managed storage resources, such as Amazon Elastic Block Store (EBS) or Google Cloud Persistent Disks. The cloud provider manages These storage resources and offers high availability and durability. Managed storage classes are ideal for applications that require reliable storage with high availability and durability.

Types of Managed Storage Classes in Kubernetes?

Let's see some managed storage classes in Kubernetes:

AWS EBS Storage Class

The AWS EBS storage class is used to provision and manage storage volumes in the AWS Elastic Block Store. It is commonly used by developers deploying their applications on the Amazon Web Services (AWS) cloud platform.

Azure Disk Storage Class

The Azure Disk storage class provides and manages storage volumes in Microsoft Azure Disk Storage. This storage class is commonly used by developers deploying their applications on the Microsoft Azure cloud platform.

GCE Persistent Disk Storage Class

The GCE Persistent Disk storage class is used to provision and manage storage volumes in the Google Compute Engine Persistent Disk. This storage class is commonly used by developers deploying their applications on the Google Cloud Platform (GCP).

Network File System (NFS) Storage Class

The Network File System (NFS) storage class provides shared storage accessible from multiple nodes in the cluster. This storage class is ideal for shared storage applications like databases or file servers. However, to prevent data loss, the NFS server must be highly available and reliable.

Managing Storage Classes in Kubernetes

Kubernetes provides various tools for managing storage classes in a Kubernetes cluster. Let's examine some of the standard management tasks that need to be performed on storage classes in Kubernetes.

Creating a Storage Class

To create a storage class in Kubernetes, one must define the storage class specifications in a YAML file and then use the kubectl to apply the command to create the class.

For example, here is a sample YAML file that defines an AWS EBS storage class:

apiVersion: storage.k8s.io/v1

kind: StorageClass

Metadata:

name: aws-ebs

provisioner: kubernetes.io/aws-ebs

Parameters:

type: gp2

Modifying a Storage Class

To modify a storage class in Kubernetes, you need to update the specifications in the YAML file and then use the kubectl apply command to apply the changes.

For example, if someone wants to change an AWS EBS storage class type from gp2 to io1, one can update the parameters section in the YAML file and then apply the changes using the kubectl apply command.

Deleting a Storage Class

To delete a storage class in Kubernetes, use the kubectl delete command and specify the name of the storage class you want to delete.

For example, if suppose one wants to delete an NFS storage class named nfs-storage, then one can use the following command:

kubectl delete storage-class nfs-storage

.webp?width=1921&height=622&name=usecase-banner%20(1).webp)