Modern enterprises operate in increasingly complex environments powered by Cloud Native Engineering, Kubernetes Operations, distributed systems, and real-time data pipelines. As digital platforms scale, ensuring consistent performance, reliability, and availability becomes a significant challenge—making Site Reliability Engineering (SRE) a core discipline for production excellence. Yet with microservices, hybrid cloud, and multi-cluster architectures, SRE teams face new layers of complexity involving observability gaps, deployment risks, infrastructure drift, and high customer expectations around uptime.

Common SRE challenges include maintaining end-to-end visibility across distributed services, managing noisy alerts, optimizing performance, enforcing error budgets, tracking SLOs, and reducing operational toil. As architectures evolve, traditional incident management and monitoring approaches are no longer sufficient. Enterprises must adopt a more resilient, automated, and scalable model to handle production workloads.

To meet these demands, leading organizations are implementing best practices such as DevOps automation, continuous reliability, chaos engineering, AIOps-driven observability, service mesh visibility, intelligent alerting, and automated incident response. Tools like XenonStack’s Observability Platform, SRE Platform, and Platform Engineering frameworks enable deeper insights into application health, predictive monitoring, and high-trust reliability automation.

AI-driven and Agentic capabilities further enhance SRE by powering smart diagnostics, automated runbooks, and faster root-cause analysis, thereby reducing Mean Time to Detect (MTTD) and Mean Time to Resolution (MTTR). With advanced approaches to production monitoring, cloud cost optimization, and resilience engineering, enterprises can scale infrastructure confidently while delivering a consistent user experience.
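To make the two metrics concrete, here is a minimal sketch of how MTTD and MTTR could be computed from incident timestamps. The `Incident` record and its field names are assumptions for illustration. Definitions of MTTR also vary between teams; this sketch measures it from the start of the fault to resolution.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Incident:
    """Hypothetical incident record; field names are illustrative."""
    started: datetime    # when the fault began
    detected: datetime   # when monitoring or a person noticed it
    resolved: datetime   # when service was restored


def _mean(durations: list[timedelta]) -> timedelta:
    if not durations:
        raise ValueError("at least one incident is required")
    return sum(durations, timedelta()) / len(durations)


def mttd(incidents: list[Incident]) -> timedelta:
    """Mean time from fault start to detection."""
    return _mean([i.detected - i.started for i in incidents])


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time from fault start to resolution (one common definition)."""
    return _mean([i.resolved - i.started for i in incidents])


if __name__ == "__main__":
    history = [
        Incident(datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 10, 5),
                 datetime(2024, 1, 1, 10, 45)),
        Incident(datetime(2024, 1, 2, 14, 0), datetime(2024, 1, 2, 14, 15),
                 datetime(2024, 1, 2, 15, 15)),
    ]
    print("MTTD:", mttd(history))
    print("MTTR:", mttr(history))
```

Tracking both numbers separately helps show whether time is being lost in noticing a problem or in fixing it.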

Mastering SRE challenges and best practices is crucial for organizations seeking to accelerate innovation, enhance reliability, and achieve true production readiness.

Why Site Reliability Engineering Matters

A site reliability engineer (SRE) is responsible for configuring, maintaining, and ensuring the reliability and availability of complex computing systems. They manage deployments, monitor services, and respond to any issues that may arise.

According to Google's Site Reliability Engineering book, the traditional approach caused gaps and conflicts between developers and sysadmins due to differences in skills. Developers wanted to release new features as frequently as possible, while sysadmins focused on avoiding disruptions and ensuring resiliency.

Defining SRE and Essential Skills

Site reliability engineering (SRE) is a practice that applies both software development skills and mindset to IT operations. SRE utilizes software engineering techniques, including algorithms, data structures, performance optimization, and programming languages, to develop highly reliable web applications.

Site reliability engineering uses these techniques to keep the production system stable and available while new features and operational improvements are introduced. The SRE team, made up of site reliability engineers who take on work traditionally handled by sysadmins, focuses on replacing manual labor with automation and ensuring system availability. Their goal is to improve operational efficiency so that services are reliable, scalable, and resilient to changes and failures.

Key Site Reliability Engineering Skills

The skills required differ from organization to organization. They depend largely on the type of application the organization runs and on how and where it is deployed and monitored. SREs also need a strong focus on application monitoring and diagnostics. Apart from the technical skills specific to an organization's practices, below are some non-technical and fundamental technical skills to look for in a site reliability engineer.

Non-Technical Skills

- Problem-Solving
- Teamwork
- Work well under pressure and solve problems
- Translating the technical into business language
- Have excellent written and verbal communication skills

Fundamental Technical Skills

- Know version control
- Knowledge of Linux (most preferably)
- Automate things over manual work
- CI/CD Knowledge
- Knows how to troubleshoot effectively


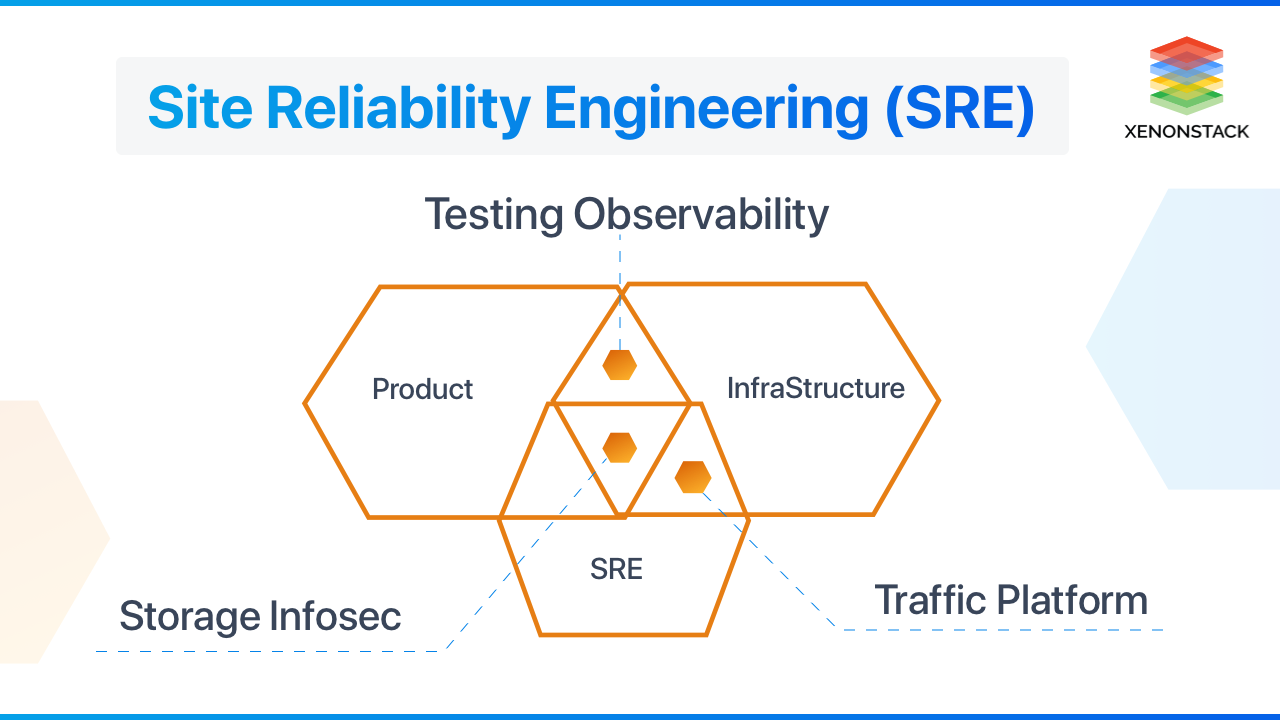

How Site Reliability Engineering Functions

A site reliability team is responsible for availability, performance, effectiveness, emergency response, and service monitoring. In short, site reliability engineering is accountable for everything that keeps services up and reliable for their users. To meet these goals, SREs work according to the following principles, which form the foundation of site reliability engineering:

- Embracing Risk
- Service Level Objectives
- Eliminating Toil
- Monitoring Distributed Systems
- Automation
- Release Engineering
- Simplicity

Service-Level Objective (SLO)

An SLO sets a specific numerical target for the reliability of your service, most commonly its availability. A more reliable service costs more to operate, so the SLO should be set carefully.
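As a rough illustration of what each extra "nine" means in practice, the sketch below converts an availability SLO into the downtime it permits over a window. The function name `allowed_downtime` and the 30-day default window are assumptions made for this example.

```python
from datetime import timedelta


def allowed_downtime(slo: float, window: timedelta = timedelta(days=30)) -> timedelta:
    """Return the downtime an availability SLO permits over a window.

    slo is a fraction, for example 0.999 for "three nines".
    """
    if not 0.0 < slo <= 1.0:
        raise ValueError("slo must be in the interval (0, 1]")
    # The permitted unavailability is the complement of the SLO.
    return window * (1.0 - slo)


if __name__ == "__main__":
    for target in (0.99, 0.999, 0.9999):
        print(f"{target:.2%} over 30 days allows {allowed_downtime(target)}")
```

Each additional nine cuts the permitted downtime by a factor of ten, which is why a tighter target tends to cost more to operate.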

Service-Level Agreement (SLA)

An SLA is an agreement with the users of a service that commits it to being available as expected. The SLOs it contains must be met over a defined period; if the service fails to meet the agreed-upon standards, a fine or refund may apply. Define the SLA's availability SLO carefully, being mindful of which requests count as valid.

Service-Level Indicator (SLI)

An SLI is a measurement of the level of service provided. For availability, it is the observed availability rate, which shows whether the system operated within the defined SLO over a past period. If the SLI falls below the defined SLO, a problem exists and must be addressed: someone needs to make the system more available.
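A minimal sketch of that comparison follows, assuming availability is measured as the share of valid requests served successfully. The function names and the request counts in the usage example are hypothetical.

```python
def availability_sli(good_requests: int, total_requests: int) -> float:
    """Fraction of valid requests that were served successfully."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    if not 0 <= good_requests <= total_requests:
        raise ValueError("good_requests must be between 0 and total_requests")
    return good_requests / total_requests


def meets_slo(sli: float, slo: float) -> bool:
    """True when the measured indicator is at or above the objective."""
    return sli >= slo


if __name__ == "__main__":
    # Hypothetical counts for one measurement window.
    sli = availability_sli(good_requests=999_412, total_requests=1_000_000)
    print(f"SLI = {sli:.4%}, meets 99.9% SLO: {meets_slo(sli, 0.999)}")
```

Which requests count as "valid" is a policy decision, so that filtering is left outside the function.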

Key Principles Behind Site Reliability Engineering

- Recruit Programmers: Hire skilled coders for SRE roles, focusing on automating system growth rather than linearly expanding the engineering team.
- Treat SREs as Developers: SREs and developers come from the same pool and work interchangeably to improve system stability rather than just add functionality.
- Dev Team Involvement: Developers handle about 5% of operations work, staying informed about system changes and taking full-time support responsibility if their features cause instability.
- Limit SRE Operational Load: SREs spend at least 50% of their time automating and improving system reliability, with a cap on the number of issues they can address during a shift.
- On-Call Team Size: On-call teams should have a minimum of 8 engineers per site, managing no more than two incidents per shift to prevent burnout.
- Postmortems for Improvement: Focus on process and technology in postmortems, aiming for continuous improvement to avoid repeating the same issues.
- Service Level Objectives (SLOs): Each service should have defined SLOs and measurable metrics that guide actions and set limits on allowable unavailability.
- Launch Criteria Based on SLO Budgets: Base system changes on the SLO's error budget to ensure stability; avoid introducing changes when nearing the budget limit to maintain service quality and customer satisfaction.
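The last principle can be sketched as a simple launch gate. This is an illustration under stated assumptions, not a prescribed policy: the `ErrorBudget` class, the request-based accounting, and the 90% freeze threshold are all hypothetical choices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorBudget:
    """Hypothetical request-based error budget for one SLO window."""
    slo: float            # target success ratio, e.g. 0.999
    total_requests: int   # valid requests seen in the window
    failed_requests: int  # requests that did not meet the objective

    @property
    def allowed_failures(self) -> float:
        # The budget is the share of requests the SLO permits to fail.
        return self.total_requests * (1.0 - self.slo)

    @property
    def consumed(self) -> float:
        """Fraction of the budget spent; above 1.0 the SLO is breached."""
        if self.allowed_failures == 0:
            return float("inf") if self.failed_requests else 0.0
        return self.failed_requests / self.allowed_failures


def launch_allowed(budget: ErrorBudget, freeze_threshold: float = 0.9) -> bool:
    """Permit launches only while budget consumption is below the threshold."""
    return budget.consumed < freeze_threshold


if __name__ == "__main__":
    healthy = ErrorBudget(slo=0.999, total_requests=1_000_000, failed_requests=400)
    nearly_spent = ErrorBudget(slo=0.999, total_requests=1_000_000, failed_requests=950)
    print(launch_allowed(healthy), launch_allowed(nearly_spent))
```

Freezing slightly before the budget is exhausted leaves headroom for failures unrelated to the launch itself.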

Core Components of Site Reliability Engineering

Site reliability engineers (SREs) collaborate with other engineers, product owners, and customers to define targets and measures. Once targets for a system's uptime and accessibility are set, it is crucial to act on them. Below are some essential aspects of SRE to consider:

- Key Tools and Metrics: Targets are typically defined and tracked through observability, service-level indicators (SLIs), and service-level objectives (SLOs).
- Holistic System Understanding: Because systems are interconnected, an engineer needs a holistic understanding of them.
- Early Detection of Issues: Site reliability engineers are responsible for ensuring the early detection of issues to reduce failure costs.
- Shared Ownership and Team Collaboration: Since SRE aims to resolve issues between groups, both the SRE teams and the development teams are expected to have a holistic view of libraries, front end, back end, storage, and other parts. Shared ownership ensures that no team has exclusive control over specific components.

Steps to Adopt Site Reliability Engineering

Google was the first to embrace site reliability engineering (SRE) culture, but what works for Google may not work for every organization. How an organization adopts site reliability engineering depends on factors such as its size, technology, and culture. Adopting SRE means implementing the principles and practices that Google developed and aligning them with the organization's established methods.

Project Analysis

- Evaluate the current situation of the organization, including its systems and processes.
- Identify the challenges faced by the organization that SRE can address.
- Analyze the capabilities of the existing team and identify any capability gaps.

Hiring the Site Reliability Engineering Team Based on the Analysis

- Based on the analysis, the organization can hire the site reliability engineers it needs to fill key roles.

Recommendations

- Determine which SRE principles are most suitable for the organization's needs.
- Assess whether SRE practices are feasible for the organization.
- Identify which practices can make the most significant difference in the shortest time.
- Plan how best to integrate site reliability engineering into the organization’s culture.

Implementation

- Structure SRE teams according to the organization’s specific needs and scale.
- Identify skill gaps within the team and determine the best ways to address them.
- Bring the existing team up to speed with SRE by providing the necessary training or tools.
- Define what to look for when hiring new site reliability engineers to complement the team.

Challenges in Site Reliability Engineering Explained

Site reliability engineering (SRE) supports the business by automating tasks to eliminate unnecessary work and roles, reducing overall costs through resource optimization, and improving mean time to resolution (MTTR). The key areas that SRE focuses on are:

Reliability

Maintaining a high level of network and application availability, and with it the reliability of the software system.

Monitoring

Implementing performance metrics and establishing benchmarks to monitor the systems.

Alerting

Quickly detecting problems and making sure a closed-loop support process exists to resolve them.

Infrastructure

Understanding the scalability and limitations of cloud and physical infrastructure.

Application Engineering

Understanding all application necessities as well as testing and readiness needs.

Debugging

Understanding the systems, log files, code, and use cases well enough to troubleshoot and debug as required.

Security

Understanding common security problems and tracking and addressing vulnerabilities to make sure the systems are properly secured.

Best Practices Documentation

Prescribing solutions, production support playbooks, and similar documentation.

Best Practice Training

Site reliability engineering best practices are implemented through production readiness reviews, blameless postmortems, technical talks, and tooling.

Other domains overlap with the SRE role, such as DevOps, IT Service Management (ITSM), the Agile Software Development Life Cycle (SDLC), and other organizational frameworks. SRE and DevOps/NetDevOps teams are interdependent. Monitoring solutions that address the needs of both allow information to be shared across teams, enabling collaborative troubleshooting and problem resolution.


Best Practices for Effective Site Reliability Engineering

Site reliability engineering (SRE) focuses on speed, performance, security, capacity planning, software/hardware upgrades, and availability, all of which contribute to reliability, a critical goal for any organization. SREs operate networked services for internal and external users and are ultimately responsible for the health of those services.

Successfully operating a service involves a variety of tasks, such as developing monitoring capabilities, capacity planning, incident response, and ensuring the root causes of outages are addressed. Google has defined more than nine practices for site reliability engineering. Below is a brief categorization of these practices for better understanding:

Controlling Operational Overload

- Hire coders, as the primary duty of an SRE is to write code.
- About 5% of the ops work should go to the development team, along with any overflow beyond the SRE team's capacity.
- The goal is to cap the SRE operational load at 50%, typically aiming for around 30%.
- The on-call team should have at least eight engineers per location, handling a maximum of two events per shift.
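The numeric caps in this list lend themselves to an automated check. The sketch below is one hypothetical way to flag a team that is over its limits; the `TeamPeriod` record, its field names, and the idea of reviewing one reporting period at a time are assumptions for illustration.

```python
from dataclasses import dataclass

OPS_LOAD_CAP = 0.50        # maximum share of SRE time spent on operations
MAX_EVENTS_PER_SHIFT = 2   # maximum events per on-call shift


@dataclass(frozen=True)
class TeamPeriod:
    """Hypothetical summary of one SRE team over a reporting period."""
    total_hours: float
    ops_hours: float              # tickets, pages, and other manual work
    events_per_shift: list[int]   # one entry per on-call shift


def overload_findings(period: TeamPeriod) -> list[str]:
    """Return human-readable reasons the team is over its limits, if any."""
    if period.total_hours <= 0:
        raise ValueError("total_hours must be positive")
    findings = []
    ops_share = period.ops_hours / period.total_hours
    if ops_share > OPS_LOAD_CAP:
        findings.append(f"ops load {ops_share:.0%} exceeds the {OPS_LOAD_CAP:.0%} cap")
    busy_shifts = sum(1 for n in period.events_per_shift if n > MAX_EVENTS_PER_SHIFT)
    if busy_shifts:
        findings.append(f"{busy_shifts} shift(s) exceeded {MAX_EVENTS_PER_SHIFT} events")
    return findings


if __name__ == "__main__":
    quarter = TeamPeriod(total_hours=400, ops_hours=240, events_per_shift=[1, 3, 2, 4])
    for finding in overload_findings(quarter):
        print(finding)
```

A non-empty result is a signal to push work back to the development team or invest in automation, rather than a metric to optimize on its own.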

SLA-Driven Operation Monitoring

- Have an SLA for each service, which may vary depending on the service.
- Measure and report the performance against SLA.
- Use error budgets and gate launches on them.

Handling Incidents and Outages Smoothly

- Conduct postmortems for every incident.
- Ensure postmortems focus on process and technology, not blame, to improve future performance.
- Aim for a maximum of two events per on-call shift.

Management/Budget Policies

- Hire SREs and developers from the same staffing pool and treat them as developers.
- Carefully define SLA, SLI (Service Level Indicators), and SLO (Service Level Objectives) to ensure alignment with business goals.

Additional SRE Best Practices Include:

- Participate in and improve the entire lifecycle of services from inception and design through deployment, operation, and refinement.
- Support services before they go live by engaging in activities such as system design consulting, developing software platforms and frameworks, capacity planning, and launch reviews.
- Maintain services once live by measuring and monitoring availability, latency, and overall system health.
- Scale systems sustainably through mechanisms like automation.
- Evolve systems by advocating for changes that improve reliability and velocity.
- Conduct sustainable incident response and blameless postmortems to drive continuous improvement.

Comparing SRE with DevOps Methodologies

Site reliability engineering (SRE) shares many core concepts with DevOps. Both methodologies depend on a culture of sharing, metrics, and automation. They help organizations achieve the appropriate level of reliability in their systems, services, and products.

Both SRE and DevOps are methodologies addressing organizations' needs for production operation management. However, the differences between the two approaches are significant.

- Site reliability engineering focuses on maintaining a stable production environment while still enabling rapid changes and software updates. Compared with a DevOps team, an SRE team puts more emphasis on stability, though improving performance and operational efficiency is also among its goals.
- DevOps culture is concerned with the "what" that needs to be done, while site reliability engineering focuses on the "how" to achieve it. SRE is about translating theoretical strategies into practical approaches with the right work methods, tools, and automation. It is also about shared responsibility across teams, ensuring everyone is aligned with the same goal and vision.

Whereas DevOps delegates issues to development teams for resolution, SRE proactively identifies problems and resolves some internally.

SRE vs DevOps

| Aspect | Site Reliability Engineering (SRE) | DevOps |
| --- | --- | --- |
| Primary Focus | Focus on creating an ultra-scalable and highly reliable software system | Focus on automated deployment processes in production and staging environments |
| Role Definition | SRE is a specialized engineering role within the organization | DevOps is a cross-functional role bridging development and operations teams |
| Change Management | Encourages quick movement by reducing the cost of failure | Implements gradual change to ensure safe and reliable deployments |
| Incident Management | Focuses on postmortems to analyze and learn from incidents | Focuses on environmental building and ensuring infrastructure reliability |
| Monitoring and Alerts | Prioritizes monitoring, alerting, and managing events to ensure system health | Deals with configuration management and maintaining system consistency |
| Capacity Planning | Involves capacity planning to ensure systems scale efficiently | Implements infrastructure as code to manage infrastructure changes |
| Core Goal | Reliability is the primary focus, ensuring minimal downtime and high system availability | Delivery speed is the primary goal, focusing on efficient and rapid deployments |

Best Site Reliability Engineering Tools

The SRE approach is not set in stone, whether an organization is implementing site reliability engineering services or adopting SRE practices. Organizations need to define their own approach to SRE and choose the right tools accordingly.

Here are some essential SRE tools:

Core Benefits of Site Reliability Engineering

Site reliability engineering (SRE) aims to improve the reliability of high-scale systems through automation and continuous integration and delivery. The primary goal of SRE is to bridge the gap between developer teams and sysadmin teams. When discussing SRE benefits, we typically highlight how it can provide significant advantages to an enterprise.

Meeting Customer Expectations

SRE helps meet customer expectations regarding functionality and useful service life, supported by performance monitoring tools.

Exposure to Staging and Production Systems

By involving all technical teams in staging and production, SRE improves system performance and collaboration.

Risk Mitigation

SRE reduces risks related to tool performance and system health through error budgets and automation.

Improved Reliability and Availability

SRE boosts reliability and availability by reducing failure rates and downtime with capacity planning and monitoring.

Prevention and Quick Recovery

SRE prevents failures and ensures quick recovery using incident response and root cause analysis.

Efficient Production Goal Achievement

SRE helps achieve production goals faster with automation and CI/CD processes.

Enhanced Product Marketing and Guarantees

With SLOs and SLIs, SRE lets teams make measurable commitments about system availability and software reliability, which strengthens product marketing.

How does Runbook Automation help SRE teams?

Runbook Automation helps SRE teams by reducing manual, repetitive tasks (toil) and enabling faster, more consistent incident response. It standardizes operational procedures, minimizes human error, and improves system reliability. Automating common workflows like service restarts or log collection allows SREs to respond to issues more quickly and focus their time on higher-value engineering work.
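As a minimal sketch of the idea, a runbook can be modeled as an ordered list of named steps that run the same way every time and stop at the first failure. Everything here is hypothetical: the `Step` and `Runbook` classes, the dry-run mode, and the placeholder actions, which only return messages and do not touch any real system.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    name: str
    action: Callable[[], str]  # returns a short result message


@dataclass
class Runbook:
    title: str
    steps: list[Step] = field(default_factory=list)

    def run(self, dry_run: bool = True) -> list[str]:
        """Execute steps in order and return a log of what happened."""
        log = []
        for number, step in enumerate(self.steps, start=1):
            if dry_run:
                log.append(f"[dry-run] {number}. {step.name}")
                continue
            try:
                result = step.action()
            except Exception as exc:
                log.append(f"{number}. {step.name}: FAILED ({exc})")
                break  # stop here so a person can take over
            log.append(f"{number}. {step.name}: {result}")
        return log


# Placeholder actions; real ones would call the team's own tooling.
def collect_logs() -> str:
    return "logs collected (placeholder)"


def restart_service() -> str:
    return "restart requested (placeholder)"


if __name__ == "__main__":
    runbook = Runbook("Unresponsive service", [
        Step("Collect logs", collect_logs),
        Step("Restart service", restart_service),
    ])
    for line in runbook.run(dry_run=True):
        print(line)
```

Defaulting to a dry run lets responders review the planned steps before anything is changed, and the returned log doubles as a record for the postmortem.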

Conclusion on Embracing Site Reliability Engineering

Site Reliability Engineering (SRE) requires specialized skills and a sense of trust between teams to succeed. SRE solutions include responsibility for site reliability engineering services and ownership of production-related operations. It is a specific approach focused on improving IT operations. Want to adopt the SRE culture in your project? Train your team, follow the best practices, and trust the process. No system achieves 100% perfection, but SRE will help you improve and get as close to it as possible.

Frequently Asked Questions (FAQs)

Advanced FAQs on Site Reliability Engineering (SRE) Challenges and Best Practices.

What is the biggest scaling challenge for SRE teams today?

Managing reliability across distributed, multi-cloud systems while keeping error budgets intact under rapid release cycles.

How can teams reduce operational toil effectively?

By automating incident workflows, adopting self-healing systems, and shifting operational tasks into code-managed pipelines.

How do SRE teams maintain observability at enterprise scale?

Through unified telemetry pipelines, distributed tracing, and anomaly detection models that operate across heterogeneous systems.

What best practices improve incident response maturity?

Using runbook automation, blameless retrospectives, real-time collaboration tools, and pre-defined playbooks aligned with system risks.
