Generative AI Platform

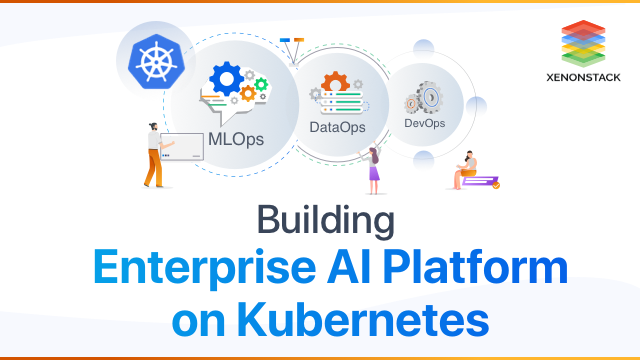

This blog post discusses the practicality of using Kubernetes to address the challenges of adopting Artificial Intelligence. Machine-learning algorithms have progressed, primarily through the development of deep learning and reinforcement-learning techniques based on neural networks. The challenges of adoption include:

- Integration (Intelligent ETL and Workflows)

- Security

- Governance (Data Access Management)

- Storage (I/O processes and cost per operation)

- Productionizing ML/DL models

- Continuous Delivery for ML/DL

Kubernetes has become the de facto standard for managing containerized architectures at cloud-native scale, and it is a natural fit for AI. It gives an organization the much-needed clarity of one standard pipeline and toolkit for orchestrating containers. The goal of Kubernetes for AI is to make scalable models and production deployment come naturally. No matter how experienced your data scientists are, without the support of infrastructure engineers things get complicated when you try to run at large scale. The reason is the gap between working locally and running in production. The difference lies not only in the tools but also in the way components are deployed nowadays. With the rise of containers as the first choice for deployments, it is getting even harder for developers to understand how things work in production.

The requirement for Versatility and Seamless Integration for Enterprise AI Platform

The versatility in how models are developed, and in the architecture behind them, makes scaling a challenging job because everyone does everything differently. Models are not interoperable: numerous libraries, in different versions, are used to develop them. There is a need for one standard approach that is:

- Framework Agnostic

- Language Agnostic

- Infrastructure Agnostic

- Library Agnostic

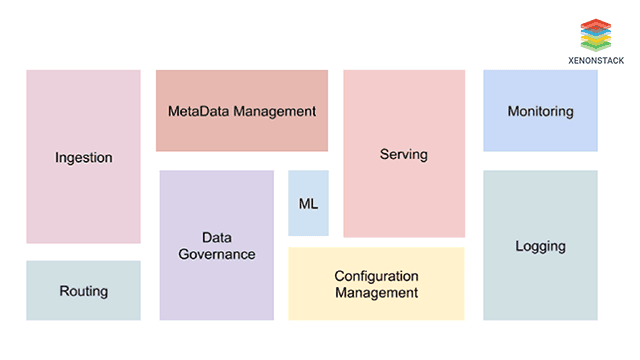

Enterprise AI Infrastructure Platform Capabilities

The capabilities of an enterprise AI infrastructure platform are described below:

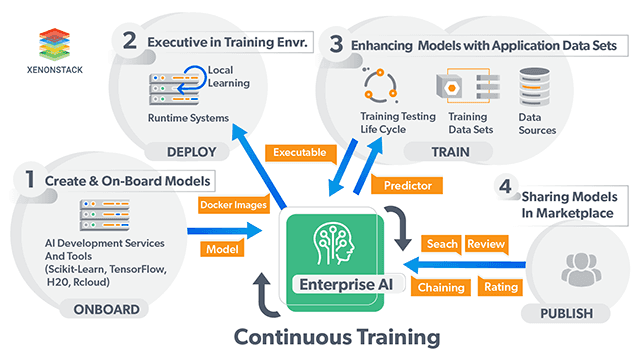

Software Engineering Practices for AI

Software engineering disciplines such as Test-Driven Development (TDD), continuous integration, rollback and recovery, and change control are being incorporated into advanced machine learning practices. It is not sufficient for a specialist to develop a Jupyter notebook and toss it over the fence to another team to make operational. The same end-to-end DevOps practices found today in most high-grade engineering organizations are also going to be needed in machine learning efforts. Many fascinating challenges remain. An enterprise strategy should be general-purpose, support a variety of model and data types, and stay flexible enough to adopt innovations from the machine learning and deep learning community.

Challenges of Storage and Disk/IO

Data-parallel compute workloads need parallel storage. The main problem in running deep learning models, whether in the cloud or on-premises, is the set of bottlenecks from the edge to the core to the cloud, which include:

- Bottlenecks that slow down data ingest.

- Bottlenecks on-premises due to outdated technology.

- Bottlenecks in the cloud due to virtualized storage.

Health Checks & Resiliency

Kubernetes comes with features that ensure applications running on the infrastructure can handle failovers. These features are available on any public cloud, but having them in Kubernetes makes your on-prem environment as resilient as the cloud.

Framework Interoperability

To make models framework, language, and library agnostic, there is ONNX, a community project created by Facebook and Microsoft and backed by AWS. Such a framework is needed because a single ML application may be deployed on various platforms. ONNX and MLeap are frameworks that provide this much-needed interoperability and shared optimization. They achieve it by defining a standard format for models. The artifacts of these models are optimized and can run very efficiently on a serverless platform on top of Kubernetes.

Build Your Own DataOps Platform using Kubernetes

With enterprises becoming protective of their data and security being paramount, becoming an AI-powered enterprise requires a custom-made data platform. The other reason is that every enterprise has its own kind of data and its own needs from it. The number of teams involved makes it challenging to have security and compliance in place while at the same time giving teams the freedom to run experiments and discover data. An enterprise data strategy should spell out how data will be securely shared across various teams.

Big data applications are evolving and have become friendlier to Kubernetes. With the rise of Kubernetes Operators, running complex stateful workloads on Kubernetes has become much simpler. Data governance is required, and the strategy should explain how it benefits individual teams as well as the organization.

Data Lineage for AI Platform

Data lineage means capturing all events happening across the data and AI platform, from infrastructure to application. A data lineage service should be capable of capturing events from ETL jobs, query engines, and data processing jobs. Data lineage should also be offered as a service so that any other service that needs lineage can integrate it. The ELK Stack and Prometheus are capable of providing lineage for the Kubernetes cluster and the workloads running on top of it.

Data Catalog with Version Control

With multi-cloud and cloud bursting in mind, the stored data should have version control. Version control for data should be as rigorous as version control for software code. Data version control should be built on top of object storage that can be integrated with existing services. MinIO is such a service: it is compatible with S3 and provides the same APIs. For machine learning data, DVC allows storing and versioning source data files, ML models, directories, and intermediate results with Git, without checking the file contents into Git. Metacat should be integrated with on-premises data and external sources.
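The idea of versioning data without putting the data itself into Git can be illustrated with a small content-addressed sketch. This is not DVC's actual file format or API; the `ObjectStore` class is a hypothetical in-memory stand-in for an S3-compatible bucket, and the pointer layout and the choice of SHA-256 are assumptions made for illustration.

```python
import hashlib
import json


class ObjectStore:
    """In-memory stand-in for an S3-compatible bucket (hypothetical, for illustration)."""

    def __init__(self):
        self._blobs = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def add_version(store: ObjectStore, path: str, data: bytes) -> str:
    """Store data under its content hash and return a small JSON pointer.

    Only the pointer would be committed to Git; the data stays in object storage.
    """
    digest = hashlib.sha256(data).hexdigest()
    store.put(digest, data)
    return json.dumps({"path": path, "sha256": digest, "size": len(data)}, sort_keys=True)


def checkout(store: ObjectStore, pointer: str) -> bytes:
    """Resolve a pointer back to the exact bytes it was created from."""
    record = json.loads(pointer)
    data = store.get(record["sha256"])
    if hashlib.sha256(data).hexdigest() != record["sha256"]:
        raise ValueError("stored object does not match pointer hash")
    return data


store = ObjectStore()
v1 = add_version(store, "data/train.csv", b"id,label\n1,0\n")
v2 = add_version(store, "data/train.csv", b"id,label\n1,0\n2,1\n")
```

Because each pointer is a few bytes of text, Git history stays small while every commit still identifies the exact dataset it was trained on.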

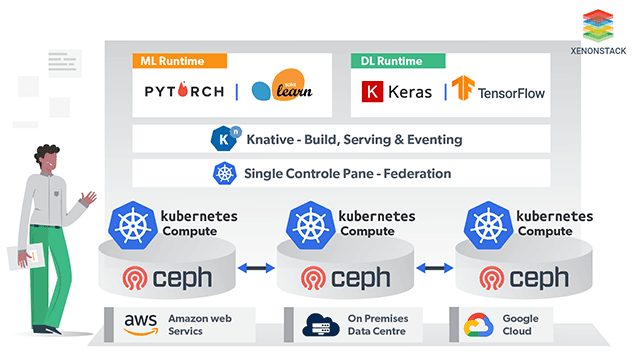

Multi-Cloud AI Infrastructure

This infrastructure should meet the following high-level requirements:

- Massive compute power to train models in less time

- High-performance storage to handle large datasets

- Seamlessly and independently scale compute and storage

- Handle varying types of data traffic

- Optimize costs

Federated Kubernetes on Multi-Cloud

Models as Cloud Native Containers

Microservices in general are very beneficial when a complex stack has workloads from a variety of business domains running and talking to each other in a service mesh. They also suit the model development and operations lifecycle very well, and the benefits are immense.

Portability

With Kubernetes, the pain of configuration management eases. Once the ML system is containerized, the infrastructure can be written as code that runs on Kubernetes. Helm can be used to create curated applications and version-controlled infrastructure code.

Agile Training at Scale

Kubernetes provides agile training at large scale: training jobs run as containers that can be scheduled on a variety of node types, including nodes with GPU acceleration. Kubernetes manages the scheduling of such workloads.

Experimentation

The dynamic nature of Artificial Intelligence requires a lot of research. If the cost of running experiments is too high, development lags. Running Kubernetes on commodity hardware on-premises keeps the cost of these experiments very low.

Cloud Bursting for AI Platform

Cloud bursting is a general application deployment model, but it is very relevant to AI workloads, which require a lot of experimentation. In cloud bursting, a workload deployed in an on-premises data center scales into a cloud whenever there is a spike. To implement cloud bursting, Kubernetes with Federation can be set up, with the AI workloads containerized in a cloud-agnostic architecture. The lift and shift from on-premises can be triggered by custom policies based on cost or performance.

Leverage your On-Prem for Experimentation

Customized Hardware

Customized hardware is needed for running deep learning and machine learning systems in production because, on virtualized storage or compute, the performance degradation can be enormous, and essential anomalies may be missed because of it. Some use cases require ultra-fast, low-latency hardware. Traditionally, there was a trade-off between performance and the features that came with AWS or other clouds. Unfortunately, OpenStack was not able to compete on those terms, but with Kubernetes on-premises we do not miss add-on functionality such as RBAC, network policies, or other enterprise-grade features. Kubernetes can be installed on highly customized, tailor-made hardware to run specific workloads.

Serverless For AI Platform on Kubernetes using Knative

Knative on Kubernetes aims to automate all the complicated parts of the build, train, and deploy pipeline. It is an end-to-end framework for promoting an eventing ecosystem in the build pipeline. Knative Eventing is the framework that pulls external events from various sources such as GitHub, GCP PubSub, and Kubernetes Events. Once the events are pulled, they are dispatched to event sinks. A commonly used sink is a Knative Service, an internal endpoint running HTTP(S) services hosted by a serving function. Knative currently supports the following data sources for its eventing:

- AWS SQS

- Cron Job

- GCP PubSub

- GitHub

- GitLab

- Google Cloud Scheduler

- Google Cloud Storage

- Kubernetes
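The source-to-sink flow described above can be sketched in plain Python. This is only an illustration of the dispatch pattern, not the Knative API: the `Sink` class, the handler functions, and the event type names such as `example.git.push` are all hypothetical, and a real sink would receive events over HTTP rather than through a method call.

```python
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], str]


class Sink:
    """Receives events and routes each one to the handler registered for its type."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}
        self.unhandled: List[Event] = []

    def on(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type] = handler

    def receive(self, event: Event) -> str:
        handler = self._handlers.get(event["type"])
        if handler is None:
            # Keep unknown events so they can be inspected rather than silently dropped.
            self.unhandled.append(event)
            return "ignored"
        return handler(event)


def start_build(event: Event) -> str:
    return f"build started for {event['data']['commit']}"


def start_retraining(event: Event) -> str:
    return f"retraining scheduled by {event['source']}"


sink = Sink()
sink.on("example.git.push", start_build)        # hypothetical event type
sink.on("example.schedule.tick", start_retraining)  # hypothetical event type

result = sink.receive(
    {"type": "example.git.push", "source": "github", "data": {"commit": "abc123"}}
)
```

Keeping the routing table separate from the handlers means a new event source only needs a new registration, not a change to the pipeline steps themselves.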

Using Functions to Deploy Models

Functions are useful for dealing with independent events without needing to maintain a complex unified infrastructure. This allows you to focus on a single task that can be executed and scaled automatically and independently. Knative can be used to deploy simple models. The PubSub eventing source can use APIs to pull events periodically, post the events to an event sink, and delete them.
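A simple model served as a function might look roughly like the sketch below. The weights, feature names, and request shape are hypothetical; a real function would load a trained artifact and sit behind an HTTP server inside the container. What matters here is that the handler is stateless, which is what lets the platform scale it independently.

```python
import json

# Hypothetical weights for a tiny linear model; a real function would load a trained artifact.
WEIGHTS = {"age": 0.5, "income": 0.25}
BIAS = -1.0


def predict(features: dict) -> float:
    """Score one example; missing features count as 0."""
    return BIAS + sum(WEIGHTS[name] * float(features.get(name, 0.0)) for name in WEIGHTS)


def handle(request_body: str) -> str:
    """Stateless request handler: JSON in, JSON out."""
    try:
        features = json.loads(request_body)["features"]
        score = predict(features)
    except (ValueError, KeyError, TypeError, AttributeError):
        return json.dumps({"error": "expected JSON with a 'features' object"})
    return json.dumps({"score": score})


response = handle('{"features": {"age": 4, "income": 8}}')
```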

AI Assembly Lines with Knative

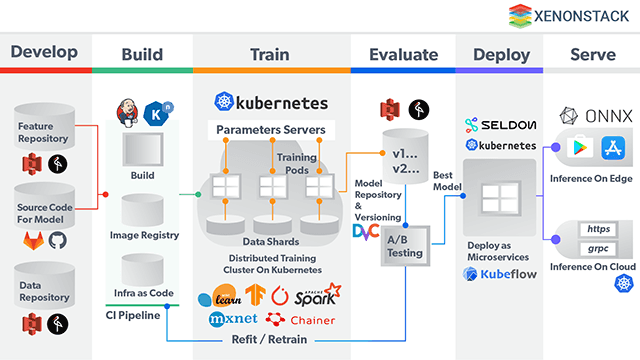

The pipeline and workflow for models have three main components, and all three can be achieved with the help of Kubernetes. Knative supports the full assembly line from build to deploy, embracing current CI tools and making them cloud and framework agnostic. It also routes and manages traffic with blue/green or canary deployments and binds running services to eventing ecosystems.

Model Building

Model development is part of this stage. Data scientists must have the liberty to choose their own workbench for building the model. With Kubernetes, workbenches can be provisioned for data scientists on demand. Workbenches such as Jupyter, Zeppelin, or RStudio can be installed on the Kubernetes cluster rather than on a laptop. This makes ad hoc experimentation much faster and can even support GPU acceleration during model building.

Model Training

Model training is a crucial stage in a project and the most time-consuming. With the help of Kubernetes, frameworks like TensorFlow provide significant support for running distributed training jobs as containers, which saves time and speeds up training. Online training can also be supported efficiently in this architecture.

Model Serving

For serving the model, Kubernetes makes it possible to introduce the following essential features to ML/DL serving:

- Logging

- Distributed Tracing

- Rate Limiting

- Canary Updates
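Of the features listed above, canary updates are the easiest to illustrate in a few lines. On Kubernetes the traffic split is normally handled by the ingress or service mesh rather than by application code, so the function below is only a sketch of the underlying idea; the name `choose_version` and the hash-based bucketing are assumptions made for illustration.

```python
import hashlib


def choose_version(request_id: str, canary_percent: int) -> str:
    """Route a fixed share of requests to the canary, deterministically per request id."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # value in 0..99
    return "canary" if bucket < canary_percent else "stable"


# Send roughly 10% of traffic to the new model version.
routed = [choose_version(f"req-{i}", 10) for i in range(1000)]
canary_share = routed.count("canary") / len(routed)
```

Hashing the request id rather than drawing a random number keeps the routing repeatable: the same request always reaches the same model version, which makes comparing the two versions easier.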

GitOps for ML and DL

The GitOps flow is Kubernetes-friendly for continuously deploying microservices. For machine learning, the same GitOps flow can provide model versioning. Models are stored as Docker images in an image registry, which holds tagged images for every code change and checkpoint. The image registry thus acts as an artifact repository with all Docker images version controlled, and models can be version controlled in the same way through the GitOps approach.
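One way to picture this is a tag that combines the code commit with the training checkpoint, plus a small desired-state record kept in Git. The sketch below is an assumption about how such a scheme could look, not a prescribed convention: the tag format, the `ModelVersion` class, the model name, and the registry host `registry.example.com` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    name: str
    git_commit: str  # commit of the training code
    checkpoint: int  # training checkpoint number

    def image_ref(self, registry: str) -> str:
        """Build an image reference whose tag encodes both code and checkpoint."""
        short = self.git_commit[:7]
        return f"{registry}/{self.name}:{short}-ckpt{self.checkpoint}"


def desired_state(version: ModelVersion, registry: str, replicas: int = 2) -> dict:
    """The record that would live in Git; a GitOps agent reconciles the cluster to match it."""
    return {"model": version.name, "image": version.image_ref(registry), "replicas": replicas}


v = ModelVersion("churn-model", "9fceb02d0ae598e95dc970b74767f19372d61af8", 12)
state = desired_state(v, "registry.example.com/ml")
```

Rolling back a model then becomes a Git revert of this record, and the registry still holds the older image under its original tag.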

Moving Towards NoOps with the help of AIOps and Cognitive Ops

On the way to NoOps, AIOps can help achieve the following goals:

- Reduced Effort - Intelligent discovery of potential issues by cognitive solutions minimizes the effort and time required by IT to make basic predictions.

- Reactive System - With access to predictive data on-demand, Infrastructure Engineers can quickly determine the best course of action before serious network or hardware consequences occur.

- Proactive Steps - Access to both end-user experience and underlying issue data helps achieve the “Holy Grail” of dynamic IT - Problems solved before they impact the end-user.

- NoOps - The next step, going beyond technology outcomes, is to assess the business impact of networking and hardware failures. It solidifies the place of technology with decision-makers and provides them with relevant, actionable data to inform ongoing strategy.

Building Platform for Standardizing and Scaling AI Workflows

Akira.AI is working on building a microservices-based, event-driven AI platform that is capable of:

- Seamless integration and workflow management

- Versatile - Any Framework, Multi-Cloud

- Scalable

- Security

- Data Governance

A Holistic Strategy

Containerization and microservices architecture are essential pieces of the Artificial Intelligence workflow, from the rapid development of models to serving them in production. Kubernetes is a great fit for this job because it solves many challenges that a data scientist should not have to worry about. It brings the much-needed abstraction layers that remove tedious, repetitive configuration and infrastructure tasks.

- Explore here about Observability for Kubernetes and Serverless

- Read more about Artificial Intelligence for IT Operations