What is Apache Arrow?

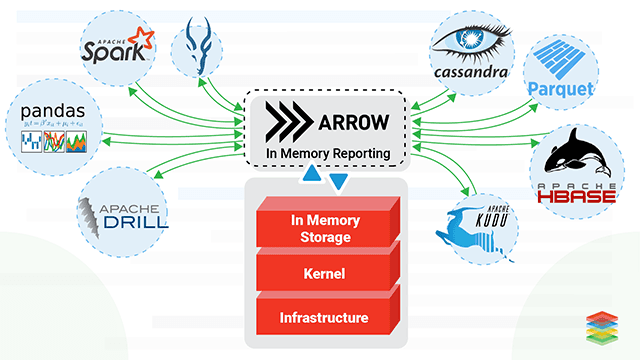

According to the official website, "Apache Arrow is a cross-language development platform for in-memory data that specifies a standardized language-independent columnar memory format for flat and hierarchical data that is organized for efficient analytic operations on modern hardware. It also provides inter-process communication, zero-copy streaming messaging, and computational libraries. C, C++, Java, JavaScript, Python, and Ruby are the languages currently supported."


This project is a move to standardize in-memory data representation across libraries, systems, languages, and frameworks. Before Apache Arrow, every library and language had its own way of representing data in native in-memory data structures. For example, consider the Python world:

| Library | In-memory representation |
| --- | --- |
| pandas | pandas DataFrame |
| NumPy | NumPy arrays |
| PySpark | Spark RDD / DataFrame / Dataset |

With Apache Arrow, the same libraries can share one representation:

| Library | In-memory representation |
| --- | --- |
| pandas | Arrow format |
| NumPy | Arrow format |
| PySpark | Arrow format |

A shared format simplifies system architectures, improves interoperability, and reduces ecosystem fragmentation. Across programming languages, for example, a dataset preprocessed in R can be passed to Python for machine learning with little or no serialization and deserialization overhead.

How to adopt Apache Arrow?

Extend pandas using Apache Arrow

pandas is written largely in Python, whereas the Arrow library it uses is written in C++. Using Apache Arrow with pandas addresses some of the problems that occur with pandas, such as speed, and takes advantage of modern processor architectures.

Improve Python and Spark performance and interoperability with Apache Arrow

Apache Spark is a widely used big data processing framework that has also added support for Apache Arrow. Spark uses Apache Arrow to provide its Pandas UDF functionality.

Vectorized processing of Apache Arrow data using the LLVM compiler

Gandiva is an open-source project backed by Dremio: a toolset for compiling and executing queries on Apache Arrow data. LLVM is a compiler infrastructure that supports various language front ends and back ends. Its central idea is to convert code (from any supported language) into an intermediate representation (IR), which can then be optimized to use modern processor features such as parallel processing and SIMD.


Why does Apache Arrow matter?

At the time of writing, the latest release is 0.11.0 (8 October 2018). Apache Arrow has a bright future ahead and occupies a distinctive position in its field. It can be coupled with Parquet and ORC, which makes for a strong big data ecosystem. Adoption of Apache Arrow has been rising since its first release, and Spark has adopted it. Companies have also welcomed Apache Arrow, with adoption at organizations such as Cloudera and Dremio.

Developers from more than 11 major big data open source projects are involved in the project. The team is working on Arrow Flight, a messaging framework based on gRPC and optimized for transferring data over the wire, which makes moving data in Arrow format from one system to another even easier.

Integration with machine learning frameworks such as TensorFlow and PyTorch would be significant progress and could further reduce training and prediction times. It comes at a good time: with new-generation GPUs in use, it could help end the bottleneck of data read speeds.

How does Apache Arrow work?

Its main features are a columnar in-memory layout and a rich data model. The columnar layout allows O(1) random access, is highly cache-efficient for OLAP (analytical) workloads, and benefits from modern processor optimizations such as SIMD. The data model supports simple and complex data types, covering both flat data structures and real-world JSON-like nested workloads.

Columnar data has become the de facto format for building high-performance query engines that run analytical workloads. Arrow is an in-memory columnar data format that defines canonical in-memory representations for both flat and nested data structures.

What are Apache Arrow's best practices?

Use the Arrow columnar format for in-memory representation and Parquet for on-disk representation to make systems faster. Any value of interest can be located in constant O(1) time by “walking” the variable-length dimension offsets. The data itself is stored contiguously in memory, which makes scanning the values for analytics highly cache-efficient.
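The offset idea can be sketched in plain Python, without any Arrow library. This is a simplified illustration of the concept, not Arrow's actual implementation: the helper names `build_string_column` and `get_value` are invented for the example. All values live in one contiguous buffer, and an offsets array records where each value starts and ends.

```python
from array import array


def build_string_column(values):
    """Pack strings into one contiguous bytes buffer plus an offsets array."""
    data = bytearray()
    offsets = array("i", [0])  # offsets[i] is where value i starts
    for value in values:
        data.extend(value.encode("utf-8"))
        offsets.append(len(data))  # end of value i, start of value i + 1
    return bytes(data), offsets


def get_value(data, offsets, i):
    """Fetch value i with two offset lookups, independent of the row count."""
    return data[offsets[i]:offsets[i + 1]].decode("utf-8")


data, offsets = build_string_column(["arrow", "", "parquet", "orc"])
print(list(offsets))
print(get_value(data, offsets, 2))
```

Because the lookup only reads two neighboring offsets, its cost does not grow with the number of rows, and a full scan walks the buffer sequentially.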


What are the benefits of Apache Arrow?

- It provides a common data access layer for all applications.

- It supports more native data types than pandas, such as date, nullable int, and list. It also offers highly efficient I/O.

- Zero-copy data sharing with other ecosystems such as Java and R.

- Optimized for data locality, SIMD, and parallel processing.

- Well suited to SIMD (single instruction, multiple data) vectorized processing.

- Accommodates both random access and scan workloads.

- Low overhead for streaming messaging and RPC.

- "Fully shredded" columnar format that supports flat and nested schemas.

- GPU support as well.

Apache Arrow is used as a run-time in-memory format for analytical query engines. It enables zero-copy interchange (no serialization or deserialization) via shared memory. Large datasets can be sent over the network using Arrow Flight. It also serves as the basis for file formats that allow zero-copy random access to on-disk data, for example Feather files. It is used in the Ray ML framework from Berkeley RISELab.


