What is Service Mesh?

A Service Mesh is an infrastructure layer atop all services that handles communication between them. It enables reliable delivery of requests (packets, in TCP/IP terms) from one service to another. In practice, a Service Mesh is implemented by deploying a proxy alongside each service that is part of the mesh. The application does not need to be aware of the proxy, and no changes are required in application code. A Service Mesh thus provides a dedicated infrastructure layer atop the application. It also adds visibility: administrators and developers can see which applications are communicating healthily and which are dropping or rejecting requests.

Why Service Mesh Matters?

In the modern Cloud-Native world, an application might consist of hundreds of services, deployed dynamically through a CI/CD pipeline. Service communication in such an environment is incredibly complex. A Service Mesh helps in the following ways:

- It allows management of all services as they grow in number and size.

- It provides efficient communication between them.

- It is capable of handling network failures.

- It abstracts the mechanics of reliably delivering requests/responses between any number of services.

- It provides end-to-end performance and reliability.

- It provides a uniform, application-wide point for introducing visibility and control into the application runtime.

- It provides telemetry information from the proxy containers.
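
As one illustration of handling network failures on behalf of the application, a proxy might retry a failed request with exponential backoff. The following is a minimal sketch under stated assumptions, not how any particular mesh implements retries: `call_with_retries`, its parameters, and the choice of `ConnectionError` as the retryable failure are all hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    send: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call send(), retrying on ConnectionError with exponential backoff.

    Hypothetical helper for illustration only.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return send()
        except ConnectionError:
            # Give up after the last attempt and surface the error.
            if attempt == attempts - 1:
                raise
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so that the backoff schedule can be observed without actually waiting. The application code that calls a service stays free of this logic when the proxy performs it.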

Service Mesh Architecture

By default, Microservices communicate with one another directly. There can be any number of Microservices, written in any language. Consumers call the REST interfaces exposed by these Microservices. An API gateway is added in front of the Microservices to handle all requests from consumers and to add its own business logic.

Proxy components

A lightweight proxy such as Envoy is deployed alongside the application. All applications communicate through this proxy; there is no direct app-to-app communication. These proxies are highly intelligent and provide authentication, authorization, and telemetry data (sent to a monitoring system such as Prometheus).

- Applications and proxies are deployed independently.

- Each application and proxy is deployed, updated, and managed separately.

- The mesh is split into a Control Plane and a Data Plane.
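
The division of labour between application and proxy can be sketched in a few lines. This is an in-process illustration only: real proxies such as Envoy operate at the network level, and the names `SidecarProxy`, `orders_app`, and the `caller` field are assumptions made for the example.

```python
from collections import Counter
from typing import Callable, Dict, Set

# Hypothetical request shape for illustration.
Request = Dict[str, str]
Handler = Callable[[Request], str]


class SidecarProxy:
    """Wraps an application handler and adds an access check and telemetry."""

    def __init__(self, app: Handler, allowed_callers: Set[str]) -> None:
        self.app = app
        self.allowed_callers = allowed_callers
        self.metrics: Counter = Counter()

    def handle(self, request: Request) -> str:
        self.metrics["requests_total"] += 1
        # Authorization happens in the proxy, not in the application.
        if request.get("caller") not in self.allowed_callers:
            self.metrics["requests_denied"] += 1
            return "403 Forbidden"
        try:
            response = self.app(request)
        except Exception:
            # Application failures are counted and turned into an error reply.
            self.metrics["requests_failed"] += 1
            return "503 Service Unavailable"
        self.metrics["requests_ok"] += 1
        return response


def orders_app(request: Request) -> str:
    # The application contains only business logic.
    return f"200 OK: order {request['order_id']}"


proxy = SidecarProxy(orders_app, allowed_callers={"checkout"})
```

Calling `proxy.handle({"caller": "checkout", "order_id": "42"})` reaches the application, while a request from an unlisted caller is rejected by the proxy. The counters in `proxy.metrics` stand in for the telemetry a monitoring system would scrape.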

Domain/Business Logic and Network Functions

Domain logic implements how computations are performed and how integration logic is applied between the various Microservices. Network functions are responsible for communication between services. They have their own mechanisms, such as service calls, response rendering, the protocol used for invocation, and service discovery.

Service discovery

Service discovery is an important part of the puzzle. It is also very complex in a Cloud-Native Microservices application, because it must be done dynamically whenever the node or container where a service is running changes. The cluster where a service is running might be auto-scaling (new nodes added with random IPs) or load balanced. Dynamic discovery of services therefore needs to be enabled, so that requests are automatically mapped to the new, changing IPs.

Service meshes provide server-side service discovery. The client makes a request to a service via a load balancer (LB). The load balancer queries the service registry and routes the request to a service instance based on an algorithm. Service instances are registered and deregistered dynamically with the service registry. Examples:

- AWS ELB - Load balances traffic from the internet as well as traffic internal to a VPC. It has no separate service registry.

- Kubernetes - A proxy runs on each node in the cluster and acts as a load balancer that performs the discovery. To request a service, a client sends a request to a host's IP address and the Service's node port. The proxy forwards the request to a service instance in the cluster.
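
The server-side discovery flow described above can be sketched as a registry plus a load balancer. This is a minimal sketch, assuming round-robin as the routing algorithm (the text does not name one); `ServiceRegistry`, `LoadBalancer`, and the addresses are hypothetical.

```python
from typing import Dict, List


class ServiceRegistry:
    """Tracks which instances currently serve each service."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}

    def register(self, service: str, address: str) -> None:
        instances = self._instances.setdefault(service, [])
        if address not in instances:
            instances.append(address)

    def deregister(self, service: str, address: str) -> None:
        instances = self._instances.get(service, [])
        if address in instances:
            instances.remove(address)

    def lookup(self, service: str) -> List[str]:
        # Return a copy so callers cannot mutate the registry.
        return list(self._instances.get(service, []))


class LoadBalancer:
    """Routes each request to an instance found in the registry."""

    def __init__(self, registry: ServiceRegistry) -> None:
        self.registry = registry
        self._next: Dict[str, int] = {}

    def route(self, service: str) -> str:
        instances = self.registry.lookup(service)
        if not instances:
            raise LookupError(f"no instance registered for {service!r}")
        # Round-robin: pick the next index, wrapping around the current list.
        index = self._next.get(service, 0) % len(instances)
        self._next[service] = index + 1
        return instances[index]
```

Because the load balancer queries the registry on every request, instances that register or deregister (for example during auto-scaling) are picked up without any change on the client side.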

How Service Mesh works?

A Service Mesh provides a collection of lightweight proxies that run alongside the application containers in a Kubernetes pod. Each proxy acts as a gateway for the interactions that occur between containers. The proxy forwards each request across the Service Mesh, load balancing it to the appropriate downstream service container, which serves the request. The controller in the control plane orchestrates the connections between proxies. The control plane knows about each request and response, even though application traffic flows directly between proxies. The controller provides access control policies and collects metrics from the containers for telemetry and observation. The controller integrates tightly with Kubernetes, an open-source system for automating the deployment and orchestration of containerized applications.

Let's understand three approaches to Service Mesh Architecture:

- One service proxy on every node - Each node in the cluster has its own service proxy. Application instances on the node access the local service proxy. Example: kube-proxy, which runs on each node in the cluster.

- One service proxy per application - Every application has a service proxy. Application instances access their application's service proxy.

- One service proxy per application replica/instance - Every application instance has its own "sidecar" proxy. This is the default in popular Service Meshes such as Linkerd and Istio.

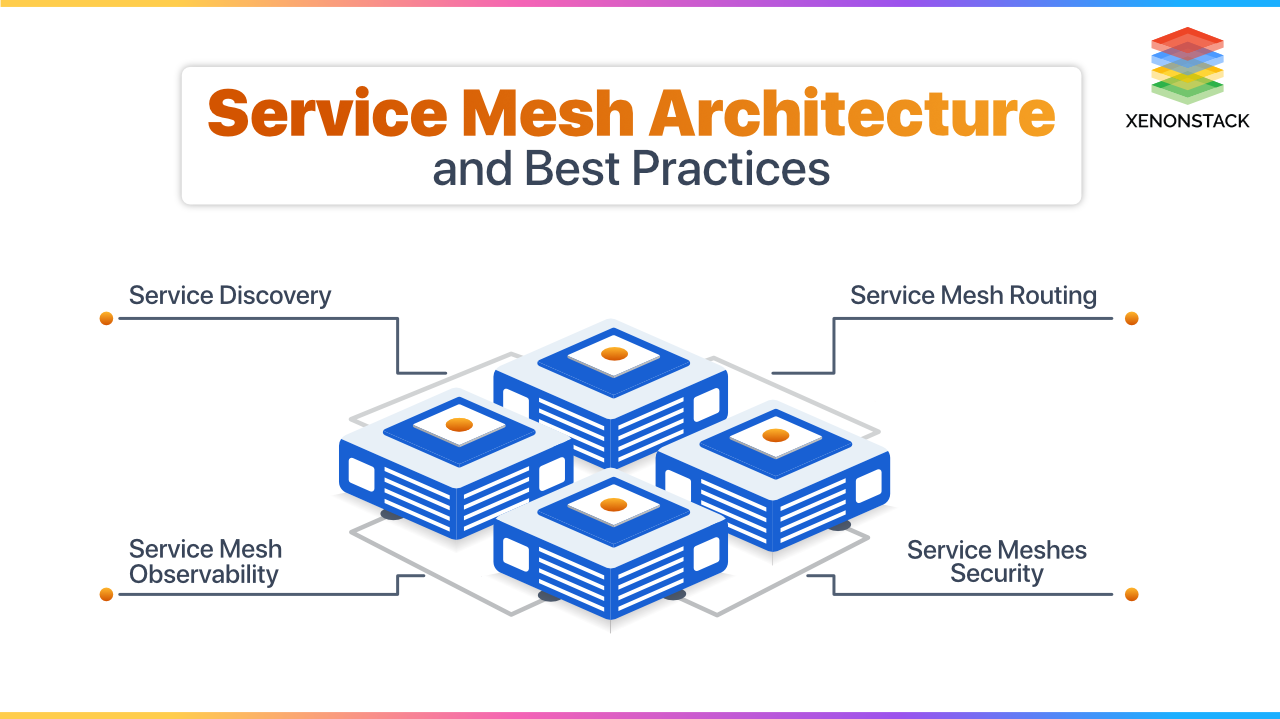
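
The split between control plane and data plane in the sidecar model can be sketched as follows. This is a simplified illustration, not the design of Istio or Linkerd: `ControlPlane`, `MeshProxy`, and their methods are hypothetical, and configuration is pushed by direct method calls rather than over a network API.

```python
from collections import Counter
from typing import Dict, List


class MeshProxy:
    """Data plane: one proxy per application instance (sidecar model)."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self.routes: Dict[str, List[str]] = {}
        self.sent: Counter = Counter()

    def update_routes(self, routes: Dict[str, List[str]]) -> None:
        # Keep a private copy of the configuration pushed by the control plane.
        self.routes = {svc: list(eps) for svc, eps in routes.items()}

    def forward(self, service: str) -> str:
        endpoints = self.routes.get(service)
        if not endpoints:
            raise LookupError(f"no endpoints known for {service!r}")
        # Traffic goes straight to the chosen endpoint, not via the controller.
        target = endpoints[self.sent[service] % len(endpoints)]
        self.sent[service] += 1
        return target


class ControlPlane:
    """Control plane: distributes configuration and collects metrics."""

    def __init__(self) -> None:
        self.routes: Dict[str, List[str]] = {}
        self.proxies: List[MeshProxy] = []

    def attach(self, proxy: MeshProxy) -> None:
        self.proxies.append(proxy)
        proxy.update_routes(self.routes)

    def set_endpoints(self, service: str, endpoints: List[str]) -> None:
        self.routes[service] = list(endpoints)
        # Push the new configuration to every proxy in the mesh.
        for proxy in self.proxies:
            proxy.update_routes(self.routes)

    def collect_metrics(self) -> Dict[str, Counter]:
        return {proxy.owner: Counter(proxy.sent) for proxy in self.proxies}
```

Each proxy makes its own forwarding decision from the routes it was given, so the controller never sits in the request path. It learns about traffic only through the metrics it collects from the proxies.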

Service Discovery

Proxies provide the route for communication between Microservices/applications. Discovery happens dynamically as new replicas are added or removed.

Service Mesh Routing

The lightweight proxies of a Service Mesh have built-in smart routing mechanisms that help choose the best route for each request. Routing is done dynamically between services.

Service Mesh Observability

Modern Service Meshes have components deployed in the control plane that help with logging, tracing request/response calls between services, monitoring, and alerting. Failure patterns can be detected through dashboards.

Service Mesh Security

Service Meshes provide authentication, authorization, and encryption of communication between services. In Istio, service-to-service communication through the proxy is allowed by default when no authorization policy is applied; authorization policies restrict it, while Destination Rules and Virtual Services configure how traffic is routed. In Linkerd, service-to-service communication through the proxy is also enabled by default.
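
The difference between a mesh that permits traffic by default and one that blocks it until explicitly allowed can be sketched with a small policy model. `AccessPolicy` and the service names are hypothetical and do not correspond to the configuration API of Istio or Linkerd.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class AccessPolicy:
    """Hypothetical policy model for illustration only."""

    default_allow: bool
    # Explicit (source, destination) pairs that are always permitted.
    allowed: Set[Tuple[str, str]] = field(default_factory=set)

    def allow(self, source: str, destination: str) -> None:
        self.allowed.add((source, destination))

    def is_allowed(self, source: str, destination: str) -> bool:
        # Default-allow permits everything; default-deny needs an explicit rule.
        return self.default_allow or (source, destination) in self.allowed


open_mesh = AccessPolicy(default_allow=True)
locked_mesh = AccessPolicy(default_allow=False)
locked_mesh.allow("checkout", "orders")
```

With `locked_mesh`, only `checkout` may call `orders`, and every other pair is rejected until a rule is added. With `open_mesh`, any service may call any other.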

What are the benefits of a Service Mesh?

- Management of all services.

- Adds transparency to communication between services.

- Provides an abstraction layer for network functions, which are handled by the proxy rather than embedded in application code.

- Supports an evolutionary architecture and makes bottlenecks easier to find.

- Components are deployed with sidecar proxies.

- Features are released and updated independently.

- A dedicated component does the routing.

- Easier troubleshooting: because all requests and responses are visible, it is easy to track down failing calls and fix the service or replace it with a new instance.

- Enables resiliency, which enhances network robustness.

- Efficient debugging: debugging hundreds of Microservices becomes easier and faster with a Service Mesh.

- Failure analysis: the frequency of failures can be analyzed to determine when and why they occur, so that a permanent fix can be provided.

Characteristics of Service Mesh

- Distributed.

- Controlled by the control plane.

- Provides all networking functions, such as routing and monitoring, through software components (handled by APIs).

- Decreases operational complexity.

- Provides an abstraction layer on top of Microservices distributed across various clusters, multiple data centers, or multiple clouds.

- Decentralized.

- Adds very little latency, as the proxies are lightweight.

- Proxy sidecars are injected dynamically into the deployment.

How to adopt Service Mesh?

Adopting a Service Mesh is a daunting task at first, since it is a new paradigm. The basic requirement is that the application runs as a collection of Microservices in containers on top of Kubernetes. With only 10-15 Microservices, implementing a Service Mesh might not be the right decision, as it adds more overhead. With more than 50-100 Microservices, however, adding a Service Mesh abstraction layer can be quite beneficial for simplifying communication between the services. It enables faster development and Continuous Deployment of applications, because teams can focus on building business logic rather than network-layer functions. All network and communication-related functions are handled by the proxies deployed in the data plane. Create health endpoints for each service. Adopting a Service Mesh takes some time, but when done correctly, it is worth adding this new infrastructure layer on top of all the Microservices running in the cluster.

What are the best practices of Service Mesh?

Best practices for implementing a Service Mesh layer atop existing Microservices are as follows:

- Auto-inject proxies - Always try to inject proxies automatically rather than manually. CI/CD pipelines run well with auto-injection.

- Focus on automation - Automate everything.

- Monitor and trace everything - Limit the impact of failures by monitoring everything.

- Keep only minimal network functions inside application logic - Just enough to connect to the proxy, send a request, and receive a response.

Service Mesh Tools

Tools to manage Microservices using a Service Mesh:

- Istio (more battle-tested in production and used by many companies)

- Linkerd

- Containers and Orchestration - Docker, Kubernetes.

- Service Discovery - kube-proxy, etcd, Apache ZooKeeper.

- API Gateway - Connects to the Service Mesh and, in turn, to the downstream Microservices running in the cluster.

Conclusion

The most complex thing about Microservices is not building and deploying them. Because Microservices are deployed independently, there might be hundreds of them running inside Docker containers, managed using Kubernetes or Docker Swarm. For all the advantages of a Microservices architecture, it becomes really hard to manage all of them. When things fail, it is a nightmare to find out where connections are breaking and why certain requests are failing or suffering from latency. There is a need to abstract the service layer and manage communication between services.
