A graph database is “a database that uses graph architecture for semantic inquiry with nodes, edges, and properties to represent and store data.” Every graph database contains a number of objects. These objects are known as vertices, and the relationships between vertices are represented as edges, each connecting two vertices. We can say that our data model is a graph model if it contains many-to-many relationships, is highly hierarchical with multiple roots, has an uneven or varying number of levels, or has cyclical relationships.

Typical examples of link analysis using graph databases include social networks such as Twitter, Facebook, and LinkedIn.

- Nodes - A node represents an entity, much as we name entities as tables in a relational database.

- Relationship - A relationship is an edge that connects two nodes and represents the relationship between them.
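To make nodes and relationships concrete, here is a minimal in-memory sketch of a property graph in Python. The class and method names (`PropertyGraph`, `add_node`, `add_relationship`, `neighbors`) are hypothetical and do not correspond to any particular graph database's API; real systems persist and index this data rather than keeping it in Python lists.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """An entity such as a person or company; its label plays a role similar to a table name."""
    node_id: str
    label: str
    properties: dict = field(default_factory=dict)


@dataclass
class Relationship:
    """A directed, typed edge that connects exactly two nodes."""
    start: str
    end: str
    rel_type: str
    properties: dict = field(default_factory=dict)


class PropertyGraph:
    """Hypothetical in-memory store, for illustration only."""

    def __init__(self):
        self.nodes = {}
        self.relationships = []

    def add_node(self, node_id, label, **properties):
        self.nodes[node_id] = Node(node_id, label, properties)

    def add_relationship(self, start, end, rel_type, **properties):
        # A relationship is only valid between two nodes that already exist.
        if start not in self.nodes or end not in self.nodes:
            raise KeyError("both endpoints must be existing nodes")
        self.relationships.append(Relationship(start, end, rel_type, properties))

    def neighbors(self, node_id, rel_type=None):
        """Return ids of nodes reached over outgoing relationships (optionally of one type)."""
        return [
            r.end
            for r in self.relationships
            if r.start == node_id and (rel_type is None or r.rel_type == rel_type)
        ]


g = PropertyGraph()
g.add_node("alice", "Person", name="Alice")
g.add_node("bob", "Person", name="Bob")
g.add_node("acme", "Company", name="Acme")
g.add_relationship("alice", "bob", "FOLLOWS", since=2020)
g.add_relationship("alice", "acme", "WORKS_AT")
print(g.neighbors("alice"))             # ['bob', 'acme']
print(g.neighbors("alice", "FOLLOWS"))  # ['bob']
```

Both nodes and relationships carry their own properties here, which is what distinguishes a property graph from a plain adjacency list.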

What are the types of Graph Databases?

- Databases based on relational storage (triple stores).

- An example of a graph that falls under this type of graph database is the hyperlink graph.

- Databases based on native storage.

In the triple format used by triple stores, data is stored in the form of subject, predicate, and object. Triple stores support semantic querying, and a query language such as SPARQL is used to query this type of store (semantic structure). A graph database's data model is a multi-relational graph, and the relationships between the nodes of the graph can be unidirectional or bidirectional.
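The triple format and SPARQL-style pattern matching can be illustrated with a small Python sketch. The data is hypothetical, and the `match` helper is an illustrative stand-in for a query engine, not SPARQL itself: each `None` argument plays the role of a query variable.

```python
# Each fact is a (subject, predicate, object) triple.
TRIPLES = [
    ("alice", "knows", "bob"),
    ("bob", "knows", "carol"),
    ("alice", "worksAt", "acme"),
    ("carol", "worksAt", "acme"),
]


def match(triples, subject=None, predicate=None, obj=None):
    """Return triples matching a pattern; None acts as a wildcard, like a SPARQL variable."""
    return [
        (s, p, o)
        for s, p, o in triples
        if (subject is None or s == subject)
        and (predicate is None or p == predicate)
        and (obj is None or o == obj)
    ]


# Roughly corresponds to: SELECT ?who WHERE { ?who <worksAt> <acme> }
employees = [s for s, _, _ in match(TRIPLES, predicate="worksAt", obj="acme")]
print(employees)  # ['alice', 'carol']
```

A real triple store indexes triples by subject, predicate, and object so that such patterns do not require scanning every fact.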

Why do we require Big Data?

The above example of combining the Walmart dataset with the weather dataset gives us many reasons why organizations need to use big data. Some of these reasons are:

- It helps reveal hidden information.

- In the above example, Walmart would not be able to find out from its own data alone that demand for specific items increases during the storm season.

- But if we analyze Walmart's historical data together with the weather data, we can find out in advance which items see increased demand in which season.

- So Walmart can increase its stock of those items in advance.

Nowadays, most available graph databases use native graph storage, because it performs better in terms of both storage and processing.

Two main features of native graph technology distinguish it from non-native graph database technology:

- Storage

- Processing

Storage - Non-native graph databases do not have their own storage; they use the storage of relational databases. Native graph databases have their own storage, built specifically to store highly interconnected data, which makes storing and retrieving such data efficient. This feature is missing from non-native graph storage.

Processing - This refers to how operations on a graph database are processed, both when storing data in the graph database and when executing queries on the data stored in the graph.
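One way to picture the processing difference is a neighbour lookup, sketched below under simplifying assumptions. The non-native variant scans a separate edge table, while the native variant follows adjacency lists stored with each node (an approach often called index-free adjacency). A real relational engine would use indexes instead of a full scan, so treat this as an illustration of the access pattern, not a benchmark.

```python
# Non-native style: relationships live in a separate edge "table" (a list of rows),
# so finding a node's neighbours means searching that table.
EDGE_TABLE = [("a", "b"), ("a", "c"), ("b", "c"), ("c", "d")]


def neighbours_from_table(node):
    # Cost grows with the total number of edges (a real RDBMS would use an index).
    return [dst for src, dst in EDGE_TABLE if src == node]


# Native style: each node keeps direct references to its neighbours,
# so a traversal step only touches that node's own adjacency list.
ADJACENCY = {}
for src, dst in EDGE_TABLE:
    ADJACENCY.setdefault(src, []).append(dst)


def neighbours_native(node):
    # Cost depends only on this node's degree.
    return ADJACENCY.get(node, [])


print(neighbours_from_table("a"))  # ['b', 'c']
print(neighbours_native("a"))      # ['b', 'c']
```

Both functions return the same answer; the difference is how much data each lookup has to touch as the graph grows.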

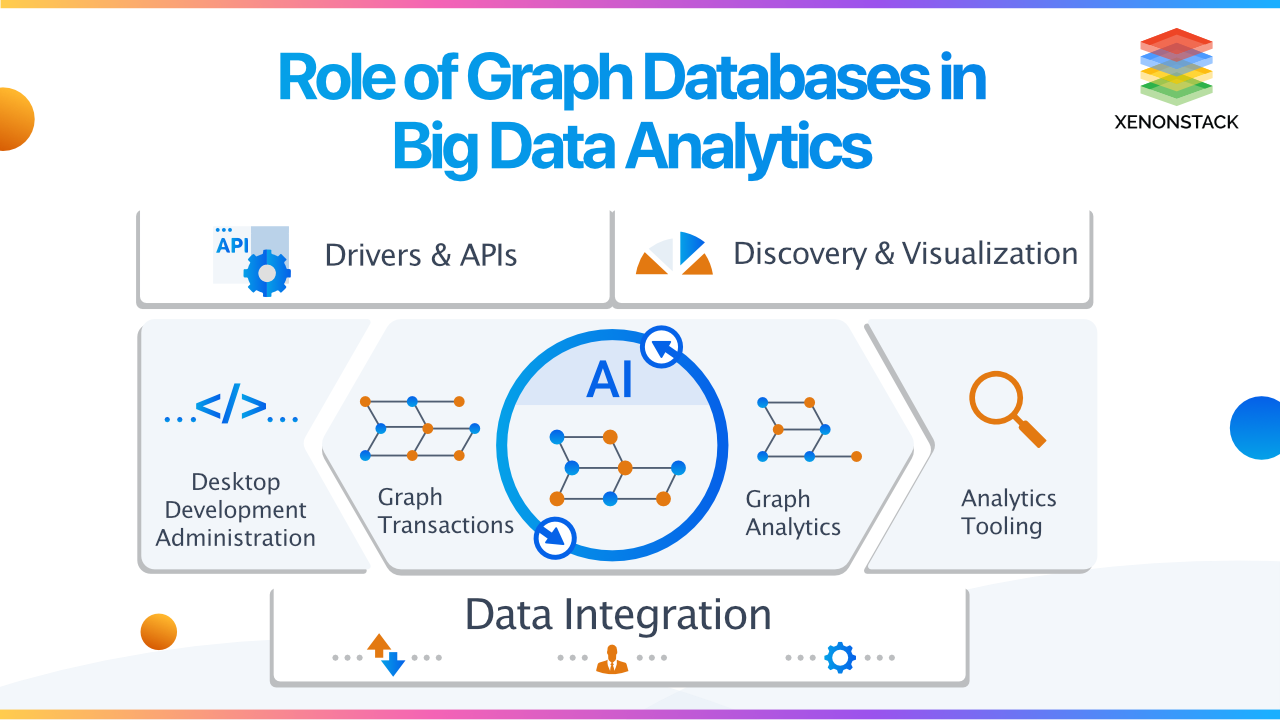

What is the role of graph databases in Big Data Analytics?

We require graph databases in big data so that we can organize messy or complicated data points according to their relationships. Graph analytics for big data is an alternative to the traditional data warehouse model: it provides a framework for absorbing both structured and unstructured data from various sources so that analysts can probe the data in an undirected manner.

Big Data Architecture

- Data Collection

- Data Storage

- Data Analysis Module

- Output and Data Visualization Module
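The four modules listed above can be read as stages of a pipeline. The following Python sketch wires hypothetical `collect`, `store`, `analyze`, and `output` functions together on three hard-coded JSON records; a production system would replace each stage with dedicated tools.

```python
import json
from collections import Counter


def collect():
    """Data collection: gather raw records from sources (hard-coded here)."""
    return [
        '{"user": "alice", "action": "view"}',
        '{"user": "bob", "action": "buy"}',
        '{"user": "alice", "action": "buy"}',
    ]


def store(raw_records):
    """Data storage: parse raw text into structured records and keep them."""
    return [json.loads(r) for r in raw_records]


def analyze(records):
    """Data analysis: count how often each action occurs."""
    return Counter(r["action"] for r in records)


def output(result):
    """Output and visualization: render a simple text report."""
    return "\n".join(f"{action}: {count}" for action, count in sorted(result.items()))


report = output(analyze(store(collect())))
print(report)
```

Keeping each stage as a separate function mirrors the modular architecture: any stage can be swapped out without changing the others.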

Planning the Big Data Architecture

Big data architecture includes ingesting, protecting, processing, and transforming data into file systems or database structures. Analytics tools and queries can be run in the environment to extract intelligence from the data, which is then output to a variety of different vehicles. A big data architecture consists of the following layers:

- Big data sources layer - Data sources for a big data architecture are all over the map. Data can come from company servers and sensors, or from third-party providers. The big data environment can ingest data in batch mode or in real time for analysis. Examples of data sources include data warehouses, relational database management systems (RDBMS) and other databases, mobile devices, sensors, social media, and email.

- Data processing and storage layer - This layer receives the data from the sources. If necessary, it converts unstructured data into a format that analytic tools can understand and stores the data according to its format, so that various types of analytics can be executed on it. The big data architecture might store structured data in an RDBMS, and unstructured data in a specialized file system such as the Hadoop Distributed File System (HDFS) or in a NoSQL database.

- Analysis layer - This layer of the big data architecture interacts with stored data to extract business intelligence. Multiple analytics tools operate in the big data environment. Structured data supports mature technologies such as sampling, while unstructured data needs more advanced, specialized analytics tools.

- Consumption layer - This layer receives the analysis results and presents them. If we are using graph databases, we need graph visualization tools suited to our requirements so that we can view the results in proper graph form.

As an example, let's look at a big data architecture built on Hadoop, a popular ecosystem. Hadoop is open source, and several vendors and large cloud providers offer Hadoop systems and support.

Core Clusters

Hadoop's architecture takes the form of a cluster. It runs on commodity servers; dual-CPU servers with 4-8 cores each and at least 48 GB of RAM are recommended (accelerated analytics technologies such as Apache Spark can speed up the environment even more). Another option is a cloud Hadoop environment, where the cloud provider manages the infrastructure for us. Cloud is a better choice for Hadoop installation when you don't want to grow your data center racks.

Loading the Data

Hadoop supports batch data, such as files or records loaded at specific times of the day, as well as event-driven data. Recommended tools for loading source data include Apache Sqoop for batch loading and Apache Flume for event-driven data loading. Our big data environment can stage the incoming data for processing, including converting data as needed and sending it to the correct storage in the right format. Additional activities include partitioning the data and assigning access controls.

Data Processing

Once the system has ingested, identified, and stored the data, the data is processed automatically. This is a two-step process: the data is first transformed and then analyzed. Transforming data means processing it into analytics-ready formats.

Output and Querying

One of Hadoop's standout features is that once data is processed and placed, we can use different analytics tools that operate on the same unchanging data set (the one we applied transformations to).

Data Pipelines

Micro- and macro-pipelines enable processing steps. Micro-pipelines work at a step-based level to create sub-processes. For example, consider customer transactional data from the company's primary data centre. This data poses an issue because it includes customer credit card numbers, so a micro-pipeline adds a processing step that cleans the credit card numbers. Macro-pipelines operate at the workflow level. They define:

1) Workflow Control: what steps enable the workflow.

2) Action: what occurs at each stage to allow proper workflow.
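The credit card example can be sketched in Python as follows. Each function is one micro-pipeline step, and the macro-pipeline is modelled, as an assumption about how to map the two definitions above, by an ordered list of steps (workflow control) whose functions are the actions applied at each stage. The field names and records are hypothetical.

```python
def drop_empty(record):
    """Micro-pipeline step: discard transactions without an amount."""
    return record if record.get("amount") else None


def mask_card(record):
    """Micro-pipeline step: keep only the last four digits of the card number."""
    digits = "".join(ch for ch in record["card_number"] if ch.isdigit())
    return {**record, "card_number": "*" * (len(digits) - 4) + digits[-4:]}


# Macro-pipeline: workflow control is the ordered list of steps,
# and each function is the action performed at that stage.
WORKFLOW = [drop_empty, mask_card]


def run_workflow(records, steps):
    out = []
    for record in records:
        for step in steps:
            record = step(record)
            if record is None:  # a step filtered this record out
                break
        else:
            out.append(record)
    return out


transactions = [
    {"customer": "c1", "card_number": "4111 1111 1111 1234", "amount": 25.0},
    {"customer": "c2", "card_number": "5500-0000-0000-0004", "amount": 0},
]
cleaned = run_workflow(transactions, WORKFLOW)
print(cleaned)
```

Because the workflow is just data, steps can be reordered, added, or removed without touching the step functions themselves.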

What is Graph Analytics?

When we talk about graph databases, we also have to talk about graph analytics, which is required to analyze the graph. A graph is a mathematical structure consisting of nodes (or vertices) connected by edges. The nodes represent the different entities of the system, and the edges represent the relationships between them. When graphs are applied to data science, they become powerful, organized data structures that represent complex dependencies in the data. Graph analytics is used to model pairwise relationships between people and/or objects in any system.

The strength of a relationship is determined by how the nodes communicate with each other, which other participants are involved in the communication, and how important each node is in the communication, depending on the context of the analysis. Analyzing this helps generate insights into the strength and direction of the relationship. The edges are the more critical component; they may connect nodes to other nodes or to their properties. Unlike conventional analytics algorithms, which focus on summarizing, aggregating, and reporting on data, graph analytics offers features for analyzing relationships. The main types of analysis done using graphs are:

- Path Analysis

- Connectivity Analysis

- Community Analysis

- Centrality Analysis

- SubGraph Analysis

Path analysis - This technique analyzes the connections between a pair of entities, for example, the distance between them.
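Path analysis on an unweighted graph is commonly done with breadth-first search, which finds the path with the fewest hops. The sketch below uses a small hypothetical friendship graph stored as an adjacency dictionary; weighted distances would need an algorithm such as Dijkstra's instead.

```python
from collections import deque


def shortest_path(graph, start, goal):
    """Breadth-first search: fewest-hop path between two nodes of an unweighted graph."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through the predecessors to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in graph.get(node, []):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None  # goal not reachable


friends = {
    "alice": ["bob", "carol"],
    "bob": ["alice", "dave"],
    "carol": ["alice", "dave"],
    "dave": ["bob", "carol", "erin"],
    "erin": ["dave"],
}
path = shortest_path(friends, "alice", "erin")
print(path, "distance:", len(path) - 1)  # ['alice', 'bob', 'dave', 'erin'] distance: 3
```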

Connectivity analysis - This technique assesses the strength of the links between nodes. One application of connectivity analysis is identifying weak links in a power grid.
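A simple way to find weak links is to look for bridges: edges whose removal splits the graph into disconnected parts. The brute-force Python sketch below removes each edge in turn and checks connectivity; it assumes an undirected, initially connected graph without duplicate edges, and the grid data is hypothetical. Large networks would use a linear-time bridge-finding algorithm (such as Tarjan's) instead.

```python
def is_connected(nodes, edges):
    """Return True if every node can reach every other node (undirected)."""
    if not nodes:
        return True
    adjacency = {n: set() for n in nodes}
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v].add(u)
    start = next(iter(nodes))
    seen, stack = {start}, [start]
    while stack:
        for nxt in adjacency[stack.pop()]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return len(seen) == len(nodes)


def weak_links(nodes, edges):
    """Edges whose removal disconnects the graph (bridges), found by brute force."""
    return [e for e in edges if not is_connected(nodes, [x for x in edges if x != e])]


# Hypothetical grid: two substation rings joined by a single line C-D.
stations = {"A", "B", "C", "D", "E", "F"}
lines = [("A", "B"), ("B", "C"), ("C", "A"), ("C", "D"), ("D", "E"), ("E", "F"), ("F", "D")]
print(weak_links(stations, lines))  # [('C', 'D')]
```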

Centrality Analysis - This helps identify the relevance of the different entities in a network and analyze the most central ones. One can use it to find the most highly accessed websites or web pages for further analysis. In a graph database, we can use it to find the most highly accessed node in the graph.
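Degree centrality is the simplest centrality measure: a node's score is the fraction of other nodes it is directly linked to. The sketch below treats hypothetical links between web pages as undirected and assumes no self-loops or duplicate edges; measures such as betweenness centrality or PageRank capture importance in other ways.

```python
from collections import Counter


def degree_centrality(edges):
    """Normalized degree centrality for an undirected edge list."""
    degree = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    n = len(degree)
    # Divide by n - 1, the maximum number of neighbours a node can have.
    return {node: d / (n - 1) for node, d in degree.items()}


links = [
    ("home", "about"),
    ("home", "blog"),
    ("blog", "post1"),
    ("blog", "post2"),
    ("blog", "about"),
]
centrality = degree_centrality(links)
most_central = max(centrality, key=centrality.get)
print(most_central, centrality[most_central])  # blog 1.0
```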

Community Analysis - This is a distance- and density-based analysis used to identify communities of people or devices in a large network. For example, it can be used to detect a target audience by identifying groups of people on a social network.
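Full community-detection algorithms (for example, Louvain or label propagation) are beyond a short example. As a simplified stand-in, the Python sketch below groups nodes into connected components and then reports each group's density, the fraction of possible links inside the group that actually exist. The social network data is hypothetical.

```python
def communities(edges):
    """Group nodes into connected components (a simplified stand-in for community detection)."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    seen, groups = set(), []
    for start in sorted(adjacency):
        if start in seen:
            continue
        group, stack = set(), [start]
        seen.add(start)
        while stack:
            node = stack.pop()
            group.add(node)
            for nxt in adjacency[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        groups.append(group)
    return groups


def density(group, edges):
    """Fraction of possible undirected links inside the group that actually exist."""
    inside = sum(1 for u, v in edges if u in group and v in group)
    possible = len(group) * (len(group) - 1) / 2
    return inside / possible if possible else 0.0


follows = [("ann", "ben"), ("ben", "cat"), ("cat", "ann"), ("dan", "eve"), ("eve", "fay")]
for group in communities(follows):
    print(sorted(group), round(density(group, follows), 2))
```

The first group forms a fully linked triangle (density 1.0), while the second is a chain that is only partly linked (density about 0.67).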

Sub-graph Analysis - This can be used to identify patterns of relationships. Examples of its applications include fraud detection and identifying hacker attacks.
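Sub-graph analysis often means searching for a specific pattern. The sketch below looks for one pattern that is commonly associated with fraud rings: two different accounts using the same device. The edge list is hypothetical, and the Cypher-like pattern in the docstring is only notation, not an executed query.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical (account, device) edges meaning "account USES device".
uses = [
    ("acct1", "device_x"),
    ("acct2", "device_x"),
    ("acct3", "device_y"),
    ("acct4", "device_z"),
    ("acct5", "device_z"),
]


def shared_device_pairs(edges):
    """Find the pattern (a)-[:USES]->(d)<-[:USES]-(b) with a != b."""
    accounts_by_device = defaultdict(set)
    for account, device in edges:
        accounts_by_device[device].add(account)
    matches = []
    for device, accounts in sorted(accounts_by_device.items()):
        # Every pair of distinct accounts on the same device is one match.
        for a, b in combinations(sorted(accounts), 2):
            matches.append((a, device, b))
    return matches


print(shared_device_pairs(uses))
# [('acct1', 'device_x', 'acct2'), ('acct4', 'device_z', 'acct5')]
```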

What are the applications of Graph Analytics?

Graph analytics is helpful for assigning page ranks to web pages in order to analyze their performance. It is widely used in social media analytics and in other applications. Some other use cases are:

Link-Based Mining Activities

Link-based mining can be classified into various techniques. It covers algorithms such as:

- PageRank

- HITS

- SimRank

PageRank Algorithm

Contrary to popular belief, PageRank is not named after the fact that the algorithm ranks pages; it is named after Larry Page, one of its inventors. Ranks are assigned not to subject domains but to specific web pages. According to its creators, the rank of a web page averages about 1. Most estimation tools depict it as an integer in the range [0, 10], with 0 being the lowest rank. PageRank is an algorithm that addresses the problem of link-based object ranking (LOR): its objective is to assign a numerical rank, or priority, to each web page. It works with a model in which a user starts at a web page and performs a “random walk” by randomly following links from the page they are currently on.
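The random-walk model can be sketched with power iteration in Python. This version assumes a damping factor of 0.85 (the value suggested in the original PageRank paper), spreads the rank of pages without outgoing links evenly across all pages, and normalizes ranks to sum to 1 rather than averaging 1. The link graph is hypothetical.

```python
def pagerank(graph, damping=0.85, iterations=100, tol=1e-10):
    """Power-iteration PageRank over a dict mapping page -> list of outlinks.

    Ranks sum to 1; multiplying by the number of pages gives the
    "average of about 1" scale mentioned above.
    """
    pages = set(graph)
    for targets in graph.values():
        pages.update(targets)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Rank held by pages with no outlinks is spread evenly over all pages.
        dangling = sum(rank[p] for p in pages if not graph.get(p))
        # Random jump: with probability 1 - damping the surfer lands on any page.
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in graph.items():
            if targets:
                # Otherwise the surfer follows one of the page's links at random.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
        delta = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if delta < tol:
            break
    return rank


web = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(web)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

Page D has no incoming links, so its rank stays at the minimum (1 - 0.85) / 4, while C, which is linked from every other page, ranks highest.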

What are the best Data Visualization Tools?

The best data visualization tools are explained below:

Gource

Gource includes built-in log generation support for Git, Mercurial, Bazaar, and SVN. It can also parse logs produced by several third-party tools for CVS repositories. It helps visualize interconnected data.

Walrus

Walrus is a tool for visualizing large directed graphs in three-dimensional space. With it, it is possible to display graphs containing a million nodes or more, but occlusion, visual clutter, and other factors can diminish its effectiveness as the degree of connectivity or the number of nodes increases. In practice, therefore, Walrus is suited to visualizing moderately sized graphs. It uses 3D hyperbolic geometry to display graphs under a fisheye-like distortion, which lets the user examine the fine details of a small area of the graph by magnifying it. Walrus currently has some requirements, restrictions, and limitations that may make it unsuitable for a given problem domain or dataset:

- It supports only directed graphs.

- It supports only connected graphs with reachable nodes.

- Multiple links are not supported.

- It doesn't support graphs that change dynamically.

- Only one graph can be loaded at a time.

- It is not an API; it is a standalone application.

KeyLines

Developers use KeyLines to build powerful custom network visualization applications. KeyLines applications work on any device and in all standard web browsers, so they reach everyone who needs to use them. KeyLines is compatible with any IT environment, letting you deploy your network visualization application to an unlimited number of diverse users.

Linkurious

Import your data into Linkurious and start finding answers. Query, visualize, and collaboratively investigate your data to discover hidden insights.

Cytoscape

Cytoscape is open-source software for visualizing biological networks and pathways and integrating these networks with annotations and other state data. It was initially designed for biological research, but it is now a general platform for complex network analysis and visualization. Its core distribution provides a basic set of features for data integration, analysis, and visualization. Additional features are available as Apps (formerly called plugins), including apps for network and molecular profiling analyses, new layouts, additional file format support, scripting, and connection with databases.

VisANT

VisANT is used for visual analysis of metabolic networks in cells and ecosystems. Its meta-graph-based multi-modal visualization provides a way to represent a symbolic network of multiple species.

JSNetworkX

JSNetworkX is a JavaScript port of NetworkX, a Python package for creating, manipulating, and studying the structure, dynamics, and functions of complex networks. NetworkX supports loading and storing networks in standard and nonstandard data formats, generating many types of random and classic networks, analyzing network structure, building network models, designing new network algorithms, drawing networks, and much more.

Graph-tool

Graph-tool is a Python module for manipulation and statistical analysis of graphs. Unlike most other Python modules with similar functionality, its core data structures and algorithms are implemented in C++, making extensive use of template metaprogramming and relying heavily on the Boost Graph Library. This gives it a level of performance comparable (in both memory usage and computation time) to that of a pure C/C++ library.

Arcade

Arcade Analytics is your data’s new visual playground. In today’s visual world, it’s not enough to understand your data; you have to be able to present it. Convey relationships, connections, and more with contemporary graph analysis to find deeper, more significant insights into your data. If available, Arcade uses the GPU in the client’s browser for a smoother experience. Its graph analytics technology aims to help you not just understand the meaning of your data but take it to the next level.

Sigma.js

Sigma is a JavaScript library dedicated to graph drawing. It makes it easy to publish networks on web pages and allows developers to integrate network exploration into rich web applications.

D3.js

D3.js is a JavaScript library for manipulating documents based on data. D3 helps you bring data to life using HTML, SVG, and CSS. D3’s emphasis on web standards gives you the full capabilities of modern browsers without tying yourself to a proprietary framework, combining powerful visualization components with a data-driven approach to DOM manipulation.

Gephi

- Exploratory Data Analysis - Supports intuition-oriented analysis through real-time network manipulation.

- Link Analysis - Reveals the underlying structures of associations between objects.

- Social Network Analysis - Makes it easy to create social data connectors to map community organizations and small-world networks.

- Biological Network Analysis - Represents patterns in biological data.

- Poster Creation - Promotes scientific work with high-quality printable maps.

Graphviz

Graphviz is open-source graph visualization software. Graph visualization is a way of representing structural information in the form of graphs and networks. It is useful in areas such as networking, software engineering, bioinformatics, machine learning, databases, web design, and visual interfaces for other technical domains.

Gitgraph.js

Gitgraph.js is a simple JavaScript library designed to help you visually present git branching, such as a git workflow, a tricky git command, or whatever git tree you have in mind.

Alchemy.js

Alchemy is a graph visualization application for the web. You can create full applications with built-in features such as search, clustering, and filters, or embed small graphs as visual elements in larger projects.

NetworKit

NetworKit is a growing open-source toolkit for large-scale network analysis. It aims to provide tools for analyzing large networks ranging in size from thousands to billions of edges. For this purpose, it implements efficient graph algorithms, many of them parallel to utilize multicore architectures. These compute standard measures of network analysis, such as degree sequences, clustering coefficients, and centrality measures. In this respect, NetworKit is comparable to packages such as NetworkX, albeit with a focus on parallelism and scalability.

Netlytic

Netlytic is a cloud-based text and social network analyzer that can automatically discover communication networks from publicly available social media posts. It uses public APIs to collect posts from Twitter, Instagram, YouTube, and Facebook. We can also use it to analyze our own datasets.

Vis.js

Vis.js is a dynamic, browser-based visualization library. It is easy to use and makes handling large amounts of dynamic data easy. It consists of the components DataSet, Timeline, Network, Graph2d, and Graph3d.

Holistic Strategy Towards Graph Database

We are all connected with each other. Semantic approaches to databases are particularly helpful because they reveal the links and relationships between related data, similarly to how we do so ourselves. In recent decades, our capacity to generate and store data has increased exponentially. At the same time, our large-scale processing capacity has increased, allowing us to analyze the data generated by the activities we carry out every day. While data analysis has long been one of the cornerstones of scientific, technological, and economic advances, it is the amount of data available today that has opened the door to new opportunities.

For example, some patterns of consumer behavior that are impossible to detect with little data become evident at a large scale. In the same way, the parameters of certain predictive models, which in the absence of sufficient data are chosen based on the expertise of professionals in the area, can be estimated accurately when the amount of data is massive.
