Introduction to Docker Container

Docker is an open platform that makes it easier to create, deploy, and run applications by using containers. Docker containers let us separate applications from the underlying infrastructure, so we can deliver software faster. Docker's main components include Docker Engine, Docker Daemon, Docker Images, Docker Compose, and Docker Swarm. With Docker, we can manage our infrastructure in the same way we manage our applications.

What is Docker?

Docker is similar to a virtual machine, but rather than creating a whole new virtual machine, it lets containers share the host's Linux kernel. The advantage of the Docker platform is that we can ship, test, and deploy code faster, reducing the time between writing code and running it in production. Most importantly, Docker is open source: anyone can use it and contribute to it, making it easier to use and adding features it does not yet have.


What are the Components of Docker Container?

The core of Docker consists of:

- Docker Engine

- Docker Containers

- Docker images

- Docker Client

- Docker daemon

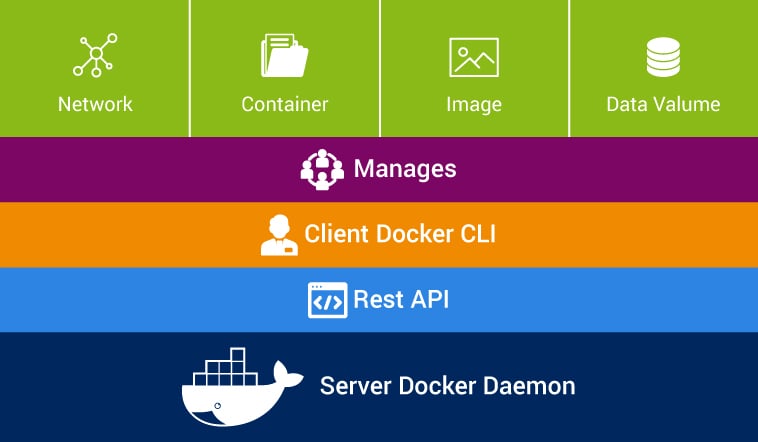

Let us start with Docker Engine. Docker Engine is the part of Docker that creates and runs Docker containers; a Docker container is a live, running instance of a Docker image. Docker Engine is a client-server application with the following components:

- A server, which is a long-running program called a daemon process (dockerd).

- A REST API, which programs use to talk to the daemon and instruct it what to do.

- A command line interface client.

The CLI client uses the Docker REST API to control or interact with the Docker daemon through CLI commands. Many other Docker applications also use the underlying API and CLI. The daemon creates and manages Docker images, containers, networks, and volumes.
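To make the client/daemon split concrete, here is a minimal Python sketch (standard library only) of what the CLI does under the hood: it sends a plain HTTP request to the daemon's REST API over a Unix socket. It assumes the daemon listens on the default Linux socket /var/run/docker.sock and that the current user is allowed to access it. The helper names UnixHTTPConnection and docker_api_get are our own, not part of any Docker SDK.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_api_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send GET <path> to the Docker Engine API and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Docker API returned {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

For example, docker_api_get("/containers/json") returns roughly the data that `docker ps` prints, and docker_api_get("/version") the data behind `docker version`.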


Why Do We Need the Docker Daemon?

The Docker daemon (dockerd) controls and manages containers. It listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

Using Docker Client

The Docker client (docker) is the primary way Docker users interact with Docker. When we run a command such as “docker run”, the client sends it to dockerd, which carries it out. The docker command uses the Docker API, and a single client can communicate with more than one daemon.
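Which daemon the client talks to is a client-side setting. The Docker CLI honours the -H/--host flag and the DOCKER_HOST environment variable, and falls back to the local Unix socket. The Python sketch below mimics only that fallback rule for a few URL schemes. resolve_docker_host is a hypothetical helper; real clients also consider Docker contexts and TLS settings, which are not modelled here.

```python
from urllib.parse import urlparse

# Default endpoint of a local Docker daemon on Linux.
DEFAULT_DOCKER_HOST = "unix:///var/run/docker.sock"


def resolve_docker_host(env) -> tuple:
    """Return (scheme, address) of the daemon a client would contact."""
    url = env.get("DOCKER_HOST") or DEFAULT_DOCKER_HOST
    parsed = urlparse(url)
    if parsed.scheme == "unix":
        # For unix:// URLs the socket path ends up in .path.
        return ("unix", parsed.path)
    if parsed.scheme in ("tcp", "ssh"):
        # Remote daemons: host:port for tcp, user@host for ssh.
        return (parsed.scheme, parsed.netloc)
    raise ValueError(f"unsupported DOCKER_HOST scheme: {parsed.scheme!r}")
```

Calling resolve_docker_host(os.environ) with DOCKER_HOST=tcp://10.0.0.5:2376 would point the client at a remote daemon instead of the local one.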

What Are Docker Images?

Docker images are the building blocks of Docker: an image is a read-only template with instructions for creating a Docker container. Images are the build component of the Docker life cycle. Often, an image is based on another image with some additional customization. For example, we can build an image based on the centos image that installs the Nginx web server along with our application and the configuration details needed to make it run. We can create our own images or use images created by others and published in a registry.

Building our own image is simple: we create a Dockerfile, using a simple syntax to define the steps needed to create the image and run it. Each instruction in a Dockerfile creates a layer in the image. When we change the Dockerfile and rebuild the image, only the layer that changed, and the layers built on top of it, are rebuilt. This is part of what makes images so lightweight, small, and fast compared to other virtualization technologies.
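The rebuild behaviour can be modelled with a chained cache key. Each layer's key depends on its own instruction and on its parent's key, so changing one instruction invalidates that layer and every layer after it. This Python sketch is a simplification, not Docker's actual cache algorithm. In particular, the real builder also checksums the files used by COPY and ADD, which we ignore here, and the instructions are illustrative.

```python
import hashlib


def layer_keys(instructions):
    """Chain each instruction's cache key to the key of the layer below it."""
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(parent)
    return keys


def rebuild_plan(previous_build, new_build):
    """Mark each step of new_build as 'cached' or 'rebuild'."""
    cache = set(layer_keys(previous_build))
    return ["cached" if key in cache else "rebuild" for key in layer_keys(new_build)]


OLD = [
    "FROM nginx:1.25-alpine",
    "RUN apk add --no-cache curl",
    "COPY site/ /usr/share/nginx/html/",
    'CMD ["nginx", "-g", "daemon off;"]',
]
# Only the COPY step changes; the CMD layer after it is invalidated too.
NEW = OLD[:2] + ["COPY public/ /usr/share/nginx/html/"] + OLD[3:]
```

This is also why Dockerfiles usually put rarely changing steps (base image, package installs) before frequently changing ones such as copying application code.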


Managing Docker Registries

A Docker registry stores Docker images. Docker Hub is a public registry, and we can also run our own private registry. When we execute the docker pull or docker run commands, the required images are pulled from our configured registry. With the docker push command, an image is uploaded to our configured registry.
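When docker pull or docker run gets a short name such as nginx, the client expands it to a fully qualified reference before contacting a registry. Three rules apply: names without a registry host go to Docker Hub (docker.io), official images live under library/, and a missing tag means latest. The Python function below is a simplified sketch of those rules. It ignores digests (@sha256:...) and the extra validation the real client performs.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image name to registry/path:tag (simplified rules)."""
    name, tag = ref, "latest"
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        # A ':' after the last '/' separates the tag (not a registry port).
        name, tag = ref[:colon], ref[colon + 1:]
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        # First component looks like a host, e.g. localhost:5000 or ghcr.io.
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
    if registry == "docker.io" and "/" not in path:
        # Official Docker Hub images live under the library/ namespace.
        path = "library/" + path
    return f"{registry}/{path}:{tag}"
```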

Docker Containers

A container is a runnable instance of an image. We can create, start, stop, or delete a container using the Docker CLI. We can connect a container to one or more networks, or even create a new image based on its current state. By default, a container is relatively well isolated from other containers and from its host machine. A container is defined by its image as well as any configuration options we provide when we create or start it.

Docker Namespaces Components

Docker uses a Linux kernel feature called namespaces to provide the isolated workspace we call a container. When we run a container, Docker creates a set of namespaces for that container, and each namespace provides a layer of isolation. Some of these namespaces are:

- PID namespace - Isolates process ID allocation and the list of processes. Processes in a new PID namespace cannot see processes in the parent namespace, while processes in the parent namespace can still see all processes in the child namespace.

- Network namespace - Isolates network interface controllers, iptables firewall rules, routing tables, and so on. Network namespaces can be connected to each other using virtual Ethernet (veth) devices.
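On Linux, every process exposes the namespaces it belongs to as symbolic links under /proc/<pid>/ns. The sketch below reads them for a given process. Run inside a container, it should report different pid and net identifiers than a process on the host. Two processes in the same container should report identical ones. It assumes a Linux host with /proc mounted, and reading another user's process may require root.

```python
import os


def namespace_ids(pid="self"):
    """Map namespace type (pid, net, mnt, ...) to its kernel identifier."""
    ns_dir = f"/proc/{pid}/ns"
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        # Each entry is a symlink whose target looks like 'net:[4026531840]'.
        ids[name] = os.readlink(os.path.join(ns_dir, name))
    return ids


def share_namespace(pid_a, pid_b, kind="net"):
    """True if two processes are in the same namespace of the given kind."""
    return namespace_ids(pid_a)[kind] == namespace_ids(pid_b)[kind]
```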

Docker Control Groups

Docker Engine on Linux also relies on a kernel feature called control groups (cgroups). A control group limits an application to a specific set of resources. Docker Engine uses control groups to share the available hardware resources among containers and, optionally, to enforce limits. For example, using control groups we can limit the memory available to a particular container.
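In practice we set these limits with flags such as docker run --memory 512m --cpus 1.5, and Docker applies them through the container's cgroup. The Python sketch below shows both halves under simplifying assumptions:

- memory_to_bytes converts a size string with a b/k/m/g suffix (binary units, as Docker uses) into bytes.
- read_memory_limit reads the limit back from inside a container on a cgroup v2 host, where it lives in /sys/fs/cgroup/memory.max.

Both helper names are our own. Hosts still on cgroup v1 use a different file (memory/memory.limit_in_bytes).

```python
import re

# Binary multipliers, matching how Docker interprets --memory suffixes.
_UNITS = {"": 1, "b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def memory_to_bytes(value: str) -> int:
    """Convert '512m' / '1g' / '65536' style sizes to bytes (simplified)."""
    match = re.fullmatch(r"(\d+)([bkmg]?)", value.strip().lower())
    if match is None:
        raise ValueError(f"unsupported memory size: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit]


def read_memory_limit(path: str = "/sys/fs/cgroup/memory.max"):
    """Return the cgroup v2 memory limit in bytes, or None if unlimited."""
    with open(path) as f:
        raw = f.read().strip()
    # cgroup v2 writes the literal string 'max' when no limit is set.
    return None if raw == "max" else int(raw)
```

Inside a container started with --memory 512m on a cgroup v2 host, read_memory_limit() should return the same value that memory_to_bytes("512m") computes.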

Union File Systems

Union file systems (UnionFS) are file systems that operate by creating layers, which makes them very lightweight and fast. Docker Engine uses a union file system to provide the building blocks for containers. Docker Engine supports several storage drivers for this, including overlay2 (the default on current Linux distributions), AUFS, btrfs, Device Mapper, and vfs.
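The layering idea can be illustrated with Python's collections.ChainMap. Lookups fall through from the top layer to the bottom one, and writes go only to the first (writable) map. This is a toy model of copy-on-write at whole-file granularity, not how overlay2 or AUFS are implemented. Deletions, which real drivers record as "whiteout" entries, are not modelled, and the file contents are made up.

```python
from collections import ChainMap


def container_view(*image_layers):
    """Stack read-only image layers (listed bottom to top) under a writable layer."""
    writable = {}
    # ChainMap searches maps left to right, so the top-most layer goes first.
    return ChainMap(writable, *reversed(image_layers))


base_layer = {"/etc/os-release": "Alpine Linux", "/usr/sbin/nginx": "<binary>"}
app_layer = {"/usr/share/nginx/html/index.html": "<h1>v1</h1>"}

fs = container_view(base_layer, app_layer)
# Writes land only in the writable container layer (copy-on-write);
# the image layers underneath stay unchanged and can be shared.
fs["/usr/share/nginx/html/index.html"] = "<h1>patched</h1>"
```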

Docker Container Format

Docker Engine combines namespaces, control groups, and UnionFS into a wrapper called a container format. The default container format is libcontainer.

Using Docker File

A Dockerfile is a text file that contains all the commands a user could call on the command line to assemble an image. Starting from a base Docker image, it adds and copies files, runs commands, and exposes ports. A Dockerfile can be thought of as source code, and the image as the compiled artifact from which our running containers are created. Dockerfiles are portable files that can be shared, stored, and updated as required. Some Dockerfile instructions are:

- FROM - Sets the base image for the subsequent instructions. It must be the first instruction in the Dockerfile (only ARG may appear before it).

- MAINTAINER - Indicates the author of the Dockerfile; it is non-executable. This instruction is deprecated, and a LABEL such as maintainer=... is now preferred.

- RUN - Executes a command on top of the existing layer and commits the result as a new layer.

- CMD - Does nothing while the image is being built. It specifies the default command to run when a container is started from the image.

- LABEL - Assigns metadata to the image as key-value pairs. It is best to use as few LABEL instructions as possible.

- EXPOSE - Documents the network ports on which the container listens at runtime, as required by application servers. It does not publish the ports by itself; that is done with -p when running the container.

- ENV - Sets environment variables for the container.

- COPY - Copies files and directories from the build context into the image.

- WORKDIR - Sets the working directory for the instructions that follow, such as RUN, CMD, and COPY.
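Below, a Dockerfile that uses most of the instructions above is embedded as a string, together with a minimal Python sketch. The sketch splits the Dockerfile into (instruction, arguments) pairs and checks two of the rules just described. The image contents, the site/ directory, and the helper names are illustrative assumptions. Real Dockerfile parsing (line continuations, heredocs, parser directives) is more involved.

```python
SAMPLE_DOCKERFILE = """\
# Hypothetical static-site image
FROM nginx:1.25-alpine
LABEL maintainer="dev@example.com" version="1.0"
ENV APP_ENV=production
WORKDIR /usr/share/nginx/html
RUN rm -f index.html
COPY site/ .
EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
"""


def parse_dockerfile(text):
    """Return (INSTRUCTION, arguments) pairs, skipping blank lines and comments.

    Simplified: lines ending in a backslash continuation are not joined.
    """
    steps = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        keyword, _, args = line.partition(" ")
        steps.append((keyword.upper(), args.strip()))
    return steps


def lint(steps):
    """Check two rules: FROM comes first (only ARG may precede it), no MAINTAINER."""
    problems = []
    first = next((kw for kw, _ in steps if kw != "ARG"), None)
    if first != "FROM":
        problems.append("first instruction (after any ARG) must be FROM")
    if any(kw == "MAINTAINER" for kw, _ in steps):
        problems.append("MAINTAINER is deprecated; use LABEL maintainer=... instead")
    return problems
```

With a site/ directory next to such a Dockerfile, docker build -t my-site . would build the image (my-site is a placeholder name). Running docker run -p 8080:80 my-site would then publish container port 80 on host port 8080; the publishing comes from -p, not from EXPOSE.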


What is Docker Container Platform and Architecture?

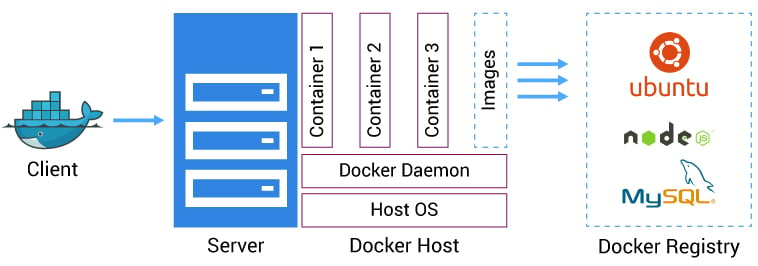

The advantage of Docker is that it lets us build, package, and run an application in an isolated sandbox environment called a container. The Docker container system uses operating-system-level virtualization to combine the components of an application into a package that runs on any standard Linux machine. Isolation and security allow us to run many containers in parallel on a given host.

Containers are lightweight because they do not need the extra load of a hypervisor such as Hyper-V or VMware; they run directly within the host machine's kernel. We can even run Docker containers within hosts that are themselves virtual machines. Docker uses a client-server architecture: the Docker client communicates with the Docker daemon, which does the heavy lifting of building, running, and distributing Docker containers. The client and daemon can run on the same system, or we can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface.

What are the Key Features of Docker?

- Docker lets us assemble applications from components faster and eliminates errors that can occur when shipping code. For example, we can run two Docker containers with two different versions of the same app on the same system.

- Docker helps us test code and deploy it to production as quickly as possible.

- Docker is simple to use. We can get started with Docker on a minimal Linux, Mac, or Windows system, running a compatible Linux kernel either directly or in a virtual machine, with the Docker binary installed.

- We can "dockerize" an application in a few hours, and most Docker containers can be launched within a minute.

- Docker containers run everywhere: on desktops, physical servers, virtual machines, in data centers, and in public and private clouds. And we can run the same containers in all of these environments.

What is Docker Security?

Docker security should be considered before we deploy and run containers. When reviewing Docker security, there are a few main areas to consider:

- The intrinsic security of the kernel and its support for namespaces and cgroups.

- The attack surface of the Docker daemon.

- Loopholes in the container configuration profile, either by default or after it has been customized by users.

- The hardening security features of the kernel and how they interact with containers.

What is Docker Compose?

Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, we use a compose file to configure the application's services. Then, with a single command, we create and start all the services from that configuration. Docker Compose is useful for development, testing, and staging environments. Using Compose is a three-step process:

- Define the app's environment with a Dockerfile so it can be reproduced anywhere.

- Define the services that make up the app in docker-compose.yml so they can be run together in an isolated environment.

- Run docker-compose up (docker compose up with Compose V2), and Compose starts and runs the entire app.
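A compose file maps service names to their settings, and docker compose up starts services in dependency order derived from depends_on. The Python sketch below represents a hypothetical three-service docker-compose.yml as a dictionary (shown as YAML in the comment). It computes one valid start order with graphlib.TopologicalSorter (Python 3.9+). It models the ordering rule only. By default, Compose waits for dependencies to start, not to be ready, unless a condition such as service_healthy is used.

```python
from graphlib import TopologicalSorter

# Hypothetical docker-compose.yml that this dictionary mirrors:
#
#   services:
#     db:
#       image: postgres:16
#       environment:
#         POSTGRES_PASSWORD: example
#     cache:
#       image: redis:7
#     web:
#       build: .
#       ports: ["8000:8000"]
#       depends_on: [db, cache]
SERVICES = {
    "db": {"image": "postgres:16", "environment": {"POSTGRES_PASSWORD": "example"}},
    "cache": {"image": "redis:7"},
    "web": {"build": ".", "ports": ["8000:8000"], "depends_on": ["db", "cache"]},
}


def start_order(services):
    """Return one order in which every service starts after its dependencies."""
    graph = {name: set(spec.get("depends_on", [])) for name, spec in services.items()}
    return list(TopologicalSorter(graph).static_order())
```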

What are the features of Docker Compose?

The features of Docker Compose that make it unique are:

- Multiple isolated environments can run on a single host.

- Volume data is preserved when containers are created.

- Only containers whose configuration has changed are recreated.
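The "only recreate what changed" behaviour boils down to comparing a fingerprint of each service's configuration with the one recorded for the running container. Compose stores such a hash in the com.docker.compose.config-hash container label. The sketch below uses a SHA-256 of canonical JSON as the fingerprint. That choice, the function names, and the example services are assumptions, and Compose's real hash covers a different set of fields.

```python
import hashlib
import json


def config_hash(service_config: dict) -> str:
    """Fingerprint a service definition; sort_keys makes key order irrelevant."""
    canonical = json.dumps(service_config, sort_keys=True)
    return hashlib.sha256(canonical.encode()).hexdigest()


def services_to_recreate(desired: dict, running_hashes: dict) -> list:
    """Names of services that are missing or whose configuration changed."""
    return sorted(
        name
        for name, config in desired.items()
        if running_hashes.get(name) != config_hash(config)
    )


deployed = {
    "db": {"image": "postgres:16", "environment": {"POSTGRES_PASSWORD": "example"}},
    "web": {"build": ".", "ports": ["8000:8000"]},
}
# Hashes as they would have been recorded when the stack was last brought up.
running = {name: config_hash(cfg) for name, cfg in deployed.items()}

# Edit only the web service's published port.
updated = {**deployed, "web": {"build": ".", "ports": ["8080:8000"]}}
```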


Getting Started With Docker Swarm Mode

Current versions of Docker Engine include swarm mode for natively managing a cluster of Docker Engines, called a swarm. Using the Docker CLI, we can create a swarm, deploy application services to it, and manage its behavior.

What are the Features of Docker Swarm Mode?

- Cluster management integrated with Docker Engine - Using the Docker Engine CLI, we create a swarm of Docker Engines to which we can deploy application services. No additional software is needed to create or manage a swarm.

- Decentralized design - Docker Engine handles node specialization at runtime rather than at deployment time, so we can deploy both kinds of nodes, managers and workers, using the same Docker Engine. This means we can build an entire swarm from a single disk image.

- Service model - Docker Engine uses a declarative approach that lets us define the desired state of the various services in our application stack.

- Scaling - For each service, we declare the number of tasks we want to run. When we scale up or down, the swarm manager automatically adapts by adding or removing tasks to maintain the desired state.

- Multi-host networking - We can specify an overlay network for our services. The swarm manager automatically assigns addresses to the containers on the overlay network when it initializes or updates the application.

- Service discovery - Swarm manager nodes assign each service in the swarm a unique DNS name and load-balance its running containers. We can query every container running in the swarm through a DNS server embedded in the swarm.

- Load balancing - We can expose the ports for services to an external load balancer. Internally, the swarm lets us specify how to distribute service containers between nodes.

- TLS certificates - Each node in the swarm enforces TLS mutual authentication and encryption to secure communications between itself and all other nodes. We can use self-signed root certificates or certificates from a custom root CA.

- Rolling updates - We can apply service updates to nodes incrementally. The swarm manager lets us control the delay between deploying the service to different sets of nodes. If something goes wrong, we can roll back to a previous version of the service.
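The declarative model behind scaling and rolling updates can be sketched in a few lines of Python. The manager compares desired state with observed state and emits the difference, and an update is applied to tasks in batches. On a real cluster, the equivalent commands look like docker service scale web=5 and docker service update --update-parallelism 2 --update-delay 10s --image <new-image> web, where web and <new-image> are placeholders. The functions below are an illustrative model, not Swarm's scheduler.

```python
def reconcile(desired_replicas: int, running_tasks: list) -> dict:
    """Compare desired and observed state; return the corrective actions."""
    missing = desired_replicas - len(running_tasks)
    return {
        # Number of new tasks to schedule when we are below the target.
        "start": max(missing, 0),
        # Surplus tasks to stop when we are above the target.
        "stop": running_tasks[desired_replicas:] if missing < 0 else [],
    }


def rolling_batches(task_ids: list, parallelism: int) -> list:
    """Split tasks into update batches; parallelism 0 means all at once."""
    if parallelism < 0:
        raise ValueError("parallelism must not be negative")
    if parallelism == 0:
        return [list(task_ids)]
    return [task_ids[i:i + parallelism] for i in range(0, len(task_ids), parallelism)]
```

Between batches, the manager would wait for the configured update delay before moving on.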

Docker Container Security

To secure a Docker Swarm cluster, we have the following options:

- Authentication using TLS

- Network access control

Docker Swarm - Setting Up TLS

All nodes in a Swarm cluster must bind their Docker Engine daemons to a network port. This brings with it all of the usual network-related security concerns, such as man-in-the-middle attacks. These risks are compounded when the network is untrusted, such as the internet. To mitigate these risks, Swarm and the Engine support TLS for authentication. Engine daemons, including the Swarm manager, that are configured to use TLS only accept commands from Docker Engine clients that sign their communications. The Engine and Swarm support both external third-party Certificate Authorities and internal corporate CAs. The default Docker Engine and Swarm ports for TLS are:

- Docker Engine daemon - 2376/tcp

- Docker Swarm manager - 3376/tcp
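On the client side, "signing communications" means presenting a client certificate during the TLS handshake. The Python sketch below builds such a mutual-TLS context from the three files the Docker CLI conventionally reads from ~/.docker (ca.pem, cert.pem, key.pem). The directory layout is an assumption about your setup. The function only prepares the context; it does not contact a daemon.

```python
import os
import ssl

# Default TLS ports listed above.
DOCKER_TLS_PORTS = {"engine": 2376, "swarm_manager": 3376}


def docker_tls_context(cert_dir: str = os.path.expanduser("~/.docker")) -> ssl.SSLContext:
    """Client-side mutual TLS: verify the daemon with ca.pem, prove identity with cert/key."""
    context = ssl.create_default_context(
        ssl.Purpose.SERVER_AUTH, cafile=os.path.join(cert_dir, "ca.pem")
    )
    # The client certificate is what lets a TLS-enabled daemon accept our commands.
    context.load_cert_chain(
        certfile=os.path.join(cert_dir, "cert.pem"),
        keyfile=os.path.join(cert_dir, "key.pem"),
    )
    return context
```

A context built this way could then be passed to http.client.HTTPSConnection("manager.example.com", DOCKER_TLS_PORTS["swarm_manager"], context=docker_tls_context()), where the host name is a placeholder.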

Docker Access Control Model

Production networks are usually locked down so that only allowed traffic can flow on the network. The lists below show the network ports and protocols used by the different components of a Swarm cluster. We should configure firewalls and other network access control lists to allow traffic on these ports.

Swarm Manager

- Inbound 80 TCP (HTTP) - Used for docker pull commands. If we need to pull images from Docker Hub, we must allow Internet connections through port 80, in most cases by default it's already allowed.

- Inbound 2375 TCP - Used for Docker Engine CLI commands directly to the Engine daemon.

- Inbound 3375 TCP - Used for Engine CLI commands to the Swarm manager.

- Inbound 22 TCP - This port allows remote access management via SSH.

Swarm Nodes

- Inbound 80 TCP (HTTP) - This port allows docker pull commands to work and pull images from Docker Hub.

- Inbound 2375 TCP - Used for Engine CLI commands directly to the Docker daemon.

- Inbound 22 TCP - Used for remote access management via SSH.

Custom, Cross-Host Container Networks

- Inbound 7946 TCP/UDP - This port allows for discovering other container networks.

- 4789 UDP - This port allows the container overlay network.

For added security, we should configure these port rules to allow connections only from interfaces on known Swarm devices. If the Swarm cluster is configured for TLS, replace 2375 and 3375 with 2376 and 3376.
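The port lists above translate directly into firewall rules. The Python sketch below encodes them, applies the TLS substitution (2375 to 2376, 3375 to 3376), and renders iptables rules that accept traffic only from a source network of known Swarm hosts. The CIDR 10.0.0.0/24 and the function names are placeholders. The output is a starting point to review, not a complete firewall policy; for instance, it has no default-deny rule.

```python
# Inbound ports from the lists above, as (port, protocol).
MANAGER_PORTS = [(80, "tcp"), (2375, "tcp"), (3375, "tcp"), (22, "tcp")]
NODE_PORTS = [(80, "tcp"), (2375, "tcp"), (22, "tcp")]
OVERLAY_PORTS = [(7946, "tcp"), (7946, "udp"), (4789, "udp")]
TLS_PORTS = {2375: 2376, 3375: 3376}


def inbound_ports(role: str, tls: bool = True, overlay: bool = True) -> list:
    """List (port, protocol) pairs that a 'manager' or 'node' must accept."""
    if role not in ("manager", "node"):
        raise ValueError(f"unknown role: {role!r}")
    base = MANAGER_PORTS if role == "manager" else NODE_PORTS
    ports = [(TLS_PORTS.get(port, port) if tls else port, proto) for port, proto in base]
    return ports + (OVERLAY_PORTS if overlay else [])


def iptables_rules(role: str, source_cidr: str, **options) -> list:
    """Render ACCEPT rules restricted to a trusted source network."""
    return [
        f"iptables -A INPUT -p {proto} -s {source_cidr} --dport {port} -j ACCEPT"
        for port, proto in inbound_ports(role, **options)
    ]
```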

Setting High Availability For Docker Swarm

The Swarm manager is the single point that accepts all commands for the Swarm cluster, and it also schedules resources against the cluster. If the Swarm manager becomes unavailable, cluster operations stop until it comes back up, which is unacceptable in critical scenarios. Swarm therefore provides High Availability (HA) features to protect against failures of the Swarm manager. We can use Swarm's HA feature to configure multiple Swarm managers for a single cluster. These managers operate in an active/passive formation: a single Swarm manager is the primary, and all others are secondaries. Secondary managers operate as warm standbys, meaning they run in the background while the primary Swarm manager is active.

The secondary Swarm managers accept commands issued to the cluster, just as the primary does. However, any command received by a secondary is forwarded to the primary, where it is executed. If the primary Swarm manager fails, a new primary is elected from the surviving secondaries. When creating highly available Swarm managers, we should take care to distribute them over as many failure domains as possible. A failure domain is a part of the network that can be negatively affected if a critical device or service experiences problems.

Docker Network Containers

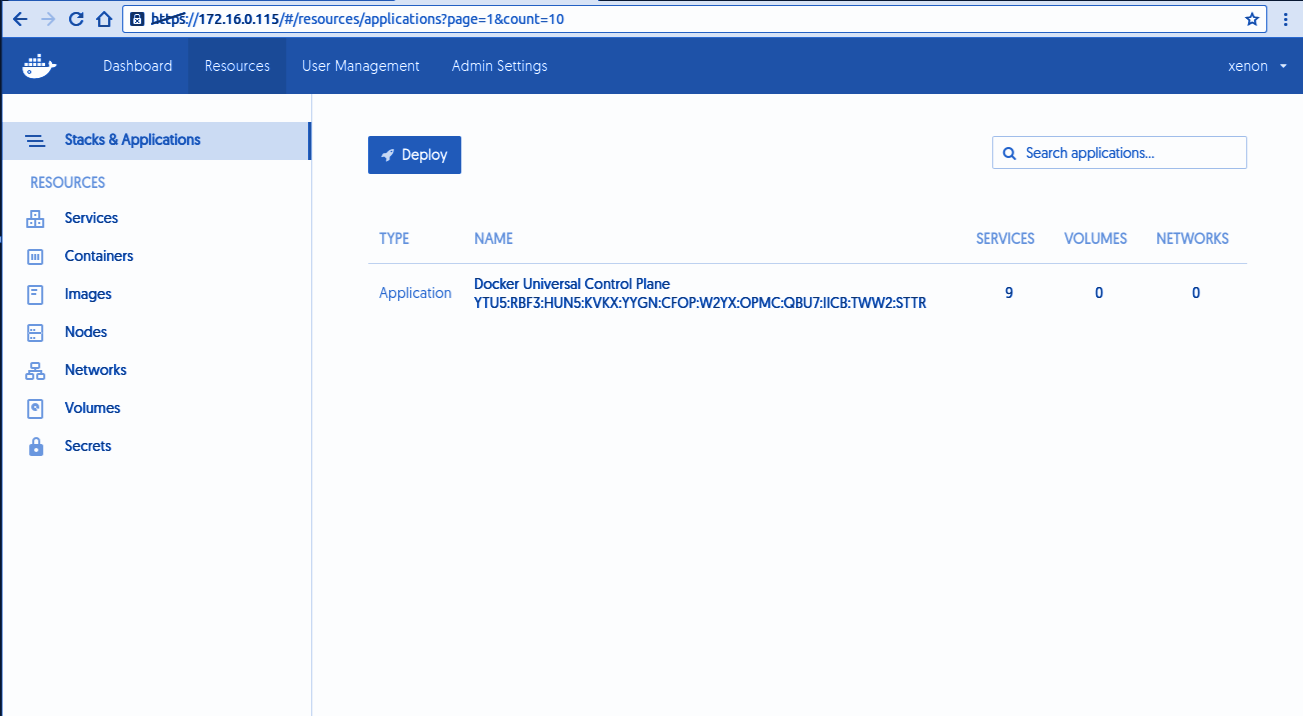

Docker container networks are overlay networks created across multiple Engine hosts. A container network needs a key-value store to maintain network configuration and state. This key-value store can be shared with the one used by the Swarm cluster's discovery service.

Docker Container for the Enterprise

Docker developed an Enterprise Edition (EE) for development and IT teams who build, ship, and run applications in production at scale. Docker describes EE as a certified solution that gives enterprises the most secure container platform in the industry for deploying any application. Docker EE can run on any infrastructure.

Docker EE Infrastructure and Plugins

Docker provides an integrated, tested platform for apps running on Linux or Windows operating systems and on cloud providers. Docker Certified Infrastructure, Containers, and Plugins are premium features available with Docker EE, with cooperative support from Docker.

- Certified Infrastructure - Provides an integrated environment for Linux, Windows Server 2016, and cloud providers such as AWS and Azure.

- Certified Containers - Provide trusted packaging, since these containers are built following security best practices.

- Certified Plugins - Provide networking and volume plugins that are easy to download and install into the Docker environment.

Deploying Application with Docker EE

Today, every app is dynamic and requires a security model defined for that app. Docker EE provides an integrated security framework with secure defaults. It still lets developers and IT change configurations and interfaces, so the two teams can collaborate easily. By securing the environment, Docker Enterprise Edition helps keep apps safe for the enterprise.

Docker For Microsoft Azure

Docker for Microsoft Azure provides a Docker-native solution that avoids operational complexity and does not add unneeded APIs to the Docker stack. Docker for Azure lets us interact with Docker directly, so we can focus on what matters: running workloads. This helps the team deliver more value to the business faster, with fewer details to keep in mind at once. The Linux distribution used by Docker for Azure is purpose-built and configured to run Docker on Azure. Everything from the kernel configuration to the networking stack is customized to make it a good place to run Docker; for instance, the kernel version must be compatible with the latest Docker functionality. With default settings, issues such as unexpected Azure logging behavior, or the Linux kernel killing memory-hungry processes, can occur at any time. Log rotation on the host is configured automatically, so logs cannot use up all of the disk space. Self-cleaning and self-healing properties are enabled by default. One example is a system prune service that automatically cleans up unused Docker resources, such as old images.

Deploying App on Docker for Microsoft Azure

- After logging in to the Azure portal, click the + (New) button and search for Web App for Linux.

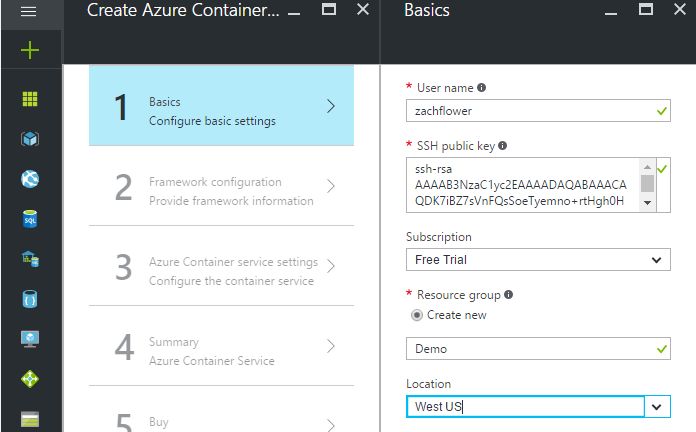

- In the search results, Azure Container Service appears as the first item. Clicking the Create button starts a simple, step-by-step setup process for the container cluster.

- Fill out the Basics settings required for the Docker cluster. The first field is the username of the administrator for the virtual machines inside the Docker Swarm cluster; this information should be specific to the user.

- In the next step, configure the orchestrator, which can be Docker Swarm or DC/OS, as needed.

- In the Service Settings, provide the number of agents; this number can be as high as 100. The number of masters depends on our configuration and needs.

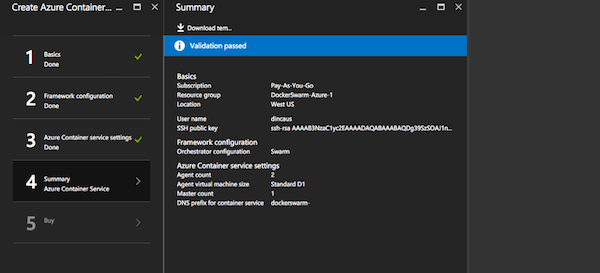

- After saving all this, it will show the Summary view.

- Once Docker Swarm has been created and deployed, we should connect to the master to make sure everything is working. The Docker Swarm resource should be shown on the dashboard; select the Deployments item in the left menu, under the Settings section.

- Among the deployment entries, choose the most recent one, which is the first entry in the list. On the right side, we will see information about the deployment, including the DNS name of the master and the command for connecting to the master via SSH.

Docker for AWS

Docker for AWS is simple to install, configure, and maintain. Setup is quick and is done with a CloudFormation template. Docker for AWS is tightly integrated with the Amazon infrastructure to deploy a secure Docker cluster spread over multiple availability zones. A high-performance Docker deployment lets us build, ship, and run portable apps in the cloud.

Deploying Docker on AWS

We have two options to deploy Docker for AWS:

- Use a pre-existing VPC

- Create a new VPC set up by Docker

Prerequisites for Deploying Docker on AWS

Log in to the AWS account, which must have the following permissions to use CloudFormation and create the objects:

- EC2 instances & Auto Scaling groups

- IAM profiles

- DynamoDB Tables

- SQS Queue

- VPC + subnets and security groups

- ELB

- CloudWatch Log Group

- SSH key in AWS, required to complete the Docker installation

- AWS account which supports EC2-VPC

Configuration for Docker on AWS

Docker for AWS is installed with a CloudFormation template that configures Docker in swarm mode by default. There are two ways to deploy Docker for AWS: using the AWS Management Console or using the AWS CLI. Both offer the following options:

KeyName - Pick the SSH key that will be used for SSH into the manager nodes.

InstanceType - The EC2 instance type for worker nodes.

Manager Instance Type - The EC2 instance type for manager nodes.

Cluster Size - The number of workers we need in the swarm (0-1000).

Manager Size - The number of managers in the swarm. One manager is fine for a testing environment. In production, however, a single manager gives no failover guarantees: if it fails, the swarm goes down.

Enable System Prune - Enable this if we want Docker for AWS to automatically clean up unused space on the swarm nodes. Pruning removes the following:

- Stopped containers

- Volumes not used by at least one container

- Dangling images

- Unused networks

Enable Cloudwatch Logs - Should be enabled if Docker needs to send container logs to CloudWatch. Defaults to yes.

Manager Disk Size - Size of Manager’s ephemeral storage volume in GiB (20 - 1024)
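When deploying with the AWS CLI instead of the console, these options are passed as CloudFormation stack parameters. The Python sketch below checks the ranges quoted above and builds the ParameterKey/ParameterValue list that aws cloudformation create-stack --parameters accepts, for example saved to a JSON file and passed as file://params.json. The parameter keys follow the option names above with spaces removed, which is an assumption; check them against the template you actually launch. The key pair name and instance types are placeholders.

```python
import json
import warnings

# Allowed ranges quoted in the option list above.
RANGES = {"ClusterSize": (0, 1000), "ManagerDiskSize": (20, 1024)}


def build_parameters(options: dict) -> list:
    """Validate numeric options and format them as CloudFormation parameters."""
    for key, (low, high) in RANGES.items():
        if key in options and not low <= int(options[key]) <= high:
            raise ValueError(f"{key} must be between {low} and {high}")
    if int(options.get("ManagerSize", 1)) == 1:
        # One manager has no failover: acceptable for testing only.
        warnings.warn("a single manager gives no failover guarantees", stacklevel=2)
    return [{"ParameterKey": key, "ParameterValue": str(value)} for key, value in options.items()]


params = build_parameters({
    "KeyName": "my-ssh-key",  # hypothetical key pair name
    "InstanceType": "t3.medium",
    "ManagerInstanceType": "t3.medium",
    "ClusterSize": 3,
    "ManagerSize": 3,
    "EnableSystemPrune": "yes",
    "EnableCloudWatchLogs": "yes",
    "ManagerDiskSize": 20,
})
print(json.dumps(params, indent=2))
```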

Installing Docker for AWS Using the AWS Management Console

First, sign in to the AWS Management Console. Then go to the Docker for AWS release page and click the Launch Stack button to start the deployment process.

Deploy App on Docker for AWS

Once the stack is running, we can start creating containers and services:

`$ docker run hello-world`

To run websites, ports exposed with -p are automatically exposed through the platform load balancer:

`$ docker service create --name nginx -p 80:80 nginx`

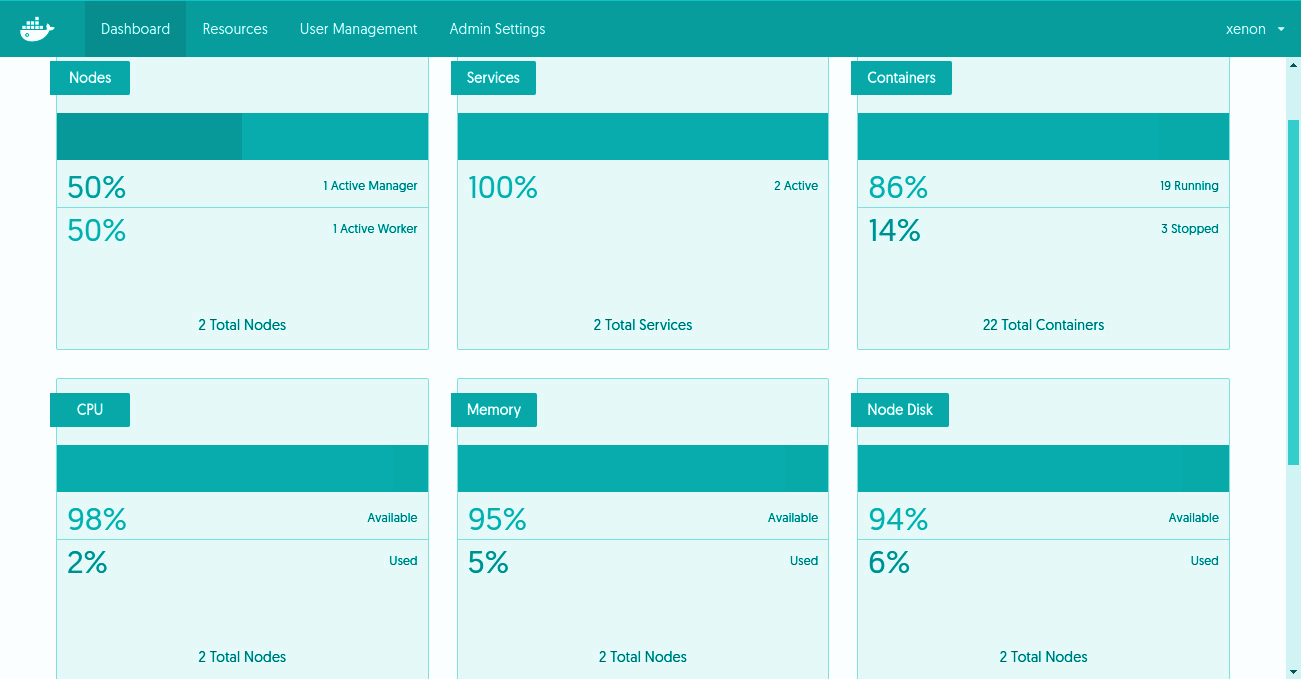

Docker Container Monitoring

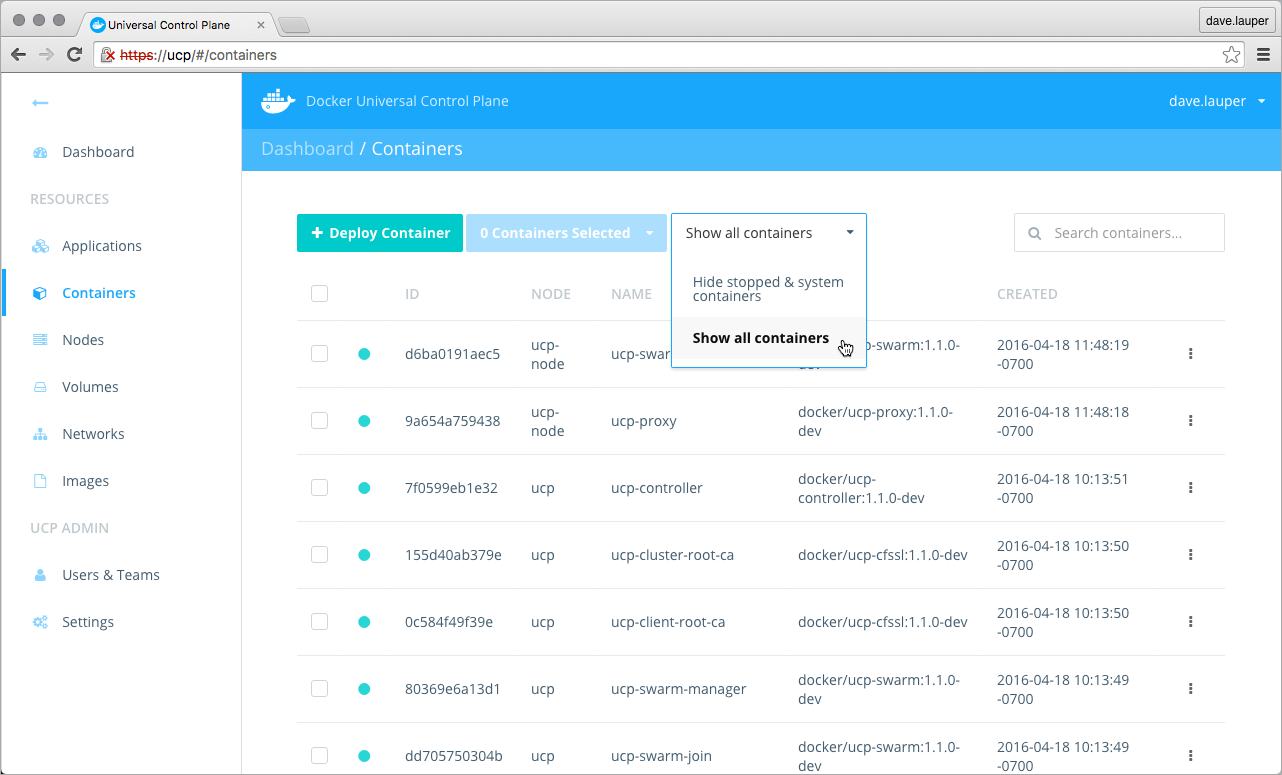

Docker Universal Control Plane (UCP) offers real-time monitoring of the cluster, along with metrics and logs for each container. Operating larger infrastructures also requires long retention times for logs. A monitoring and logging solution provides the following operational insights:

- Docker events auditing - Docker events provide a complete view of the container life cycle. By collecting events, we can see what happens to containers during (re)deployments to different nodes. Some containers are configured to restart automatically, and events can help us find out whether container processes crash frequently. If a container hits out-of-memory events, it may be wise to adjust its memory limits or ask the developers why this happened. Docker events also carry security-critical information about applications, such as:

- Version changes in application images

- Application shutdowns/auto restart

- Change in storage volumes or network settings

- Deletion of storage volumes

- Resources - Resource management is a significant advantage of running multi-node workloads with Docker on shared resources, and it requires resource limits for CPU, I/O, and memory. Some organizations are unable to determine the exact requirements of their Dockerized applications, mostly because they deployed those applications in other ways in the past. Using UCP to monitor the resources that containers actually use helps determine the right limits.

- Details of nodes and containers - Detailed metrics help optimize application resource usage. They let us drill down from a cluster view to a single container while troubleshooting or simply trying to understand operational details.

- Centralized log management - Logs are collected and shipped to an indexing engine, enabling full-text search, filtering, and analytics across all containers. The integrated functions in Logsene and its integration with Kibana make it easy to analyze logs from Docker EE.
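Event auditing can start from the CLI: docker events --format '{{json .}}' prints one JSON object per event. The Python sketch below counts out-of-memory kills, exits, and restarts per container from such lines, which is the kind of signal described above. The sample events are made up and trimmed to the fields the sketch reads. summarize_events is a hypothetical helper.

```python
import json
from collections import Counter

# Container actions worth alerting on.
WATCHED_ACTIONS = {"oom", "die", "restart"}


def summarize_events(lines):
    """Count watched container actions per container name."""
    counts = Counter()
    for line in lines:
        event = json.loads(line)
        if event.get("Type") != "container" or event.get("Action") not in WATCHED_ACTIONS:
            continue
        attributes = event.get("Actor", {}).get("Attributes", {})
        counts[(attributes.get("name", "<unknown>"), event["Action"])] += 1
    return counts


# Made-up sample in the shape of `docker events --format '{{json .}}'` output.
SAMPLE = [
    '{"Type": "container", "Action": "oom", "Actor": {"Attributes": {"name": "api"}}}',
    '{"Type": "container", "Action": "die", "Actor": {"Attributes": {"name": "api", "exitCode": "137"}}}',
    '{"Type": "container", "Action": "restart", "Actor": {"Attributes": {"name": "api"}}}',
    '{"Type": "network", "Action": "connect", "Actor": {"Attributes": {"name": "bridge"}}}',
]

for (name, action), n in sorted(summarize_events(SAMPLE).items()):
    print(f"{name}: {action} x{n}")
```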

Monitoring of Docker Using CLI

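The following commands can be used to view the status of Docker (replace <container-id> and <image-id> with real IDs):

- `sudo docker ps -a` - list all containers, including stopped ones

- `sudo docker images` - list local images

- `sudo docker network ls` - list networks

- `sudo docker service ls` - list swarm services

- `sudo docker node ls` - list swarm nodes

- `sudo docker info` - show system-wide information

- `sudo docker logs <container-id>` - show a container's logs

- `sudo docker rm <container-id>` - remove a container

- `sudo docker rmi <image-id>` - remove an image

How Can XenonStack Help You?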

XenonStack Docker Consulting Services For Enterprises and Startups

Microservices With Docker

Now build your Microservices in a new and easy way with Docker containers. Run each service inside a container and combine those containers to form a complex Microservices application. Set up your Continuous Integration pipeline to build and deploy your Microservices application on Docker. Drive the application from testing to staging and into production without having to tweak any code.

Managed Container Solutions

Managed Container Solutions offer Docker hosting services. Deploy your container application within seconds by setting up your orchestration platform.

Hadoop and Spark on Docker

Deploy, manage, and monitor your Big Data infrastructure on Docker. Run large-scale, multi-tenant Hadoop clusters and Spark jobs on Docker with proper resource utilization and security.

Docker Toolbox For Data Science

Set up your Docker data science environment with Deep Learning, Jupyter Notebook, TensorFlow, GPUs, and Docker containers.
