Introduction to Docker BuildKit

BuildKit is the next-generation container image builder, which helps make image builds more efficient, secure, and fast. It has been integrated into Docker since release v18.06. BuildKit is part of the Moby project and was developed from past learnings and failures, with the following goals for the image build process:

- Concurrent

- Cache-efficient

- Better support for storage management
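On Docker releases where BuildKit is not already the default builder, it can be switched on for a single invocation by setting the `DOCKER_BUILDKIT=1` environment variable. The helper below is a hypothetical sketch: `buildkit_build_command` only assembles the environment and argument list, and `run_build` actually calls Docker, which assumes a local Docker installation.

```python
import os
import subprocess


def buildkit_build_command(context=".", tag=None):
    """Return (env, argv) for a `docker build` run with BuildKit enabled.

    Hypothetical helper for illustration; DOCKER_BUILDKIT=1 is the
    environment variable Docker reads to select the BuildKit builder.
    """
    env = dict(os.environ)
    env["DOCKER_BUILDKIT"] = "1"  # opt in to BuildKit for this invocation only
    argv = ["docker", "build"]
    if tag is not None:
        argv += ["-t", tag]
    argv.append(context)
    return env, argv


def run_build(context=".", tag=None):
    """Invoke Docker for real (requires a local Docker installation)."""
    env, argv = buildkit_build_command(context, tag)
    return subprocess.run(argv, env=env, check=True)


if __name__ == "__main__":
    env, argv = buildkit_build_command(".", tag="myapp:dev")
    print("DOCKER_BUILDKIT=" + env["DOCKER_BUILDKIT"], " ".join(argv))
```

Keeping the command construction separate from execution makes it easy to inspect or log the exact build invocation before running it.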

It supports multiple export formats, such as OCI images, along with frontends such as the Dockerfile, and provides features such as efficient caching and parallel build operations. It needs only a container runtime to execute; the currently supported runtimes are containerd and runc. Before getting to the critical components of the BuildKit project, let's take a look at the features it provides.

What are the key features?

The key features of BuildKit are listed below:

- Automatic garbage collection

- Extendable Frontend formats

- Concurrent dependency resolution

- Efficient instruction caching

- Build cache import/export

- Nested build job invocations

- Distributable workers

- Multiple output formats

- Pluggable architecture

- Execution without root privileges
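To illustrate concurrent dependency resolution, the toy scheduler below groups the steps of a hypothetical multi-stage build into waves: every step in a wave already has all of its dependencies satisfied, so the steps in one wave can run in parallel. It uses Python's standard `graphlib` module (Python 3.9+) and models the idea only; it is not BuildKit's actual solver.

```python
from graphlib import TopologicalSorter


def parallel_waves(graph):
    """Group build steps into waves of mutually independent steps.

    `graph` maps each step to the set of steps it depends on.
    Steps within one wave can execute concurrently.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies already finished
        waves.append(ready)
        for step in ready:
            sorter.done(step)  # unlocks the steps that depended on it
    return waves


# Hypothetical multi-stage build: two independent stages feed the final image.
build_graph = {
    "base": set(),
    "deps-frontend": {"base"},
    "deps-backend": {"base"},
    "build-frontend": {"deps-frontend"},
    "build-backend": {"deps-backend"},
    "final-image": {"build-frontend", "build-backend"},
}

if __name__ == "__main__":
    for i, wave in enumerate(parallel_waves(build_graph)):
        print(i, wave)
```

A sequential builder would run these six steps one after another, while the DAG view needs only four waves because the frontend and backend stages do not depend on each other.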


What is the architecture?

BuildKit can run as a standalone daemon or alongside containerd. It consists of two key components: the build daemon, buildkitd, and the CLI tool, buildctl, which is used to drive buildkitd.

Low-Level Build (LLB)

LLB is a low-level build definition format. It defines a content-addressable dependency graph that can be used to assemble complex build definitions. All of the caching and execution work happens in the low-level builder. Its caching module was completely rewritten relative to the legacy builder's caching module to support new features such as remote caching and build cache import/export.
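In a content-addressable graph, each vertex is identified by a digest of its own operation plus the digests of its inputs, so an edit invalidates exactly the vertices downstream of it while everything else keeps its cache key. The toy model below shows that property with SHA-256 over strings. BuildKit's real LLB is a protobuf-encoded graph, so the `Vertex` type and the operation strings here are hypothetical simplifications.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Vertex:
    """Hypothetical, simplified stand-in for an LLB operation."""
    op: str            # description of the operation, e.g. a source or exec step
    inputs: tuple = ()  # upstream vertices this operation depends on

    def digest(self) -> str:
        # Hash the operation together with the digests of all inputs,
        # so identical subgraphs always produce identical digests.
        h = hashlib.sha256()
        h.update(self.op.encode())
        for dep in self.inputs:
            h.update(b"\x00")  # separator between fields
            h.update(dep.digest().encode())
        return h.hexdigest()


base = Vertex("image docker.io/library/golang:1.22")
src_v1 = Vertex("local context (main.go v1)")
src_v2 = Vertex("local context (main.go v2)")
deps = Vertex("exec go mod download", (base,))

build_v1 = Vertex("exec go build", (deps, src_v1))
build_v2 = Vertex("exec go build", (deps, src_v2))

if __name__ == "__main__":
    # `deps` is identical in both graphs, so its cache entry can be reused;
    # only the build step downstream of the edited source gets a new digest.
    print(deps.digest()[:12])
    print(build_v1.digest() != build_v2.digest())
```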

The build cache can also be exported to a registry and later retrieved from that remote location by any host. BuildKit expects a frontend, which translates a build definition into LLB that BuildKit can then execute. A frontend could be, for example, a human-readable Dockerfile containing the set of instructions to pass to BuildKit. We can say that LLB is to Dockerfile what LLVM IR is to C.
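As a sketch of how the Dockerfile frontend and the registry cache fit together, the hypothetical helper below assembles a `buildctl build` invocation. `--frontend dockerfile.v0` selects the Dockerfile frontend that compiles the Dockerfile into LLB, and `--export-cache`/`--import-cache` with `type=registry` push and pull the build cache. The registry references are placeholder assumptions.

```python
import shlex


def buildctl_command(image_ref, cache_ref, context="."):
    """Assemble a `buildctl build` call (hypothetical helper).

    The Dockerfile frontend turns the Dockerfile into LLB; the build
    cache is exported to, and imported from, a registry reference.
    """
    return [
        "buildctl", "build",
        "--frontend", "dockerfile.v0",         # Dockerfile -> LLB
        "--local", f"context={context}",       # build context directory
        "--local", f"dockerfile={context}",    # directory holding the Dockerfile
        "--output", f"type=image,name={image_ref},push=true",
        "--export-cache", f"type=registry,ref={cache_ref}",
        "--import-cache", f"type=registry,ref={cache_ref}",
    ]


if __name__ == "__main__":
    argv = buildctl_command(
        image_ref="registry.example.com/team/app:latest",      # placeholder
        cache_ref="registry.example.com/team/app:buildcache",  # placeholder
    )
    print(shlex.join(argv))
```

Because the cache lives under its own registry reference, a fresh CI host with no local state can import it before building.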


BuildKitd and Buildctl

The buildkitd daemon supports two worker backends: OCI (runc) and containerd. By default the OCI (runc) backend is used, but the containerd worker backend can be used instead. More worker backends may be supported in the future.

What are the BuildKit performance examples?

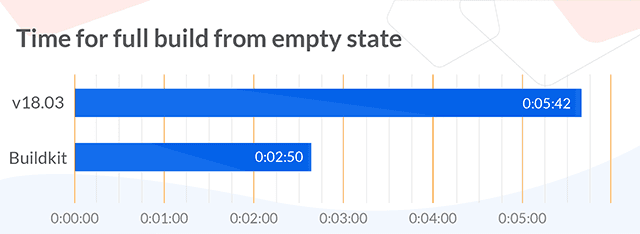

Using the latest caching and parallel execution feature in it, let's check out how much difference it makes concerning the traditional docker build. Fig 4a - Based on the docker build from scratch, the results are 2.5x faster build of the sample project.

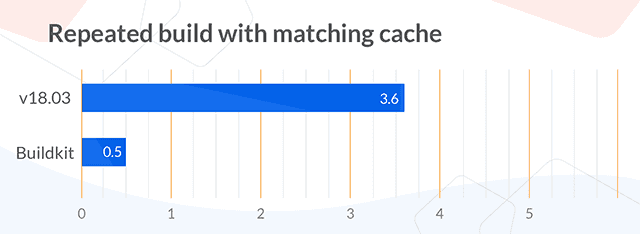

Fig 4a - Based on the docker build from scratch, the results are 2.5x faster build of the sample project.  Fig 4b - Rerunning the same build with local cache the speed is 7x faster.

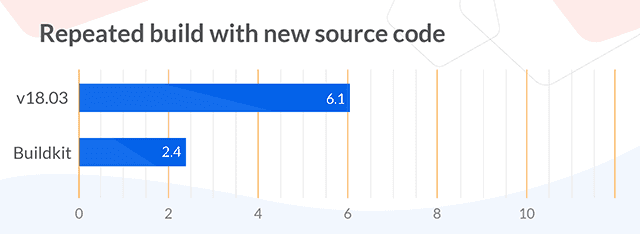

Fig 4b - Rerunning the same build with local cache the speed is 7x faster.  Fig 4c - Repeatable builds with new source code leads to 2.5 fx faster build speed.

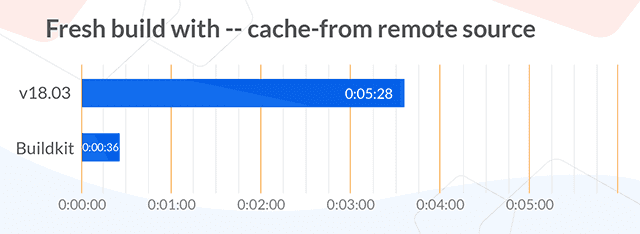

Fig 4c - Repeatable builds with new source code leads to 2.5 fx faster build speed.  Fig 4d - Using the new build caching --cache-from remote source the caching is 9x faster. Note that in the above scenario there was no cache on local and it's getting the cached content from the remote source.

Fig 4d - Using the new build caching --cache-from remote source the caching is 9x faster. Note that in the above scenario there was no cache on local and it's getting the cached content from the remote source.What are the various use cases?

The use cases of BuildKit are listed below:

Building Docker Images in Kubernetes

Currently, building images in a Kubernetes cluster requires either running a pod with a /var/run/docker.sock hostPath mount, so that it shares the Docker socket with the host, or using the docker:dind image as the base image for the build process. Neither approach is secure. BuildKit, on the other hand, works in rootless mode: it can be executed as a non-root user, although the pod's securityContext still needs to be defined.

Custom Outputs

BuildKit's usefulness extends beyond building images. With an output flag, the resulting binary (or any other build result) can be written to the local filesystem. For example, even without a Go environment set up on our system, a Dockerfile that defines the build environment can compile a binary and export it locally, where other steps of the build process can use it. The output feature was integrated in version v19.03 beta.
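A minimal sketch of the Go example above, assuming a hypothetical Go module (go.mod and main.go) at the root of the build context: the Dockerfile compiles the binary in a golang image and copies it into an empty `scratch` stage, and `docker build --output type=local,dest=<dir>` writes that stage's files to a local directory instead of producing an image. The Python helpers only write the Dockerfile and assemble the command; the image tag and file names are assumptions.

```python
from pathlib import Path

# Hypothetical Dockerfile: build with a Go toolchain image, then copy only
# the compiled binary into an empty final stage that gets exported.
DOCKERFILE = """\
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

FROM scratch AS export
COPY --from=build /out/app /
"""


def write_dockerfile(context):
    """Write the example Dockerfile into the build context directory."""
    path = Path(context) / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path


def local_output_command(context, dest):
    """Return argv for a BuildKit build that exports files to `dest`.

    The local exporter copies the final stage's filesystem to `dest`
    on the host rather than creating an image.
    """
    return ["docker", "build", "--output", f"type=local,dest={dest}", str(context)]
```

Calling `write_dockerfile(".")` and then running `local_output_command(".", "out")` with `DOCKER_BUILDKIT=1` set should leave the binary at `out/app` on the host, provided the Go project itself builds.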

Concurrent build process and execution

BuildKit uses LLB, a DAG-style low-level intermediate language, which enables accurate dependency analysis and cache invalidation. It also identifies independent vertices in the graph and runs them in parallel, so independent instructions from any frontend can execute concurrently.

Remote caching

This feature upgrades the legacy --cache-from option, which had problems such as requiring images to be pre-pulled and lacking support for multi-stage builds. As of the v19.03 release, the cache can be pulled from a remote source, and only the cacheable content of the remote image is fetched instead of the whole image.

Frontend Support

Besides the Dockerfile frontend, BuildKit LLB can also be compiled from non-Dockerfile sources. Several new frontend languages have been proposed, such as:

- Buildpacks

- Mockerfile

- Gockerfile
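Frontends are distributed as container images, and a Dockerfile can name the frontend that should parse it with a `# syntax=` parser directive on its first line. The sketch below uses a hypothetical helper, `set_frontend`, to prepend or replace that directive. `docker/dockerfile:1` is the official Dockerfile frontend image; any other image name passed in is an assumption standing in for an alternative frontend.

```python
def set_frontend(dockerfile_text, frontend_image):
    """Return the text with its first line set to `# syntax=<image>`.

    An existing syntax directive on the first line is replaced, so the
    file names exactly one frontend. (Hypothetical helper.)
    """
    lines = dockerfile_text.splitlines()
    directive = f"# syntax={frontend_image}"
    # Parser directives are case-insensitive and may contain spaces.
    if lines and lines[0].replace(" ", "").lower().startswith("#syntax="):
        lines[0] = directive
    else:
        lines.insert(0, directive)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = set_frontend("FROM alpine\nRUN echo hello\n", "docker/dockerfile:1")
    print(text)
```

Pinning the frontend this way lets a Dockerfile opt into newer syntax without upgrading the Docker engine, since BuildKit pulls the named frontend image at build time.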

A Holistic Strategy

BuildKit is very useful for building Dockerfiles more efficiently and securely, and it will be great to see it in action as more features arrive. There is still more work to be done to support the Windows container build process. To learn more about containers, we advise taking the following steps:

- Understand How To Deploy PostgreSQL with Docker on Kubernetes

- Learn more about Docker Architecture and Security
