What are the Common Use Cases of IaC using Terraform?

The common use cases are listed below:

Heroku App Setup

Heroku is a PaaS for hosting web applications. Developers create an application and then attach add-ons such as a database or an email provider. One of its best features is the ability to scale the number of dynos or workers elastically. Terraform can codify the setup required for a Heroku application, ensuring that all necessary add-ons are available. It can go even further, for example:

- Configuring DNSimple to set a CNAME

- Setting up Cloudflare as a CDN for the app
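
The following is a minimal sketch of this setup in Terraform. It makes these assumptions:

- The Heroku and DNSimple providers are already authenticated through environment variables.
- The app name, domain, zone, add-on plan and dyno size are hypothetical placeholders. Heroku's plan names change over time.
- The Cloudflare CDN step is omitted.

The `heroku_domain` resource returns the DNS target that Heroku expects. That value is passed straight into the CNAME record, so Terraform creates the records in the right order.

```hcl
terraform {
  required_providers {
    heroku = {
      source = "heroku/heroku"
    }
    dnsimple = {
      source = "dnsimple/dnsimple"
    }
  }
}

# The Heroku application itself (hypothetical name).
resource "heroku_app" "web" {
  name   = "example-terraform-app"
  region = "us"
}

# Attach a Postgres add-on; the plan name is an assumption.
resource "heroku_addon" "database" {
  app_id = heroku_app.web.id
  plan   = "heroku-postgresql:essential-0"
}

# Scale the web process by changing "quantity".
# Assumes code has already been deployed so a "web" process type exists.
resource "heroku_formation" "web" {
  app_id   = heroku_app.web.id
  type     = "web"
  quantity = 2
  size     = "standard-1x"
}

# Register a custom domain; Heroku returns the CNAME target for it.
resource "heroku_domain" "www" {
  app_id   = heroku_app.web.id
  hostname = "www.example.com"
}

# Point the domain at Heroku with a CNAME record in DNSimple.
# Recent DNSimple providers call this dnsimple_zone_record; older ones used dnsimple_record.
resource "dnsimple_zone_record" "www" {
  zone_name = "example.com"
  name      = "www"
  type      = "CNAME"
  value     = heroku_domain.www.cname
  ttl       = 3600
}
```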

Multi-Tier Applications
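
The two-tier pattern described in this section can be sketched in Terraform as follows. The sketch assumes an already configured AWS provider and the default VPC. The AMI ID, database settings and resource names are hypothetical.

The web tier reads the database address. That reference gives Terraform an implicit dependency, so the database is created first. `web_count` is the single value to change when scaling the tier.

```hcl
variable "ami_id" {
  description = "AMI for the web tier (hypothetical; supply your own)"
  type        = string
}

variable "web_count" {
  description = "Number of web servers; change this one value to scale the tier"
  type        = number
  default     = 2
}

variable "db_password" {
  type      = string
  sensitive = true
}

# Database tier.
resource "aws_db_instance" "db" {
  identifier          = "example-app-db"
  engine              = "postgres"
  instance_class      = "db.t3.micro"
  allocated_storage   = 20
  username            = "app"
  password            = var.db_password
  skip_final_snapshot = true
}

# Web tier. Referencing aws_db_instance.db.address creates an implicit
# dependency, so Terraform creates the database before these instances.
resource "aws_instance" "web" {
  count         = var.web_count
  ami           = var.ami_id
  instance_type = "t3.micro"

  user_data = <<-EOF
    #!/bin/bash
    echo "DB_HOST=${aws_db_instance.db.address}" >> /etc/environment
  EOF

  tags = {
    Name = "web-${count.index}"
  }
}
```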

One of the most pervasive patterns is the N-tier architecture. A widespread 2-tier architecture is a pool of web servers that uses a database tier. More tiers can be added for API servers, caching servers, routing meshes, and so on. This pattern is used to scale tiers independently and to provide a separation of concerns.

Terraform is an optimal tool for building and managing these infrastructures. Each tier is described as a collection of resources, and Terraform automatically handles the dependencies between tiers. It ensures that the database tier is available before the web servers are started, and that the load balancers are aware of the web nodes.

Because the creation and provisioning of a resource are codified and automated, each tier can be scaled by modifying a single count configuration value. This makes scaling elastically with load trivial.

Self-Service Clusters

Beyond a certain organizational size, managing a large and growing infrastructure becomes very challenging for a centralized operations team. It becomes more attractive to offer “self-serve” infrastructure instead: product teams manage their own infrastructure using tooling provided by the central operations team.

With Terraform, the knowledge of how to build and scale a service can be codified in a configuration. These configurations can be shared within an organization. Customer teams can then use them as a black box, with Terraform as the tool for managing their services.
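
From a product team's point of view, consuming such a shared configuration might look like the sketch below. The Git URL, the module inputs (`service_name`, `instance_count`) and the `endpoint` output are hypothetical. The point is that the team sets a few inputs and never edits the module's internals.

```hcl
# A product team consumes a centrally maintained module as a black box.
# The source URL, inputs, and outputs are hypothetical.
module "orders_service" {
  source = "git::https://git.example.com/platform/terraform-modules.git//web-service?ref=v1.2.0"

  service_name   = "orders"
  instance_count = 3
}

# The team only sees what the platform team chose to expose as outputs.
output "orders_endpoint" {
  value = module.orders_service.endpoint
}
```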


Terraform Comparison with Chef, Ansible, Puppet and Salt

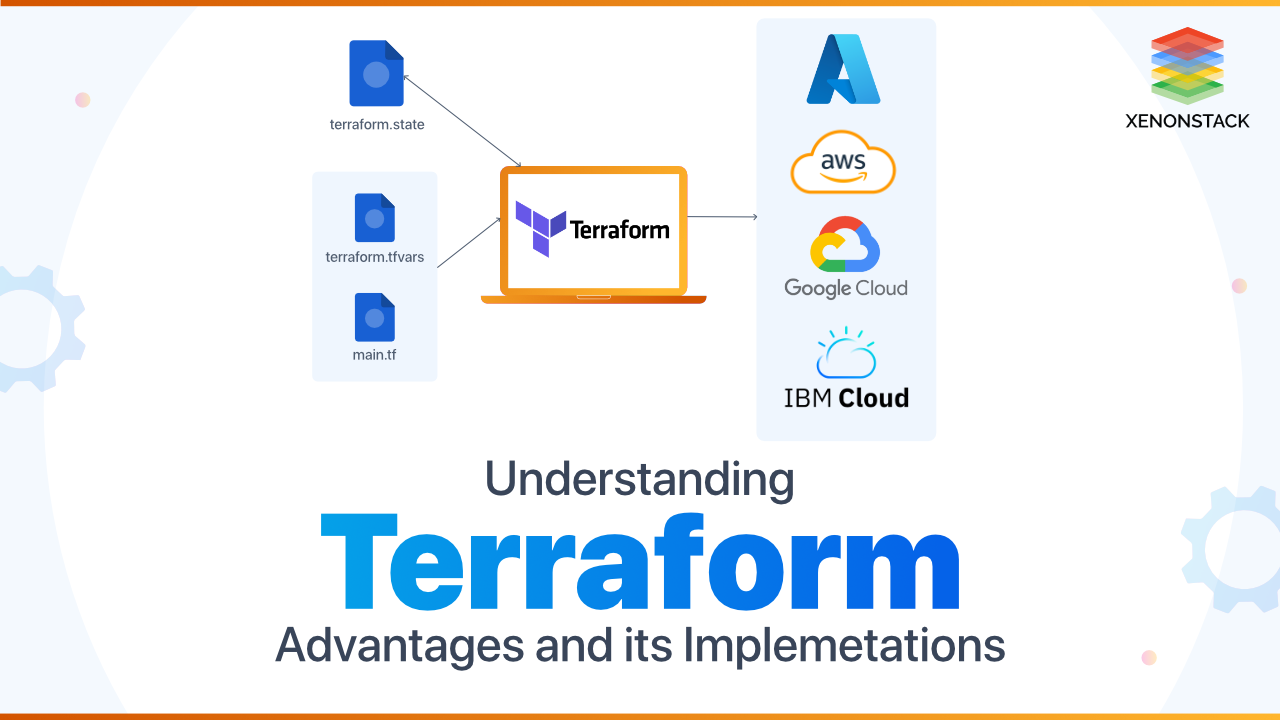
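
Terraform can create a machine and then hand it to a configuration management tool through a provisioner, combining the two kinds of tools discussed in this section. The sketch below makes these assumptions:

- Ansible is installed where Terraform runs.
- The instance is reachable over SSH.
- The AMI variable, key pair name, SSH user, key path and `site.yml` playbook are hypothetical.

HashiCorp's documentation describes provisioners as a last resort, so prebuilt images or cloud-init are often preferred.

```hcl
variable "ami_id" {
  type = string
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = "t3.micro"
  key_name      = "example-key" # hypothetical EC2 key pair

  # Runs on the machine executing Terraform once the instance exists.
  # The trailing comma makes Ansible treat the IP as an inline inventory.
  # In practice you may need to wait until SSH is ready before this succeeds.
  provisioner "local-exec" {
    command = "ansible-playbook -u ubuntu --private-key ~/.ssh/example-key.pem -i '${self.public_ip},' site.yml"
  }
}
```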

Configuration management tools install and manage software on a machine that already exists. Terraform is not a configuration management tool in that sense. It focuses on bootstrapping and initializing resources.

Configuration management tools can still be used alongside Terraform to configure things inside a virtual machine. Through provisioners, Terraform can invoke a configuration management tool to set up a resource once it has been created.

When applications run in Docker containers, the containers are self-sufficient and contain the application's whole configuration, so tools like Chef, Puppet and Ansible are not needed inside them. Infrastructure still has to be managed, because the containers run on top of a server or virtual machine. Terraform is used to create the infrastructure that the containers run on.

Chef, Ansible, Puppet and similar tools are also used for Infrastructure as Code (IaC). Terraform is especially well suited to it because it also maintains the state of the infrastructure.

How to implement Terraform using AWS Services?

The following steps implement Terraform using AWS:

Setting up AWS Account

Terraform can create infrastructure across many types of cloud providers, such as AWS, Google Cloud, Azure, DigitalOcean and many others. This guide uses AWS, one of the most popular cloud infrastructure providers, whose cloud hosting services are reliable and scalable.

Installations

Find the Terraform package for your system and download it. It is distributed as a zip archive: unzip the package and place the terraform binary in a directory on your PATH. After installing, verify the installation by opening a new terminal session and checking that the terraform command is available, for example by running terraform -help.

Deploying a Single Server

Terraform code is written in HCL (HashiCorp Configuration Language) in files with the extension ".tf". HCL is a declarative language: you describe the infrastructure you want, and Terraform works out how to create it. Terraform can create infrastructure across different platforms or providers, such as AWS, Google Cloud, Azure, DigitalOcean and many others.

The first step in using Terraform is configuring the provider. The next step is to launch an instance and run a web server on it.
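
The following is a minimal sketch of these two steps. It assumes AWS credentials are available in the environment. It also assumes an Ubuntu AMI, which ships `busybox`, is passed in through the hypothetical `ami_id` variable. The region, names, provider version constraint and port 8080 are illustrative choices.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

# Step 1: configure the provider (region is an arbitrary choice).
provider "aws" {
  region = "us-east-2"
}

variable "ami_id" {
  description = "Ubuntu AMI ID for the chosen region (hypothetical input)"
  type        = string
}

# Allow inbound HTTP on the port the web server listens on.
resource "aws_security_group" "web" {
  name = "example-single-web"

  ingress {
    from_port   = 8080
    to_port     = 8080
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# Step 2: launch one instance that serves a static page with busybox.
resource "aws_instance" "web" {
  ami                    = var.ami_id
  instance_type          = "t3.micro"
  vpc_security_group_ids = [aws_security_group.web.id]

  user_data = <<-EOF
    #!/bin/bash
    echo "Hello, World" > index.html
    nohup busybox httpd -f -p 8080 &
  EOF

  tags = {
    Name = "example-single-web"
  }
}

output "public_ip" {
  value = aws_instance.web.public_ip
}
```

To create the instance, run terraform init, terraform plan and terraform apply in the directory. Once the instance has booted, the page should be reachable at the `public_ip` output on port 8080.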

Deploying a Cluster of Web Servers

Running a single server is a good start, but a single server is a single point of failure. If the server crashes or is overwhelmed by heavy traffic, users can no longer access the site.

To eliminate this risk, run a cluster of servers. The cluster routes traffic around servers that go down and adjusts its size up or down based on traffic. Managing such a cluster manually is a lot of work. Fortunately, AWS can take care of this with an Auto Scaling Group (ASG).
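
The following is a minimal ASG sketch. It assumes the AWS provider is configured, the cluster runs in the default VPC, and `ami_id` (hypothetical) points to an Ubuntu image. A launch template describes each server, and the ASG keeps the instance count between `min_size` and `max_size`.

```hcl
variable "ami_id" {
  type = string
}

# Look up the default VPC and its subnets so the ASG can span them.
data "aws_vpc" "default" {
  default = true
}

data "aws_subnets" "default" {
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.default.id]
  }
}

resource "aws_security_group" "instance" {
  name   = "example-asg-instance"
  vpc_id = data.aws_vpc.default.id

  ingress {
    from_port   = 8080
    to_port     = 8080
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# Template describing how each server in the cluster is launched.
resource "aws_launch_template" "web" {
  name_prefix            = "example-web-"
  image_id               = var.ami_id
  instance_type          = "t3.micro"
  vpc_security_group_ids = [aws_security_group.instance.id]

  # Launch templates expect base64-encoded user data.
  user_data = base64encode(<<-EOF
    #!/bin/bash
    echo "Hello, World" > index.html
    nohup busybox httpd -f -p 8080 &
  EOF
  )
}

# Keeps between min_size and max_size instances running across the subnets
# and replaces instances that fail health checks.
resource "aws_autoscaling_group" "web" {
  name                = "example-web-asg"
  min_size            = 2
  max_size            = 10
  vpc_zone_identifier = data.aws_subnets.default.ids

  launch_template {
    id      = aws_launch_template.web.id
    version = "$Latest"
  }

  tag {
    key                 = "Name"
    value               = "example-web"
    propagate_at_launch = true
  }
}
```

Adjusting the cluster size based on traffic also requires a scaling policy (for example `aws_autoscaling_policy`), which is omitted here.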

Deploying a Load Balancer
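
The following sketch puts an Application Load Balancer (one of the Elastic Load Balancing types) in front of an Auto Scaling group. It makes these assumptions:

- AWS provider 5.x.
- An existing ASG whose instances serve HTTP on port 8080 and accept traffic from the load balancer.
- Hypothetical `vpc_id`, `subnet_ids` and `asg_name` inputs.

The listener forwards port 80 to a target group. `aws_autoscaling_attachment` keeps the group's instances registered as the ASG scales.

```hcl
variable "vpc_id" {
  type = string
}

variable "subnet_ids" {
  type = list(string)
}

variable "asg_name" {
  description = "Name of an existing Auto Scaling group serving HTTP on port 8080"
  type        = string
}

resource "aws_security_group" "alb" {
  name   = "example-alb"
  vpc_id = var.vpc_id

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # Terraform removes the default egress rule, so allow outbound traffic
  # for forwarding requests and running health checks.
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_lb" "web" {
  name               = "example-web-alb"
  load_balancer_type = "application"
  subnets            = var.subnet_ids
  security_groups    = [aws_security_group.alb.id]
}

resource "aws_lb_target_group" "web" {
  name     = "example-web-tg"
  port     = 8080
  protocol = "HTTP"
  vpc_id   = var.vpc_id

  health_check {
    path    = "/"
    matcher = "200"
  }
}

# Forward incoming HTTP traffic to the target group.
resource "aws_lb_listener" "http" {
  load_balancer_arn = aws_lb.web.arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.web.arn
  }
}

# Register the ASG's instances with the target group.
resource "aws_autoscaling_attachment" "web" {
  autoscaling_group_name = var.asg_name
  lb_target_group_arn    = aws_lb_target_group.web.arn
}

output "alb_dns_name" {
  value = aws_lb.web.dns_name
}
```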

One more problem must be solved before launching the ASG. With many instances, a load balancer is needed to distribute traffic across all of them. Creating a load balancer that is highly available and scalable is a lot of work, but AWS can take care of it with an Elastic Load Balancer (ELB).

Clean Up

After experimenting with Terraform, remove all the created resources so that AWS doesn't charge for them. Terraform keeps track of the resources it created, so cleanup is a breeze: the terraform destroy command removes them.

What is the Command-Line Interface for Terraform?

The command-line interface (CLI) is used to control Terraform. Its commands include:

- apply - Builds or changes infrastructure

- console - Opens an interactive console for evaluating expressions

- destroy - Destroys Terraform-managed infrastructure

- fmt - Rewrites configuration files into canonical format

- get - Downloads and installs modules for the configuration

- graph - Creates a visual graph of Terraform resources

- import - Imports existing infrastructure into Terraform

- init - Initializes a new or existing Terraform configuration

- output - Reads an output from a state file

- plan - Generates and shows an execution plan

- providers - Prints a tree of the providers used in the configuration

- push - Uploads this Terraform module to Terraform Enterprise to run (removed in Terraform 0.12)

- refresh - Updates the local state file against real resources

- show - Inspects Terraform state or a plan

- taint - Manually marks a resource for recreation

- untaint - Manually unmarks a resource as tainted

- validate - Validates the Terraform files

- version - Prints the Terraform version

- workspace - Manages workspaces

What are the Best Practices of Infrastructure as Code?

The best practices for Infrastructure as Code are:

Code Structure

Terraform code is easier to work with when it is split logically across several files, like this:

- main.tf - calls modules, locals, and data sources to create all resources

- variables.tf - contains declarations of the variables used in main.tf

- outputs.tf - contains outputs from the resources created in main.tf

- terraform.tfvars - passes values to the variables
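
As a sketch, a small module split this way might contain the following. Each comment marks the file the lines belong to. The variable names and the AMI ID are placeholders.

```hcl
# variables.tf
variable "instance_count" {
  description = "Number of EC2 instances"
  type        = number
  default     = 1
}

variable "ami_id" {
  description = "AMI to launch"
  type        = string
}

# main.tf
resource "aws_instance" "this" {
  count         = var.instance_count
  ami           = var.ami_id
  instance_type = "t3.micro"
}

# outputs.tf
output "instance_ids" {
  description = "IDs of the created instances"
  value       = aws_instance.this[*].id
}

# terraform.tfvars (plain assignments, loaded automatically)
instance_count = 2
ami_id         = "ami-0123456789abcdef0" # placeholder ID
```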

Remote Backend

Use a remote backend such as S3 to store tfstate files. Remote backends can provide two main features: state locking and remote state storage. Locking prevents two executions from running against the same state at the same time. Remote state storage lets you keep your state in a remote yet accessible location.
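
The following sketches an S3 backend with DynamoDB-based locking. It assumes the bucket already exists, along with a DynamoDB table that has a string partition key named `LockID`. All names are hypothetical. Backend blocks cannot reference variables, so these values are literal.

```hcl
terraform {
  backend "s3" {
    bucket         = "example-terraform-state" # hypothetical bucket
    key            = "prod/network/terraform.tfstate"
    region         = "us-east-1"
    encrypt        = true
    dynamodb_table = "example-terraform-locks" # hypothetical lock table
  }
}
```

After adding the block, run terraform init so Terraform can configure the backend and offer to migrate any existing local state.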

Naming Conventions

This practice is described below:

General Conventions

Use _ (underscore) instead of - (dash) in all resource names, data source names, variable names, and outputs. Beware that existing cloud resources have many hidden restrictions in their naming conventions. Only use lowercase letters and numbers.

Resource and Data Source Arguments

Do not repeat the resource type in the resource name, either partially or completely:

Good: resource "aws_route_table" "public" {}

Bad: resource "aws_route_table" "public_route_table" {}

Include the tags argument, if the resource supports it, as the last real argument. Follow it with depends_on and lifecycle if necessary. Separate each of these with a single empty line.
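
The following sketches the recommended layout, using the good route table name from the example and hypothetical VPC and gateway resources. The explicit `depends_on` is only there to show where it belongs. A route that referenced the gateway would create the same dependency implicitly.

```hcl
resource "aws_vpc" "this" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_internet_gateway" "this" {
  vpc_id = aws_vpc.this.id
}

resource "aws_route_table" "public" {
  vpc_id = aws_vpc.this.id

  # tags is the last real argument.
  tags = {
    Name = "public"
  }

  # Meta-arguments follow, each separated by one empty line.
  depends_on = [aws_internet_gateway.this]

  lifecycle {
    create_before_destroy = true
  }
}
```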

README

Generate a README for each module that documents its input and output variables, for example:

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| instance_count | Number of EC2 instances | String | 1 | NO |
| welcome_page | Content to be displayed at home page | String | - | YES |
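
Tables like this are usually generated from the module's variable declarations, for example with the third-party terraform-docs tool. Declarations that would produce the example table might look like this sketch. `string` is kept for `instance_count` only to match the table; `number` would be the more natural type for a count. A variable with no default is reported as required.

```hcl
variable "instance_count" {
  description = "Number of EC2 instances"
  type        = string
  default     = "1"
}

# No default, so callers must supply a value (Required: YES).
variable "welcome_page" {
  description = "Content to be displayed at home page"
  type        = string
}
```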

Conclusion

To learn more about configuration management, compliance and governance, and provisioning IT infrastructure, we recommend the following steps:

- Read more about Infrastructure as Code in CI/CD Pipeline

- Explore Infrastructure as Code Security and Best Practices
