What is Kubernetes?

To debug an application running in Kubernetes, you first need to understand Kubernetes itself and Docker containers. So, let's start with a quick introduction: Kubernetes is an open-source container orchestration platform for deploying, scaling, and managing applications. Its aim is to automate the deployment, scaling, and operations of application containers.

- Google developed Kubernetes and open-sourced it in 2014. It was later donated to the Cloud Native Computing Foundation (CNCF), which maintains it today. A major reason for its wide adoption is its flexibility.

- As the number of servers grows, it becomes hard to manage all the containers, keep track of them, and know which application is running on which server. Kubernetes is used to reduce this complexity.


What are Docker Containers?

- Docker is a platform to build, ship, and run containers. Kubernetes, in turn, is an open-source orchestration platform for such containers and is broader in scope than Docker Swarm, Docker's own orchestration tool.

- Both are open-source platforms, and both are widely used to build microservices. Docker is designed to make it easier to create, deploy, and run applications by using containers, which in turn makes applications running in Kubernetes easier to debug.

What are its key features?

The key features of Kubernetes are listed below:

Horizontal Scaling

Kubernetes can scale horizontally: it can deploy additional pods, each running your containers, to handle more load. When autoscaling is configured, your containers are scaled automatically.

Self Healing

Self-healing is one of the best features of Kubernetes: it automatically restarts containers that fail.

Automated Scheduling

Automated scheduling is a core feature of Kubernetes. The scheduler is a critical part of the platform: it is responsible for matching each pod with a node.

Load Balancing

Load balancing distributes incoming traffic across multiple pods, and because Kubernetes handles it at the dispatch level, it is easy to implement.

Rollback and Rollout Automatically

Kubernetes can roll out changes and roll them back according to your requirements. A rollout applies an update gradually, and if something goes wrong, a rollback restores the previous version.

Storage Orchestration

Storage orchestration lets us automatically mount the storage system of our choice, such as local storage or storage from a public cloud provider. Many more features are available, as shown in the diagram.
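As a rough illustration of mounting storage, the sketch below builds a PersistentVolumeClaim and a Pod that mounts it, as plain Python dictionaries serialized to JSON (kubectl accepts JSON manifests as well as YAML). It assumes the cluster has a default StorageClass that can provision the claim. The names `data-claim`, `storage-demo`, and `/data`, along with the `nginx:1.25` image, are hypothetical placeholders.

```python
import json

# Hypothetical names and image below are placeholders for illustration only.

def build_pvc(name: str, size: str) -> dict:
    """Return a PersistentVolumeClaim requesting `size` of ReadWriteOnce storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }

def build_pod_with_volume(pod_name: str, image: str, claim_name: str, mount_path: str) -> dict:
    """Return a Pod whose single container mounts the claim at `mount_path`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            # The pod-level volume refers to the claim by name.
            "volumes": [
                {"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}
            ],
            "containers": [
                {
                    "name": "app",
                    "image": image,
                    # The container mounts the volume by its pod-level name.
                    "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                }
            ],
        },
    }

if __name__ == "__main__":
    pvc = build_pvc("data-claim", "1Gi")
    pod = build_pod_with_volume("storage-demo", "nginx:1.25", "data-claim", "/data")
    # Wrap both objects in a v1 List so they can be applied from a single file.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pvc, pod]}, indent=2))
```

You could save the output to a file and run `kubectl apply -f` on it to create both objects. The Pod stays Pending until the claim is bound to a volume.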

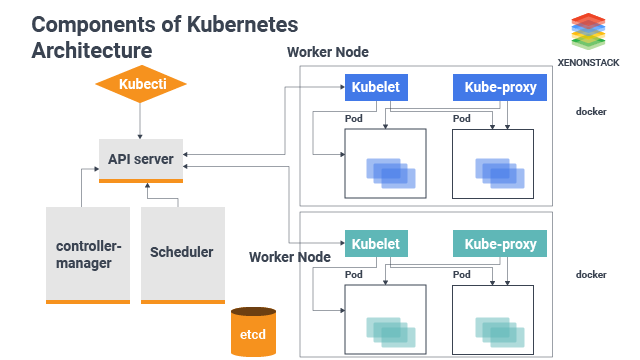

What are its components and architecture?

Kubernetes uses a client-server architecture. In the diagram, the master acts as the server and the nodes act as clients. A multi-master setup is possible, but in the setup shown, a single master server controls the client nodes. Both the server and the clients consist of various components, which are described below.

Master/Server Component

The primary components of the master node are the following:

Kubernetes Scheduler

The scheduler is a critical part of the platform: it is responsible for matching each pod with a node. After reading a pod's requirements, it schedules the pod on the best-fit node.
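The scheduler's choice is usually described as two phases: first it filters out nodes that cannot run the pod, then it scores the nodes that remain. The sketch below models only CPU and memory requests. Its score is loosely based on the least-allocated idea, which prefers the node left with the most free capacity. The real kube-scheduler runs many more plugins, and the `Node` and `PodRequest` types are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Node:
    name: str
    cpu_capacity_m: int       # allocatable CPU in millicores
    mem_capacity_mi: int      # allocatable memory in MiB
    cpu_requested_m: int = 0  # CPU already requested by pods on this node
    mem_requested_mi: int = 0 # memory already requested by pods on this node

@dataclass
class PodRequest:
    cpu_m: int
    mem_mi: int

def fits(node: Node, pod: PodRequest) -> bool:
    """Filter phase: the node must have enough unrequested CPU and memory."""
    return (node.cpu_requested_m + pod.cpu_m <= node.cpu_capacity_m
            and node.mem_requested_mi + pod.mem_mi <= node.mem_capacity_mi)

def score(node: Node, pod: PodRequest) -> float:
    """Score phase: average free fraction of CPU and memory after placement, scaled to 0-100."""
    cpu_free = (node.cpu_capacity_m - node.cpu_requested_m - pod.cpu_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_requested_mi - pod.mem_mi) / node.mem_capacity_mi
    return 100 * (cpu_free + mem_free) / 2

def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Return the best-fit node name, or None if no node fits (the pod would stay Pending)."""
    feasible = [n for n in nodes if fits(n, pod)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: score(n, pod)).name

if __name__ == "__main__":
    nodes = [
        Node("node-a", 2000, 4096, cpu_requested_m=1500, mem_requested_mi=1024),
        Node("node-b", 4000, 8192, cpu_requested_m=1000, mem_requested_mi=2048),
        Node("node-c", 1000, 2048),
    ]
    print(schedule(PodRequest(cpu_m=600, mem_mi=512), nodes))
```

Here `node-a` is filtered out because it lacks the CPU. `node-b` scores about 64.4 against 57.5 for `node-c`, so the pod would be placed on `node-b`.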

Cloud Controller Manager

The cloud controller manager is responsible for running the controller processes that depend on the underlying cloud provider. For example, when a controller needs to check a volume or a load balancer in the cloud infrastructure, the cloud controller manager handles it. Checking whether a node has been terminated, or setting up routes, is also governed by it.

Controller Manager

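The kube-controller-manager runs the core controllers that do not depend on a cloud provider, such as the node controller and the replication controller. Each controller watches the state of the cluster and works to move it toward the desired state. The cloud controller manager and the kube-controller-manager are separate components that work differently, which is worth knowing when debugging applications in the Kubernetes architecture.

etcd Cluster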

etcd is a distributed key-value store, typically run as a cluster of servers/hosts, that stores the cluster's configuration details and state. For security reasons, it is accessible only through the API server.

Client/Node Component

The vital components of a client/worker node are the following:

Pod

A pod is a group of one or more containers and is the smallest deployable unit in Kubernetes; Kubernetes does not run containers directly. Containers in the same pod share the same resources and local network, so they can easily communicate with each other.

Kubelet

The kubelet runs on each node and is responsible for maintaining the pods on that node, each of which contains a set of containers. It works to ensure that pods and their containers are running in the desired state and are healthy.
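The restart behavior behind self-healing can be sketched as follows. According to the Kubernetes pod lifecycle documentation, the kubelet restarts exited containers with an exponential back-off delay (10s, 20s, 40s, ...) that is capped at five minutes. The back-off is reset once a container has run for 10 minutes without problems, and while the kubelet waits, the pod reports `CrashLoopBackOff`. The `next_delay` and `simulate` helpers are hypothetical simplifications that ignore restart policies and probes.

```python
from typing import List

BASE_DELAY_S = 10    # first restart delay
MAX_DELAY_S = 300    # the back-off is capped at five minutes
RESET_AFTER_S = 600  # simplification: a run of 10+ minutes resets the back-off

def next_delay(previous_delay_s: int, ran_for_s: int) -> int:
    """Delay before the next restart, given the previous delay and how long the container ran."""
    if previous_delay_s == 0 or ran_for_s >= RESET_AFTER_S:
        return BASE_DELAY_S
    return min(previous_delay_s * 2, MAX_DELAY_S)

def simulate(run_durations_s: List[int]) -> List[int]:
    """Restart delays for a container that exits after each of the given run durations."""
    delays: List[int] = []
    delay = 0
    for ran_for_s in run_durations_s:
        delay = next_delay(delay, ran_for_s)
        delays.append(delay)
    return delays

if __name__ == "__main__":
    print(simulate([5] * 7))         # a container that crashes every 5 seconds
    print(simulate([5, 5, 700, 5]))  # the third run lasts longer than 10 minutes
```

The first call prints `[10, 20, 40, 80, 160, 300, 300]`. The second prints `[10, 20, 10, 20]`, because the long third run resets the back-off.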

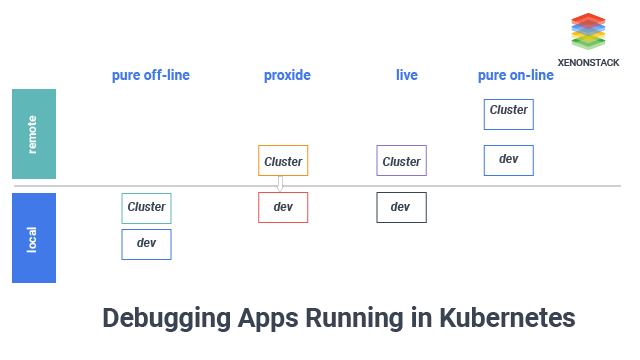

Debugging and Developing services locally in Kubernetes

An application on Kubernetes usually consists of several services, each running in its own container. Developing and debugging applications in such clusters can be heavy work, because it often requires getting a shell in a running container and running all your tools remotely. Telepresence is a tool for debugging applications running in Kubernetes locally without that difficulty, and it lets us use our own tools, such as an IDE and a debugger. This section describes how Telepresence is used to develop and debug services that run in a cluster from a local machine. To do this, you need access to a Kubernetes cluster, and Telepresence must also be installed.

How to Develop and Debug Existing Services?

When developing an application on Kubernetes, we usually program or debug a single service at a time, but that service often depends on other services in the cluster for debugging and testing. Telepresence's --swap-deployment option swaps an existing deployment in the cluster for a proxy. Swapping connects us to the remote cluster and lets us run the service locally while we debug it.
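Here is a minimal sketch of building the swap command. It assumes the legacy Telepresence 1.x CLI, in which `--swap-deployment`, `--namespace`, `--expose`, and `--run` are documented options; Telepresence 2 replaced this workflow with `telepresence intercept`. The deployment name `hello-world` and the local command are hypothetical. The script only prints the command and does not run it.

```python
import shlex
from typing import List, Optional

def swap_deployment_command(deployment: str, local_command: List[str],
                            expose_port: Optional[int] = None,
                            namespace: Optional[str] = None) -> List[str]:
    """Build a Telepresence 1.x command that swaps `deployment` for a local process."""
    argv = ["telepresence", "--swap-deployment", deployment]
    if namespace is not None:
        argv += ["--namespace", namespace]
    if expose_port is not None:
        # Forward cluster traffic for this port to the local process.
        argv += ["--expose", str(expose_port)]
    # --run goes last: everything after it is the local command Telepresence starts.
    argv += ["--run", *local_command]
    return argv

if __name__ == "__main__":
    argv = swap_deployment_command(
        "hello-world", ["python3", "-m", "http.server", "8000"], expose_port=8000
    )
    print(shlex.join(argv))
```

This prints `telepresence --swap-deployment hello-world --expose 8000 --run python3 -m http.server 8000`. The list could be passed to `subprocess.run` on a machine where Telepresence 1.x is installed.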

What are its benefits and limitations?

The main benefits and limitations are highlighted below:

Benefits of Debugging in Kubernetes

The biggest advantage is that developers can now use dedicated tools for debugging on Kubernetes, such as those covered in the Armador repo: the Telepresence tool, as well as Ksync and Squash, to debug the application.

Limitations of Debugging in Kubernetes

- Debugging locally is a standard part of every developer's process and development lifecycle. On Kubernetes, however, this approach becomes more difficult.

- Kubernetes has its own orchestration mechanism and optimization methodologies, and developers can debug microservices hosted by cloud providers. That methodology works well for running applications, but it makes debugging applications in Kubernetes more difficult.
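When stepping through code locally is not an option, debugging usually falls back to inspecting the workload in place with kubectl. The sketch below only assembles argument lists for a few common commands: `describe`, `logs --previous`, `exec`, and `port-forward`. The pod name, namespace, and ports are hypothetical. Passing the lists to `subprocess.run` assumes kubectl is installed and configured for the right cluster.

```python
import shlex
from typing import Dict, List

def debug_commands(pod: str, namespace: str = "default",
                   local_port: int = 8080, pod_port: int = 80) -> Dict[str, List[str]]:
    """Return kubectl argument lists for common in-cluster debugging steps."""
    base = ["kubectl", "--namespace", namespace]
    return {
        # Events, restart counts, and probe failures for the pod.
        "describe": base + ["describe", "pod", pod],
        # Logs from the previous (crashed) instance of the container.
        "previous_logs": base + ["logs", pod, "--previous"],
        # Interactive shell inside the container (requires /bin/sh in the image).
        "shell": base + ["exec", "-it", pod, "--", "/bin/sh"],
        # Forward a local port to the pod so local tools can reach it.
        "port_forward": base + ["port-forward", f"pod/{pod}", f"{local_port}:{pod_port}"],
    }

if __name__ == "__main__":
    for purpose, argv in debug_commands("my-app-pod", namespace="dev").items():
        print(f"{purpose}: {shlex.join(argv)}")
```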

Conclusion

Kubernetes deployment is built on the concept of pods. Pods run on nodes, which are the servers in the cluster, and a pod can hold a single container or multiple containers. Kubernetes groups the containers that make up an application into logical units for easy management and discovery.
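To make the single-versus-multiple-container point concrete, here is a sketch of a two-container Pod manifest built in Python and printed as JSON. Containers in a pod share a network namespace, so the hypothetical `poller` sidecar can reach the `web` container at `localhost:80`. The names and image tags are illustrative assumptions.

```python
import json

def two_container_pod(name: str) -> dict:
    """Return a Pod manifest with a web server and a sidecar that polls it."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                },
                {
                    "name": "poller",
                    "image": "busybox:1.36",
                    # Containers in one pod share a network namespace,
                    # so the sidecar reaches nginx on localhost.
                    "command": [
                        "sh", "-c",
                        "while true; do wget -qO- http://localhost:80 >/dev/null; sleep 10; done",
                    ],
                },
            ]
        },
    }

if __name__ == "__main__":
    print(json.dumps(two_container_pod("pod-demo"), indent=2))
```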
