AI Algorithms for Data Quality Detection

Anomaly Detection Models

Accurate anomaly detection is the core of AI-based data quality monitoring. Anomaly detection models learn from historical records, such as past performance figures or share prices, in order to identify sudden changes in trends. For example, a model may flag a load that contains more records than is typical, or values that fall outside the projected range, and so prompt further scrutiny.
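
As a minimal sketch of this idea, the check below flags a new daily row count that lies far from the historical mean. The z-score rule, the threshold of 3.0, the function name `is_anomalous`, and the sample counts are all illustrative assumptions, not a method prescribed by this article; production systems often use more robust or learned models.

```python
from statistics import mean, stdev


def is_anomalous(history, new_value, threshold=3.0):
    """Return True if new_value lies more than `threshold` sample standard
    deviations away from the mean of the historical values."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All historical values are identical: any different value is unusual.
        return new_value != mu
    return abs(new_value - mu) / sigma > threshold


# Hypothetical row counts from the last seven daily loads.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]

print(is_anomalous(daily_row_counts, 1008))  # a typical load
print(is_anomalous(daily_row_counts, 5000))  # far more records than usual
```

A simple statistical rule like this is easy to explain to stakeholders, which is often useful when a flagged load has to be justified before it is held back for review.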

Pattern Recognition Implementation

Advanced pattern recognition uses machine learning and statistical models to check that incoming data stays consistent. These algorithms process data over time and watch for changes, which may be caused by schema creep or by shifts in transaction patterns.
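
Schema creep is one of the easier patterns to check mechanically. The sketch below compares an expected schema with an observed one and reports added, removed, and retyped columns. The function name `detect_schema_drift`, the column names, and the type labels are hypothetical; this is a plain rule-based comparison, not a machine learning model.

```python
def detect_schema_drift(expected, observed):
    """Compare two {column_name: type_name} mappings and report differences."""
    expected_cols = set(expected)
    observed_cols = set(observed)
    return {
        "added": sorted(observed_cols - expected_cols),
        "removed": sorted(expected_cols - observed_cols),
        "retyped": sorted(
            col
            for col in expected_cols & observed_cols
            if expected[col] != observed[col]
        ),
    }


# Hypothetical schemas: the agreed contract versus what arrived today.
expected_schema = {"order_id": "NUMBER", "amount": "NUMBER", "created_at": "TIMESTAMP"}
observed_schema = {"order_id": "VARCHAR", "amount": "NUMBER", "coupon_code": "VARCHAR"}

print(detect_schema_drift(expected_schema, observed_schema))
```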

Predictive Error Identification

This approach is a form of prediction in which an AI model identifies which errors are likely to occur before they happen. Using time-series analysis and business analytics, it can predict problems such as late data arrival or missing fields, so that teams can take corrective action in advance.
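
To illustrate the time-series idea, the sketch below fits a straight line to recent arrival delays and extrapolates one step ahead to see whether the next load is likely to breach a deadline. The linear trend, the function names, and the delay and SLA figures are assumptions chosen for brevity; a real deployment would more likely use a seasonal or learned forecasting model.

```python
def forecast_next(values):
    """Fit a least-squares line to values (indexed 0..n-1) and
    extrapolate it to index n."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept + slope * n


def likely_to_breach(delays_minutes, sla_minutes):
    """Return True if the forecast delay for the next load exceeds the SLA."""
    return forecast_next(delays_minutes) > sla_minutes


# Hypothetical arrival delays (in minutes) for the last five loads.
recent_delays = [10, 12, 14, 16, 18]

print(forecast_next(recent_delays))
print(likely_to_breach(recent_delays, sla_minutes=19))
```

The value of the forecast lies in the lead time: a warning issued one load ahead leaves room to reschedule downstream jobs before the late data actually arrives.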

Snowflake-Specific Integration Architecture

Warehouse Performance Optimization

Good data quality monitoring should complement Snowflake's capabilities and never compromise its efficiency. Workload management, query optimization, and resource isolation allow monitoring activities to run alongside production workloads, so that heavy monitoring usage does not harm productivity. Features such as auto-scaling of compute clusters are also available to absorb such loads.

Query Monitoring Infrastructure

Infrastructure that continuously monitors query performance yields further data quality findings. Tracking query execution times, errors, and resource consumption helps organizations correlate performance problems with potential data quality issues and sharpen their strategies.
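
The sketch below shows one way such tracking could be summarised: it aggregates query records per pipeline and lists the pipelines whose failure rate or run time suggests a closer look at the underlying data. The record fields (`pipeline`, `status`, `elapsed_ms`), the thresholds, and the sample records are hypothetical and stand in for whatever query log an organization actually exports.

```python
from collections import defaultdict


def summarize_queries(records, slow_ms=60_000):
    """Count total, failed, and slow queries for each pipeline."""
    stats = defaultdict(lambda: {"total": 0, "failed": 0, "slow": 0})
    for rec in records:
        entry = stats[rec["pipeline"]]
        entry["total"] += 1
        if rec["status"] != "SUCCESS":
            entry["failed"] += 1
        if rec["elapsed_ms"] > slow_ms:
            entry["slow"] += 1
    return dict(stats)


def pipelines_to_review(stats, max_failure_rate=0.2):
    """Return pipelines with a high failure rate or at least one slow query."""
    return sorted(
        name
        for name, entry in stats.items()
        if entry["failed"] / entry["total"] > max_failure_rate or entry["slow"] > 0
    )


# Hypothetical query log records.
query_log = [
    {"pipeline": "orders", "status": "SUCCESS", "elapsed_ms": 1200},
    {"pipeline": "orders", "status": "SUCCESS", "elapsed_ms": 1500},
    {"pipeline": "customers", "status": "FAILED", "elapsed_ms": 300},
    {"pipeline": "customers", "status": "SUCCESS", "elapsed_ms": 90_000},
]

print(pipelines_to_review(summarize_queries(query_log)))
```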

Resource Allocation Management

Optimal resource allocation means the flexible distribution of compute and storage resources in Snowflake. Using predictive models to match the resources allocated to monitoring with workload requirements keeps monitoring manageable and cost-effective. This is even more relevant for businesses whose data volumes vary over time.

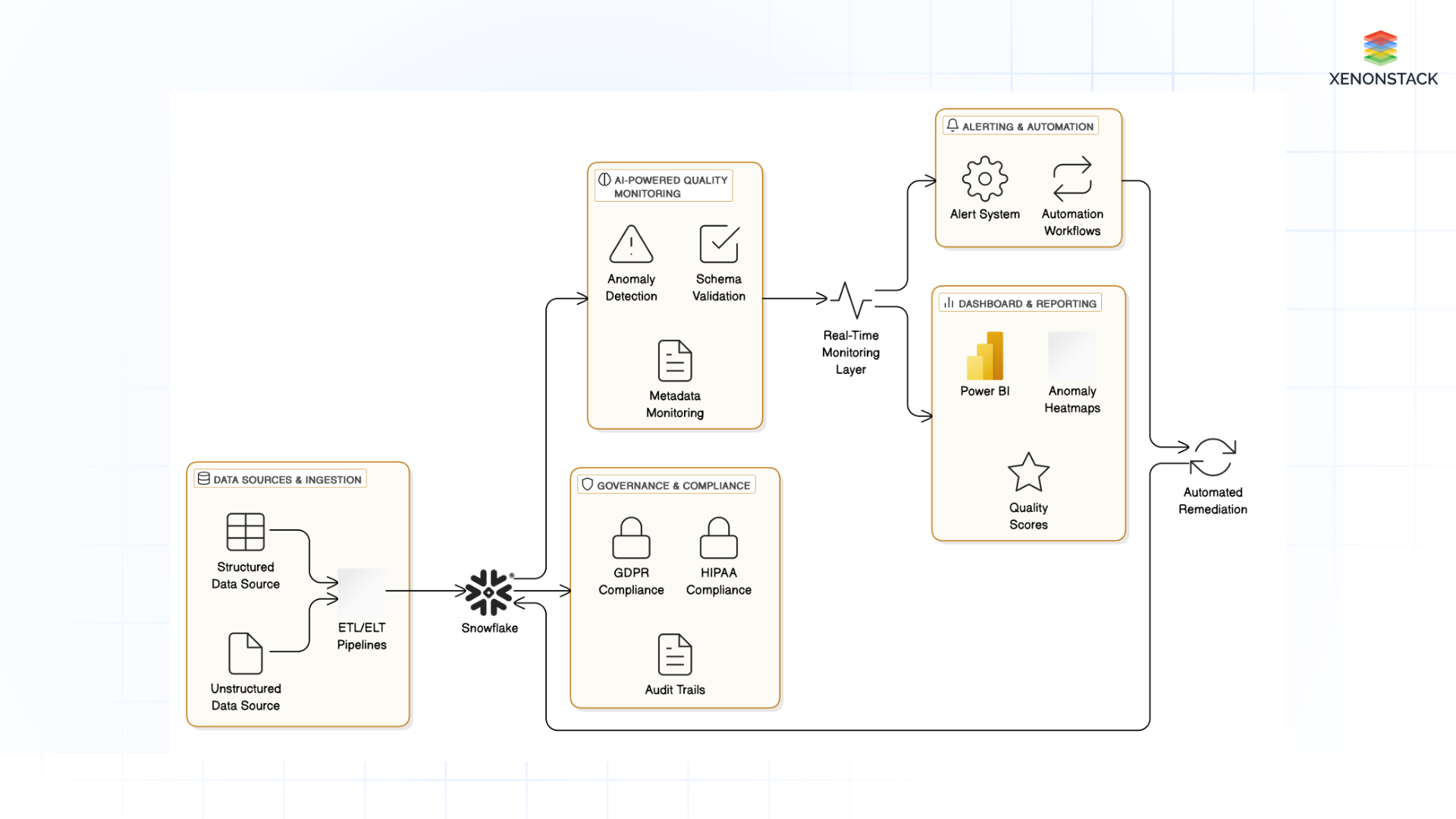

Data Pipeline Quality Assurance

Figure 2: Data Pipeline Quality Assurance

ETL/ELT Process Monitoring

Maintaining data quality is crucial in ETL/ELT processes, which extract, transform, cleanse, and load data. Real-time monitoring should identify extraction, transformation, and loading errors in pipelines. When AI monitoring tools are added to track pipeline performance, failures and bottlenecks can be detected as soon as they occur.

Transformation Validation

The main goal of transformation validation is to ensure that data transformations are error-free. By comparing transformed data against the original records, AI models verify that no mistakes are introduced during data processing.
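
A basic version of this comparison can be sketched without any model at all: re-apply the intended transformation to each source row and check the result against what was actually loaded. The function name `validate_transformation`, the `to_dollars` rule, and the sample rows are hypothetical, and the check is a deterministic reconciliation rather than an AI technique.

```python
def validate_transformation(source_rows, target_rows, key, transform):
    """Return a list of discrepancies between the target rows and the
    result of applying `transform` to each source row (empty if none)."""
    issues = []
    if len(source_rows) != len(target_rows):
        issues.append(
            f"row count mismatch: {len(source_rows)} source vs {len(target_rows)} target"
        )
    target_by_key = {row[key]: row for row in target_rows}
    for src in source_rows:
        expected = transform(src)
        actual = target_by_key.get(src[key])
        if actual is None:
            issues.append(f"missing key {src[key]!r} in target")
        elif actual != expected:
            issues.append(f"value mismatch for key {src[key]!r}")
    return issues


def to_dollars(row):
    """Hypothetical transformation rule: convert cents to dollars."""
    return {"id": row["id"], "amount_usd": row["amount_cents"] / 100}


source = [{"id": 1, "amount_cents": 1250}, {"id": 2, "amount_cents": 300}]
target = [{"id": 1, "amount_usd": 12.5}, {"id": 2, "amount_usd": 30.0}]

print(validate_transformation(source, target, key="id", transform=to_dollars))
```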

Data Lineage Tracking

Data lineage tracking records the information needed to follow data as it moves through an organisation. Data engineers and auditors use AI-based lineage tools to trace every change back to its original source, which supports the auditability and trustworthiness of every piece of information.
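
At its simplest, lineage can be modelled as a graph in which each dataset points to the datasets it was derived from. The sketch below walks that graph to list every upstream source of a given dataset. The mapping format, the function name `upstream_sources`, and the dataset names are illustrative assumptions, not the data model of any particular lineage tool.

```python
def upstream_sources(lineage, dataset):
    """Return every dataset that `dataset` depends on, directly or indirectly.

    `lineage` maps each dataset name to the list of datasets it is derived from.
    """
    seen = set()
    stack = [dataset]
    while stack:
        current = stack.pop()
        for parent in lineage.get(current, []):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return sorted(seen)


# Hypothetical lineage for a reporting table.
lineage = {
    "revenue_report": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
}

print(upstream_sources(lineage, "revenue_report"))
```

An auditor who questions a figure in `revenue_report` can use such a traversal to see at a glance which raw inputs could have influenced it.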

Dashboard and Visualization Development

Real-Time Monitoring Interfaces

An actionable real-time dashboard is a core deliverable, and it is typically built and refined iteratively. Use the visualization features that Snowflake offers, together with tools such as Microsoft Power BI, to design dashboards that present key metrics, trends, and alerts in a form that is easy to comprehend.

Alert Visualization Techniques

The quickest way to make alerts usable is to display them visually. Red-amber-green color coding, brief descriptions, and drill-down views give teams an early detection capability. Alerts should be displayed in a standard way, ordered by their severity level and their expected consequences.
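
The ordering rule can be sketched as follows: each alert is mapped to a red, amber, or green status from its failure percentage, and alerts are then sorted by status and by the number of rows affected. The thresholds (1% and 5%), the alert fields, and the function names are hypothetical choices for illustration.

```python
SEVERITY_ORDER = {"red": 0, "amber": 1, "green": 2}


def rag_status(failed_pct, amber_at=1.0, red_at=5.0):
    """Map a failure percentage to a red/amber/green status."""
    if failed_pct >= red_at:
        return "red"
    if failed_pct >= amber_at:
        return "amber"
    return "green"


def order_alerts(alerts):
    """Sort alerts by severity first, then by rows affected (largest first)."""
    return sorted(
        alerts,
        key=lambda a: (SEVERITY_ORDER[rag_status(a["failed_pct"])], -a["rows_affected"]),
    )


# Hypothetical alerts raised by the monitoring checks.
alerts = [
    {"name": "null_emails", "failed_pct": 0.2, "rows_affected": 10},
    {"name": "bad_currency", "failed_pct": 7.0, "rows_affected": 500},
    {"name": "late_orders", "failed_pct": 2.0, "rows_affected": 50},
    {"name": "duplicate_ids", "failed_pct": 9.0, "rows_affected": 2000},
]

for alert in order_alerts(alerts):
    print(rag_status(alert["failed_pct"]), alert["name"])
```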

Performance Metric Displays

Detection efficiency, resource consumption, data processing speed, and duration estimates are measured periodically to assess the performance of the data quality monitoring system. Continuous evaluation of these indicators allows organisations to optimise their ways of working and guide their improvement efforts.
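
One common way to quantify detection efficiency, assumed here for illustration, is to compare the records the monitor flagged with the records later confirmed as bad, and to report precision and recall. The function name `detection_metrics` and the sample identifiers are hypothetical.

```python
def detection_metrics(flagged_ids, confirmed_bad_ids):
    """Compute precision and recall of a detector from record identifiers."""
    flagged = set(flagged_ids)
    confirmed = set(confirmed_bad_ids)
    true_positives = len(flagged & confirmed)
    # Guard against division by zero when nothing was flagged or confirmed.
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(confirmed) if confirmed else 0.0
    return {"precision": precision, "recall": recall}


# Hypothetical review outcome for one monitoring period.
print(detection_metrics(flagged_ids=[1, 2, 3, 4], confirmed_bad_ids=[2, 3, 5]))
```

Tracking both numbers over time shows whether tuning is reducing false alarms (precision) without letting real errors slip through (recall).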

Automated Remediation Strategies

Error Correction Implementation

Error correction automation applies a fix as soon as an error is identified. For example, the system can be designed so that, when an error is detected, the data is rolled back to a previously specified state or a data reprocessing procedure is started.
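
The two remediation paths described above can be sketched as a small decision routine: try reprocessing first if a reprocessing step is supplied, and fall back to the last known good snapshot otherwise. The in-memory `SnapshotStore`, the `remediate` function, and the validation rule are hypothetical stand-ins for whatever storage and checks a real pipeline would use.

```python
import copy


class SnapshotStore:
    """A minimal in-memory store of known good copies of a dataset."""

    def __init__(self):
        self._snapshots = {}

    def save(self, name, rows):
        self._snapshots[name] = copy.deepcopy(rows)

    def restore(self, name):
        return copy.deepcopy(self._snapshots[name])


def remediate(rows, validator, store, snapshot_name, reprocess=None):
    """Return (rows, action), where action is 'ok', 'reprocessed',
    or 'rolled_back'."""
    if all(validator(row) for row in rows):
        return rows, "ok"
    if reprocess is not None:
        repaired = reprocess(rows)
        if all(validator(row) for row in repaired):
            return repaired, "reprocessed"
    # Reprocessing was unavailable or did not fix the data: roll back.
    return store.restore(snapshot_name), "rolled_back"


# Hypothetical rule: quantities must not be negative.
def non_negative_qty(row):
    return row["qty"] >= 0


store = SnapshotStore()
store.save("last_good", [{"qty": 1}])

print(remediate([{"qty": -5}], non_negative_qty, store, "last_good"))
```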

Data Cleansing Automation

Automated data cleansing pipelines use AI to validate the data and make it consistent immediately. Because these pipelines connect to Snowflake, incorrect data can be fixed on the fly, which reduces downstream errors and improves data quality.
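
As a simplified illustration of on-the-fly cleansing, the function below normalises a single record before it is loaded. It uses fixed rules (trimming whitespace, treating blank strings as missing, lower-casing email addresses) rather than an AI model, and the function name and field names are hypothetical.

```python
def cleanse_record(record):
    """Return a cleaned copy of a record without modifying the original."""
    cleaned = {}
    for field, value in record.items():
        if isinstance(value, str):
            value = value.strip()
            if value == "":
                value = None  # treat blank strings as missing values
        cleaned[field] = value
    email = cleaned.get("email")
    if isinstance(email, str):
        cleaned["email"] = email.lower()
    return cleaned


# Hypothetical raw record from an upstream source.
raw = {"email": "  Ada@Example.COM ", "country": "   ", "qty": 3}

print(cleanse_record(raw))
```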

Workflow Trigger Configuration

Self-healing systems apply changes whenever emerging quality defects are identified. Performing these repairs inside Snowflake’s environment, through a service such as Snowpipe or through third-party orchestration tools, enables near-instantaneous error correction.

Enterprise Implementation Considerations

Governance Integration Framework

In enterprise-scale environments, data quality monitoring should be integrated into the existing business governance frameworks. Standard policies, roles, and audit trails ensure that the system leaves no loophole that falls short of regulatory or internal requirements.

Compliance Monitoring Systems

Data gathered through ongoing monitoring may be subject to regulatory compliance requirements, including data quality rules or standards such as GDPR, HIPAA, or SOX. Automated audit logs and reporting are essential because they help an organization demonstrate compliance and reduce risk.

Cross-Department Collaboration Methods

AI-based data quality monitoring efforts mostly depend on collaboration among IT, data engineering, compliance, and the business units. Regular interdepartmental discussion helps address stakeholders’ concerns during implementation, so that the system reflects the needs of the organization and can adapt to changing demands.

Key Takeaways for Real-Time Data Quality Monitoring

The real-time data quality monitoring system in Snowflake uses AI to detect and fix data errors, which supports accurate, consistent decisions. It is a partition-based system that combines several AI approaches, including anomaly detection, pattern recognition, and predictive error identification, to apply a range of corrections and to reduce data latency and the need for manual intervention.

Figure 1: AI-Driven Data Quality Monitoring with Snowflake
