Artificial intelligence (AI) has taken a central role in reshaping industries in recent years, and architecture is no exception. While conventional AI has already begun automating tasks like structural optimization and rendering, the rise of Agentic AI signals a significant leap forward. This form of AI doesn't just assist with design; it actively participates in it.

Agentic AI in architecture represents a shift from tool-based design support to intelligent, autonomous collaboration. These AI agents can understand high-level goals, make complex decisions, adapt in real-time, and even innovate design solutions—just like a human designer might. This transformation opens up a new realm of AI-driven architecture that is faster, smarter, and more creative.

What Is Agentic AI? A Foundation for Smart Design Systems

Definition and Core Characteristics

Agentic AI refers to systems that possess autonomous agency—the ability to act independently, pursue defined goals, and learn from outcomes. Unlike narrow AI models, which only execute specific tasks when prompted, Agentic AI models can:

- Understand overarching objectives
- Plan multi-step actions
- Evaluate different strategies
- Adapt to dynamic environments
- Collaborate across tasks

This evolution is particularly relevant to architecture, which involves intricate planning, multi-variable analysis, and a balance of aesthetic, cultural, and environmental factors.

Evolution of Technology in Architecture: From CAD to Agentic AI

Historical Milestones

To appreciate the significance of Agentic AI, it's helpful to look at the technological journey of architectural design:

- Manual Drafting → Pencil-and-paper sketching defined early architectural processes.
- CAD (Computer-Aided Design) → Enabled digital precision and faster workflows.
- BIM (Building Information Modeling) → Integrated data into designs, improving collaboration and project management.
- Parametric Design → Allowed rules-based modeling and advanced 3D forms.
- Machine Learning in Architecture → Optimized designs using historical and predictive data.

We are now entering the era of Agentic AI for architecture, where the system not only processes data but also actively proposes and evolves design concepts.

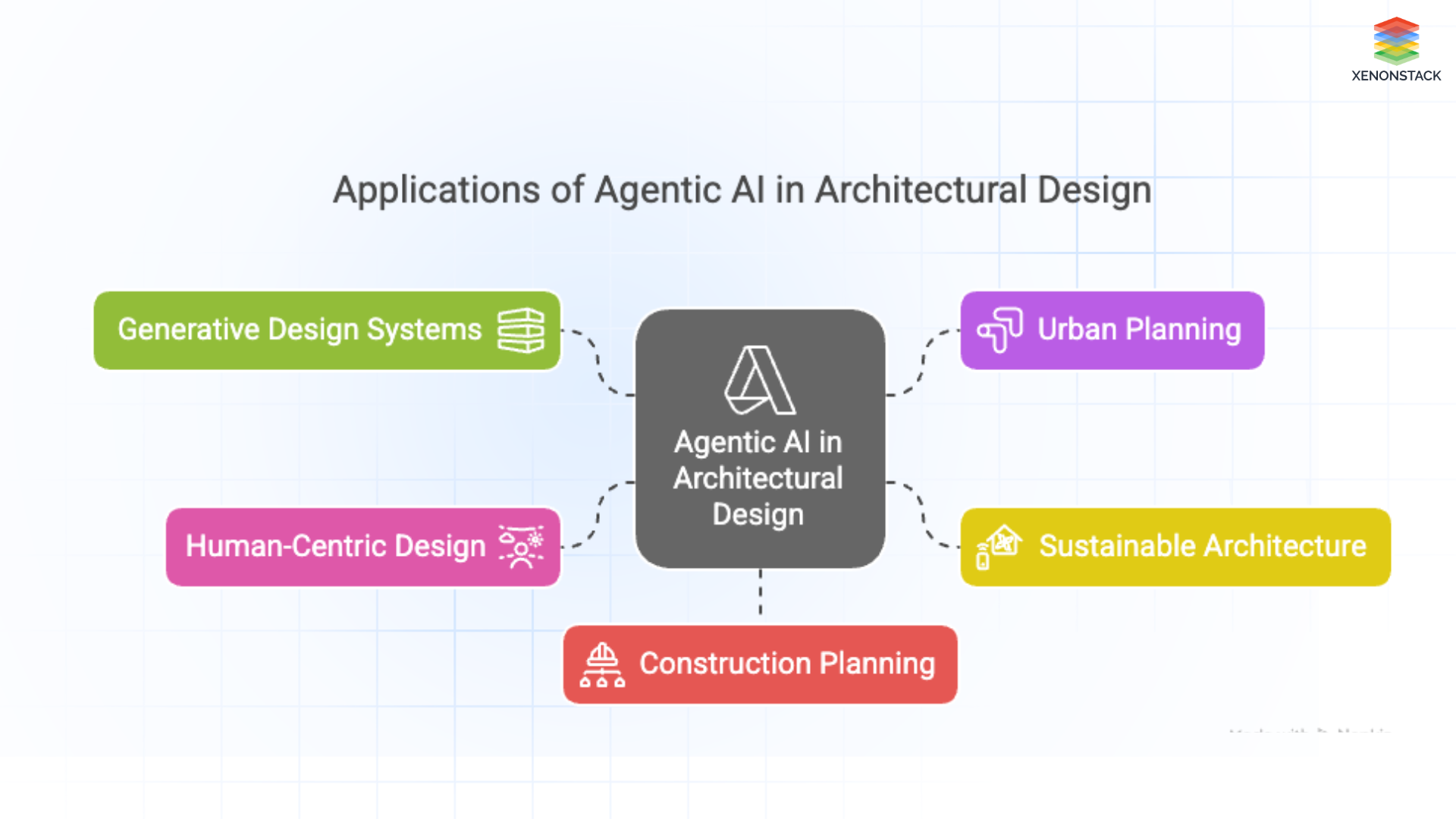

Key Applications of Agentic AI in Architectural Design

Fig 1: Application of Agentic AI in Architectural Design

Fig 1: Application of Agentic AI in Architectural Design1. AI-Powered Generative Design Systems

Agentic AI can take broad human goals—such as sustainability, affordability, or cultural resonance—and autonomously generate architectural designs that satisfy those conditions. These designs are not rigid templates but dynamic, data-informed solutions that evolve through iteration.
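As a rough illustration of the goal-to-design loop described above, the sketch below runs a simple hill-climbing search over building massing. It is not how any particular product works. The `Massing` class, the site limits, the storey height, and the scoring weights are all assumptions made up for this example; a real system would use far richer goals and simulations.

```python
import random
from dataclasses import dataclass

FLOOR_HEIGHT_M = 3.5  # assumed storey height, illustrative only


@dataclass(frozen=True)
class Massing:
    """Hypothetical box-shaped building described by three parameters."""
    width_m: float
    depth_m: float
    floors: int

    @property
    def floor_area(self) -> float:
        return self.width_m * self.depth_m * self.floors

    @property
    def envelope_area(self) -> float:
        # Walls plus roof: a crude proxy for cost and heat loss.
        height = self.floors * FLOOR_HEIGHT_M
        walls = 2 * (self.width_m + self.depth_m) * height
        return walls + self.width_m * self.depth_m


def score(m: Massing, target_area: float) -> float:
    # Lower is better: envelope area plus a penalty for missing the area goal.
    shortfall = max(0.0, target_area - m.floor_area)
    return m.envelope_area + 10.0 * shortfall


def clamp(value, low, high):
    return max(low, min(high, value))


def mutate(m: Massing, rng: random.Random) -> Massing:
    # Propose a nearby variant, kept inside assumed site limits.
    return Massing(
        width_m=clamp(m.width_m + rng.uniform(-2.0, 2.0), 8.0, 60.0),
        depth_m=clamp(m.depth_m + rng.uniform(-2.0, 2.0), 8.0, 60.0),
        floors=clamp(m.floors + rng.choice((-1, 0, 1)), 1, 12),
    )


def generate(target_area: float, iterations: int = 2000, seed: int = 0) -> Massing:
    rng = random.Random(seed)
    best = Massing(20.0, 20.0, 1)
    for _ in range(iterations):
        candidate = mutate(best, rng)
        if score(candidate, target_area) < score(best, target_area):
            best = candidate  # keep only improvements
    return best


START = Massing(20.0, 20.0, 1)
result = generate(target_area=5000.0)
print(result, round(result.floor_area), round(result.envelope_area))
```

The high-level goal (enough floor area with as little envelope as possible) is stated once in `score`, and the loop iterates toward it without a fixed template. Swapping in a different goal only means changing the scoring function.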

2. Autonomous Urban Planning With AI Agents

Urban planning involves complex systems, from transportation and infrastructure to socio-economic data and environmental impact. Agentic AI can:

- Simulate urban growth
- Evaluate green space placement
- Optimize road networks for traffic flow
- Model socio-environmental outcomes over time

This makes AI-driven urban planning more accurate, responsive, and sustainable.

3. Sustainable Architecture Through AI Optimization

Sustainability is one of the biggest challenges in modern architecture. Agentic AI can analyze:

- Solar orientation
- Material lifecycle impact
- Local climate data
- Energy modeling simulations

It can then propose designs that reduce energy consumption, lower carbon emissions, and promote long-term resilience, enabling green building design with AI.
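One of the inputs listed above, solar orientation, can be sketched as a small scoring problem. The example below is a toy model under stated assumptions: the sun samples and their weights are invented placeholders that loosely mimic a northern-hemisphere day, and the model ignores sun altitude, shading, and climate. The function names are hypothetical.

```python
import math

# Hypothetical samples: (sun azimuth in degrees clockwise from north, relative weight).
SUN_SAMPLES = [(120.0, 0.5), (150.0, 0.8), (180.0, 1.0), (210.0, 0.8), (240.0, 0.5)]


def facade_exposure(facade_azimuth_deg: float, samples=SUN_SAMPLES) -> float:
    """Sum the weighted cosine of the angle between the sun and the facade normal."""
    total = 0.0
    for sun_azimuth_deg, weight in samples:
        angle = math.radians(sun_azimuth_deg - facade_azimuth_deg)
        # A facade receives nothing from a sun position behind it.
        total += weight * max(0.0, math.cos(angle))
    return total


def best_orientation(step_deg: int = 5) -> int:
    # Try every candidate facade direction and keep the most exposed one.
    return max(range(0, 360, step_deg), key=facade_exposure)


best = best_orientation()
print(best, round(facade_exposure(best), 3))
```

With these symmetric placeholder samples the search selects a facade azimuth of 180 degrees (due south). An agent could flip the objective to minimize exposure in a hot climate, or combine this score with material and energy terms.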

4. Human-Centric Architectural Design With AI

Agentic AI doesn't only focus on form and function—it can also optimize for human well-being. By analyzing behavioural patterns, biometric feedback, or user surveys, the AI can personalize environments to:

- Reduce stress in hospitals
- Enhance focus in classrooms
- Improve wayfinding in airports

This represents a shift toward AI-enhanced user experience design in architecture.

5. Smart Construction Planning and Automation

Agentic AI extends beyond the drafting table and into construction management. These agents can:

- Monitor material availability
- Predict project delays
- Suggest alternative workflows
- Optimize resource allocation

The result is AI-assisted construction management with real-time adaptability.
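To make the delay-prediction idea above concrete, here is a minimal sketch that recomputes a project's finish date when one task slips. It uses a standard longest-path (critical path) pass over a task graph, which is one common scheduling technique and not necessarily what any given construction tool does. The task names and durations are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical schedule: task -> (duration in days, prerequisite tasks).
TASKS = {
    "foundation": (10, []),
    "steel_delivery": (15, []),
    "frame": (20, ["foundation", "steel_delivery"]),
    "facade": (12, ["frame"]),
    "services": (14, ["frame"]),
    "fit_out": (9, ["facade", "services"]),
}


def project_duration(tasks, delays=None):
    """Return the earliest finish day of the whole project, given optional delays."""
    delays = delays or {}
    graph = {name: deps for name, (_, deps) in tasks.items()}
    finish = {}
    # static_order() yields every task after all of its prerequisites.
    for name in TopologicalSorter(graph).static_order():
        duration, deps = tasks[name]
        start = max((finish[d] for d in deps), default=0)
        finish[name] = start + duration + delays.get(name, 0)
    return max(finish.values())


baseline = project_duration(TASKS)
late_steel = project_duration(TASKS, {"steel_delivery": 7})
late_facade = project_duration(TASKS, {"facade": 1})
print(baseline, late_steel, late_facade)
```

In this toy schedule a seven-day slip in steel delivery pushes the finish from day 58 to day 65, while a one-day slip on the facade is absorbed by slack. That distinction is what lets an agent decide which delays need an alternative workflow and which can be ignored.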

Benefits of Using Agentic AI in Architecture

1. Enhanced Architectural Creativity and Innovation

Rather than replacing architects, Agentic AI acts as a creative design partner. By generating unique concepts and analyzing more possibilities than a person could review by hand, it expands the creative potential of designers.

2. Faster Project Delivery and Design Iteration

What once took weeks of manual iteration can now be done in minutes. AI-accelerated architectural workflows improve speed without sacrificing quality.

3. Data-Driven Decision-Making

Agentic AI draws on large volumes of data, from material costs to environmental performance, to support evidence-based architectural design. This leads to smarter, better-informed decisions at every project stage.

4. Personalized and Inclusive Design at Scale

One of the biggest promises of Agentic AI is mass personalization. It allows architects and developers to offer bespoke living or working environments tailored to individual or cultural needs—without losing efficiency.

5. Dynamic Adaptability in Built Environments

With sensors and real-time feedback loops, agentic architectural systems can continuously adapt—reconfiguring space usage, optimizing lighting or ventilation, and suggesting renovations based on how people interact with the space.

Challenges and Limitations of Agentic AI in Design

1. Risk of Emotionless or Culturally Inappropriate Design

AI may optimize for performance but lack cultural nuance or emotional resonance. Human architects are essential to ensure soul and meaning in design.

2. Algorithmic Bias and Ethical Blind Spots

AI systems may unintentionally reinforce stereotypes or inequalities if trained on biased data. Ethical AI architecture requires diverse, inclusive data sets and transparent algorithms.

3. Intellectual Property and Accountability Issues

Who owns an AI-generated building design? This raises questions about copyright, authorship, and liability, especially when multiple agents contribute.

4. Disruption of Traditional Roles and Workflows

Adopting Agentic AI requires rethinking the roles of architects, engineers, and clients. It can lead to resistance or confusion in traditional firms.

5. Integration Complexity and Cost

Deploying Agentic AI is not plug-and-play. It often requires custom integration, training, and updates—posing a barrier for smaller practices.

Ethical Considerations in AI Architecture

- Transparency: Users should understand how AI makes decisions.
- Privacy: Sensitive urban and personal data must be protected.
- Inclusivity: Designs should reflect the needs of diverse populations.
- Human Oversight: AI must remain a tool, not the sole authority in architectural decisions.

Real-World Examples of Agentic AI in Practice

- Autodesk’s Spacemaker AI

This tool uses AI agents to optimize building layouts based on sunlight, noise, and airflow, empowering architects with innovative suggestions during early-stage design.

- Hypar

Hypar enables architects and engineers to automate repetitive tasks and generate building systems using AI logic.

- Zaha Hadid Architects

This world-renowned firm uses AI for parametric design, optimizing structural integrity while achieving unique aesthetics.

The Future of AI and Human Collaboration in Architecture

We are moving toward a co-design paradigm where architects and AI agents work together. In this vision:

- The architect defines intent
- The AI proposes iterations
- The team evaluates outcomes collaboratively

This collaborative loop is what makes agentic AI in architecture so powerful. It's not about automation for its own sake—it's about elevating human creativity with intelligent assistance.
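The three-step loop above can be sketched in a few lines. This is a deliberately simplified illustration, not a real co-design system: `Intent`, `Proposal`, the glazing-to-daylight rule, and the `review` callback standing in for the human team are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Intent:
    """What the architect asks for (hypothetical 0-100 scores and percentages)."""
    min_daylight: int
    max_glazing_pct: int


@dataclass(frozen=True)
class Proposal:
    glazing_pct: int
    daylight: int


def propose(glazing_pct: int) -> Proposal:
    # Toy stand-in for the AI step: daylight score rises with glazing.
    return Proposal(glazing_pct, daylight=min(100, glazing_pct * 3 // 2))


def co_design(intent: Intent, review: Callable[[Proposal], bool]) -> Optional[Proposal]:
    glazing_pct = 20
    while glazing_pct <= intent.max_glazing_pct:
        proposal = propose(glazing_pct)
        # Nothing is accepted unless it meets the intent AND the team approves it.
        if proposal.daylight >= intent.min_daylight and review(proposal):
            return proposal
        glazing_pct += 5  # next iteration
    return None  # no acceptable proposal within the architect's limits


intent = Intent(min_daylight=60, max_glazing_pct=50)
accepted = co_design(intent, review=lambda p: True)
vetoed = co_design(intent, review=lambda p: False)
print(accepted, vetoed)
```

The design choice worth noting is that the human `review` step is a required gate and not an optional log entry: if the team rejects every iteration, the loop returns nothing instead of choosing on its own.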

Conclusion: Embracing the Agentic Design Era

Agentic AI is more than a trend—it’s a fundamental shift in how we design, plan, and build. It empowers architects to:

- Think more expansively
- Design more responsibly
- Build more sustainably

To make the most of this shift, architects must become fluent in both design and data, embracing the power of AI while protecting the core human values of empathy, culture, and imagination.

As the built environment evolves, so too must our approach. Agentic AI in architecture is not the future—it’s already here.

