Agentic AI refers to AI systems designed to operate as autonomous agents—capable of reasoning, planning, decision-making, and taking action proactively in dynamic environments. These agents aren't just passive models waiting for inputs; they are goal-driven entities capable of interacting with tools, data, APIs, other agents, and humans in a feedback loop of continuous improvement.

Agentic AI redefines what AI can do, from intelligent digital coworkers and workflow optimizers to scientific research assistants and autonomous customer agents.

The Agentic AI Tech Stack is the backbone of autonomous, goal-driven AI systems—enabling agents that don’t just respond but think, plan, and act.

It is structured across three key layers:

-

Application Layer - This is where agents interact with users and systems. Examples include AI copilots, autonomous research bots, and workflow optimizers. This layer defines the experience and interface.

-

Agent + Model Layer - The core intelligence layer, combining large language models (LLMs) with agent frameworks such as LangChain or AutoGen to enable planning, memory, decision-making, and tool usage.

-

Infrastructure Layer - The foundation powering it all—cloud compute, vector databases, orchestration tools, and APIs that ensure scalability, performance, and integration.

What is Agentic AI Tech Stack?

The Agentic AI Tech Stack is the blueprint for building AI systems that can think and act independently. Unlike traditional AI pipelines, it’s designed to power intelligent agents that can reason, make decisions, and take initiative.

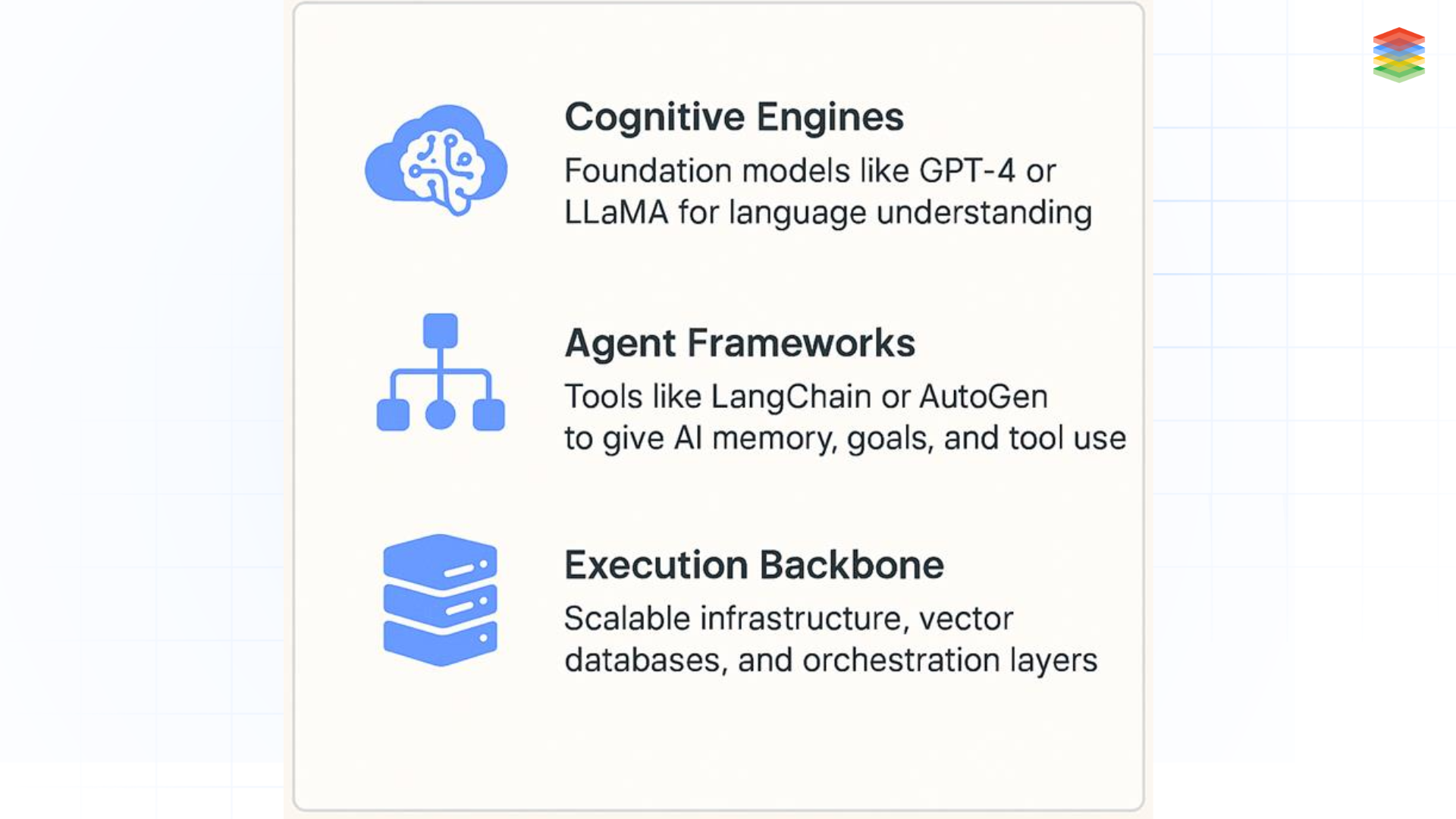

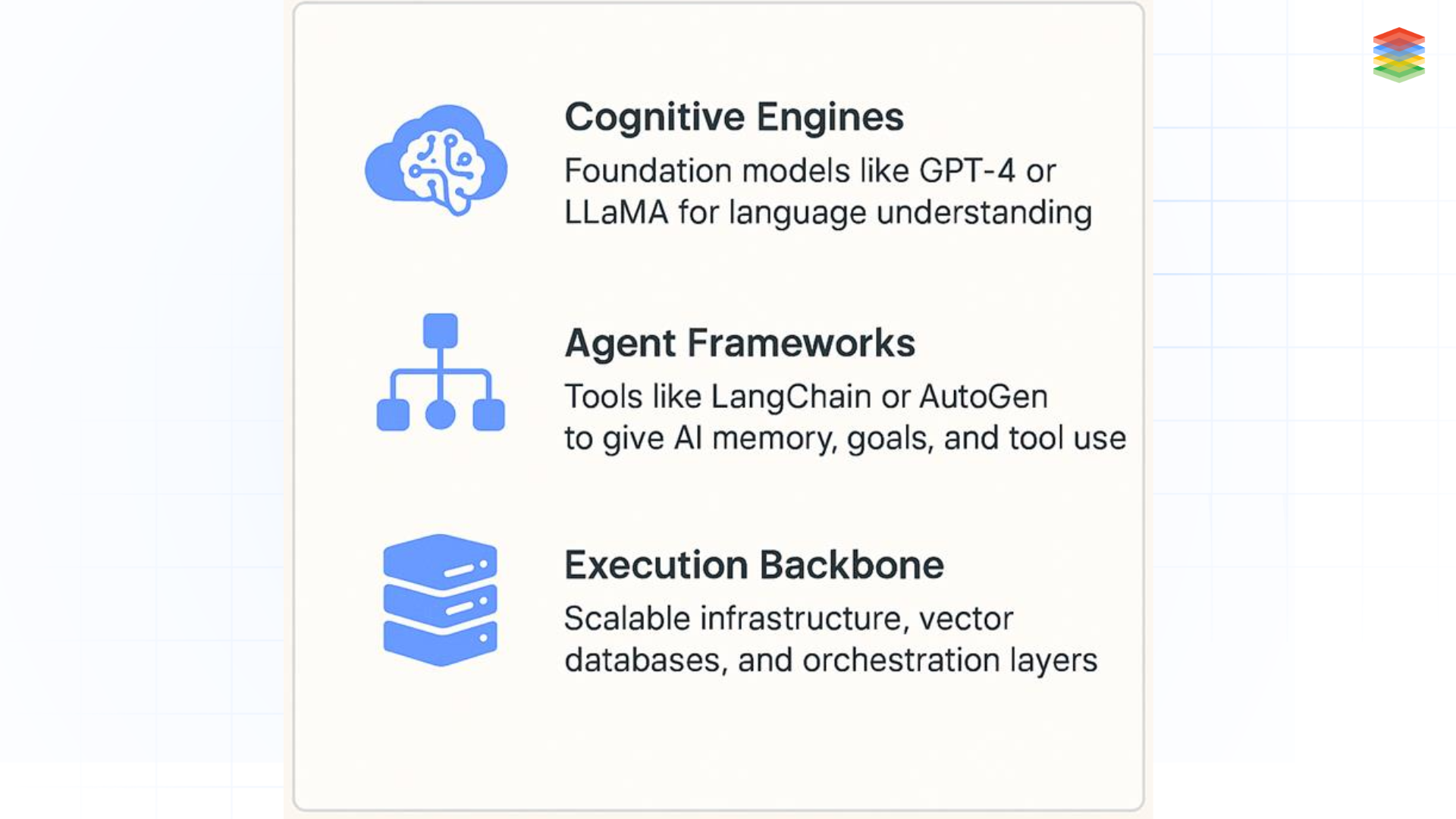

It brings together:

Fig 1: Agentic AI Tech Stack

Together, they enable AI that’s not just smart—but self-directed, adaptive, and action-ready.

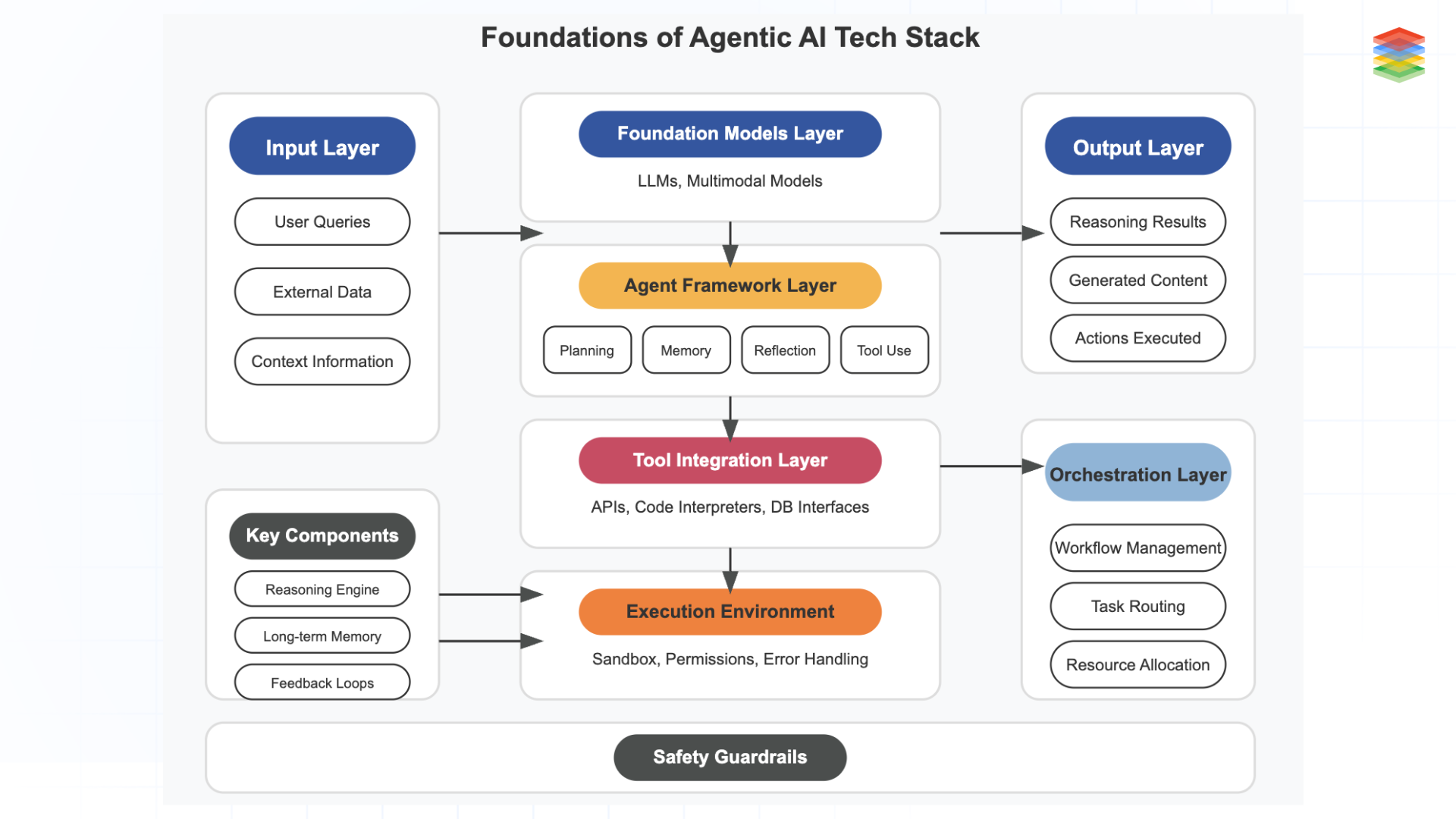

Foundations of Agentic AI Tech Stack

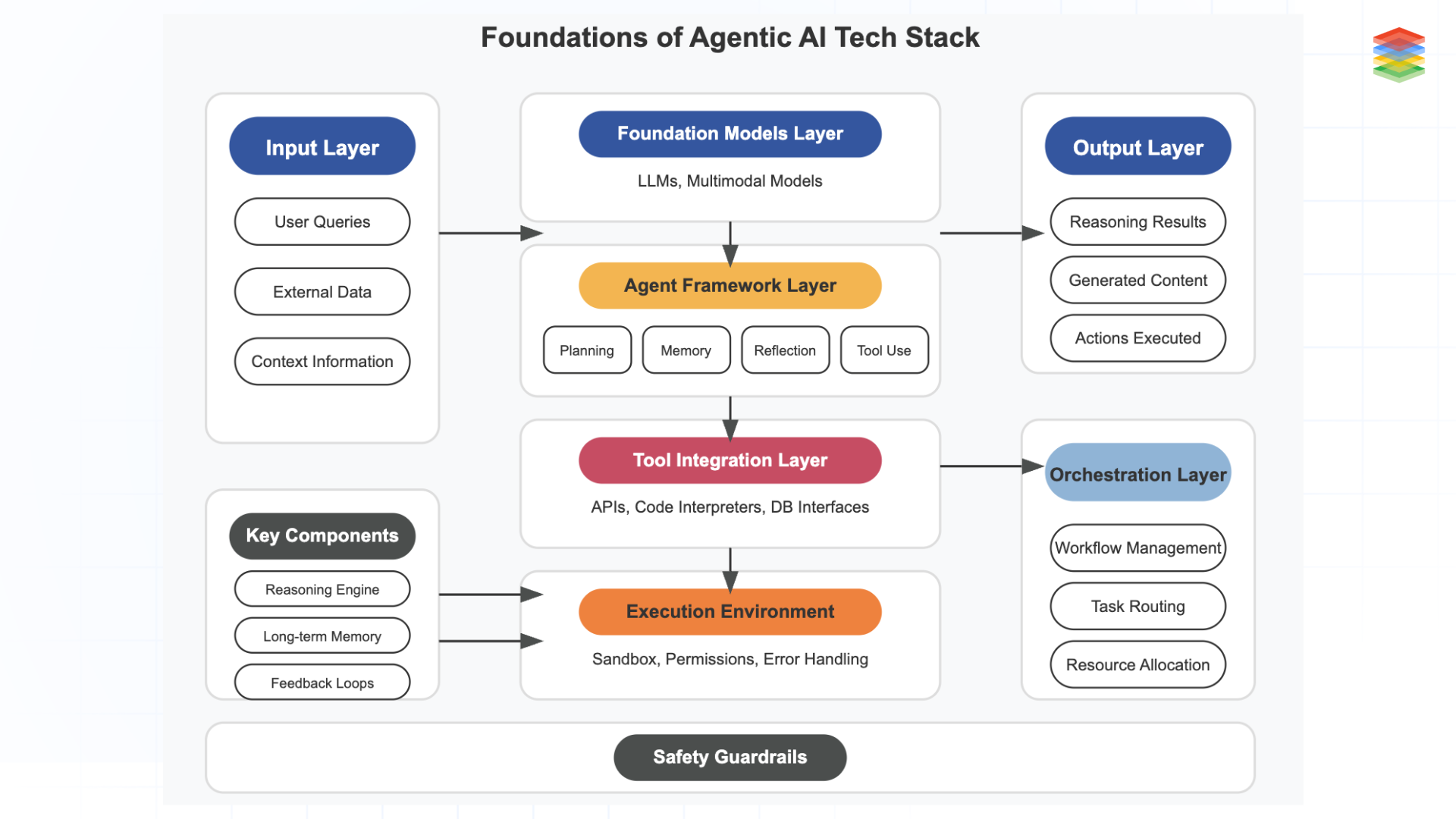

The agentic AI tech stack represents the layered architecture that enables AI systems to operate with autonomy, goal-directed behaviour, and decision-making capabilities. Here's a concise overview of its foundational elements:

Fig 2: Foundations of Agentic AI Stack

Core Architectural Layers

-

Foundation Models Layer

-

Examples: GPT-4, Claude, PaLM, Gemini, Llama

2. Agent Framework Layer

-

Planning modules for decomposing complex goals into actionable steps

-

Memory systems for context retention and experience learning

-

Self-reflection mechanisms to evaluate actions and improve performance

-

Tool selection logic for choosing appropriate capabilities for a given task

3. Tool Integration Layer

-

API connectors to external services and data sources

-

Code interpreters for executing programming languages

-

Document processing capabilities for handling various content formats

-

Database interfaces for structured data management

4. Execution Environment

-

Sandboxed runtime for safe operation

-

Permission systems to control tool access

-

State management to track progress

-

Error handling and recovery mechanisms

5. Orchestration Layer

-

Workflow management to coordinate multiple agents

-

Task routing based on specialization

-

Resource allocation for compute optimization

-

Inter-agent communication protocols

Key Concept of Agentic AI

Key Concept of Agentic AI

-

Reasoning Engine: The central cognitive component powered by foundation models that enables understanding, planning, and decision-making

-

Tool Use: The ability to select and utilize specialized tools based on contextual needs

-

Long-term Memory: Systems for storing and retrieving information across interactions

-

Feedback Loops: Mechanisms for evaluating performance and incorporating feedback

-

Safety Guardrails: Controls to ensure outputs and actions align with user intent and ethical guidelines

The architecture is flexible and modular, allowing for customization based on specific application domains while maintaining the core elements that define agentic behavior: goal orientation, autonomy within defined boundaries, and the ability to learn from experience.

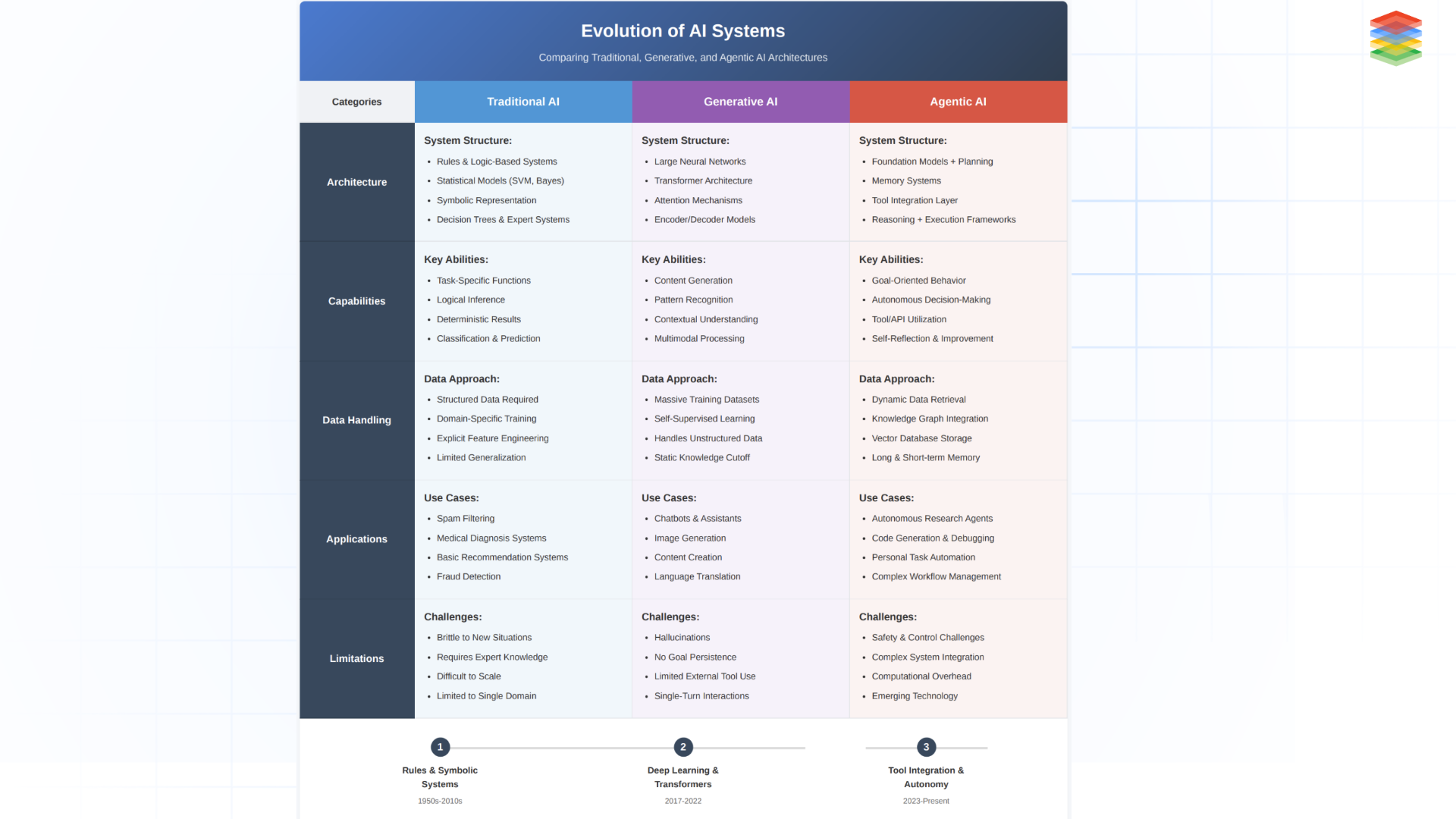

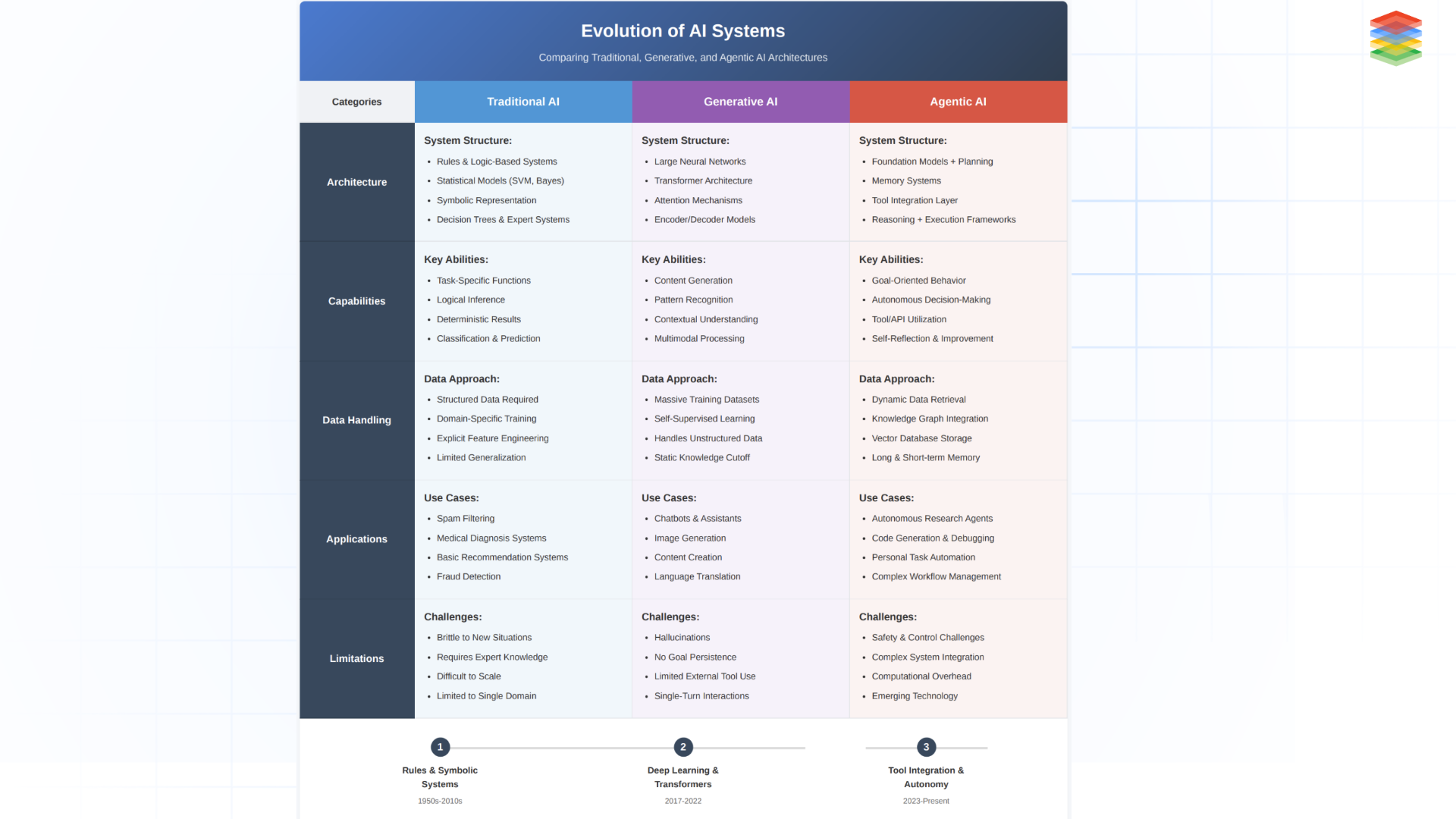

Traditional ML vs. Generative AI vs. Agentic AI

Fig 3: Evolution of AI Systems

Traditional AI is rule-based and task-specific, requiring structured data and explicit programming for narrow applications like spam filtering and medical diagnosis systems. However, it lacks adaptability beyond its predefined parameters.

Generative AI uses large neural networks trained on massive datasets to understand patterns and create content (text, images, code) but operates reactively to prompts without autonomous goal-directed behaviour or persistent memory.

Agentic AI builds on foundation models but adds planning capabilities, memory systems, and tool integration layers, enabling goal-oriented behaviour, autonomous decision-making, and the ability to use external tools/APIs to accomplish complex tasks over multiple steps.

The evolution shows a progression from narrow, explicitly programmed systems (Traditional) to pattern-recognition content generators (Generative) to autonomous goal-pursuing systems (Agentic), with each generation addressing the limitations of previous approaches while introducing new capabilities and challenges.

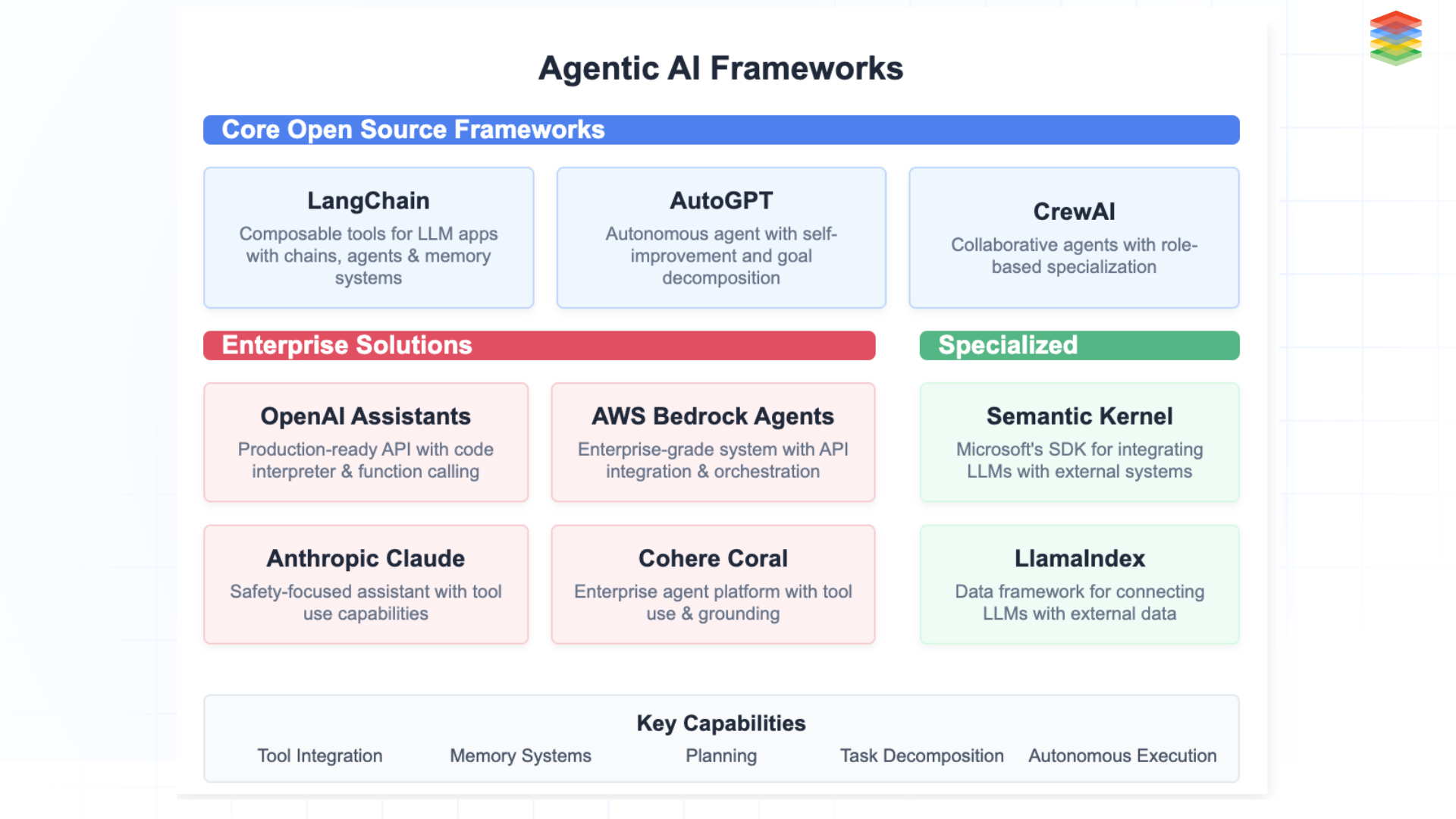

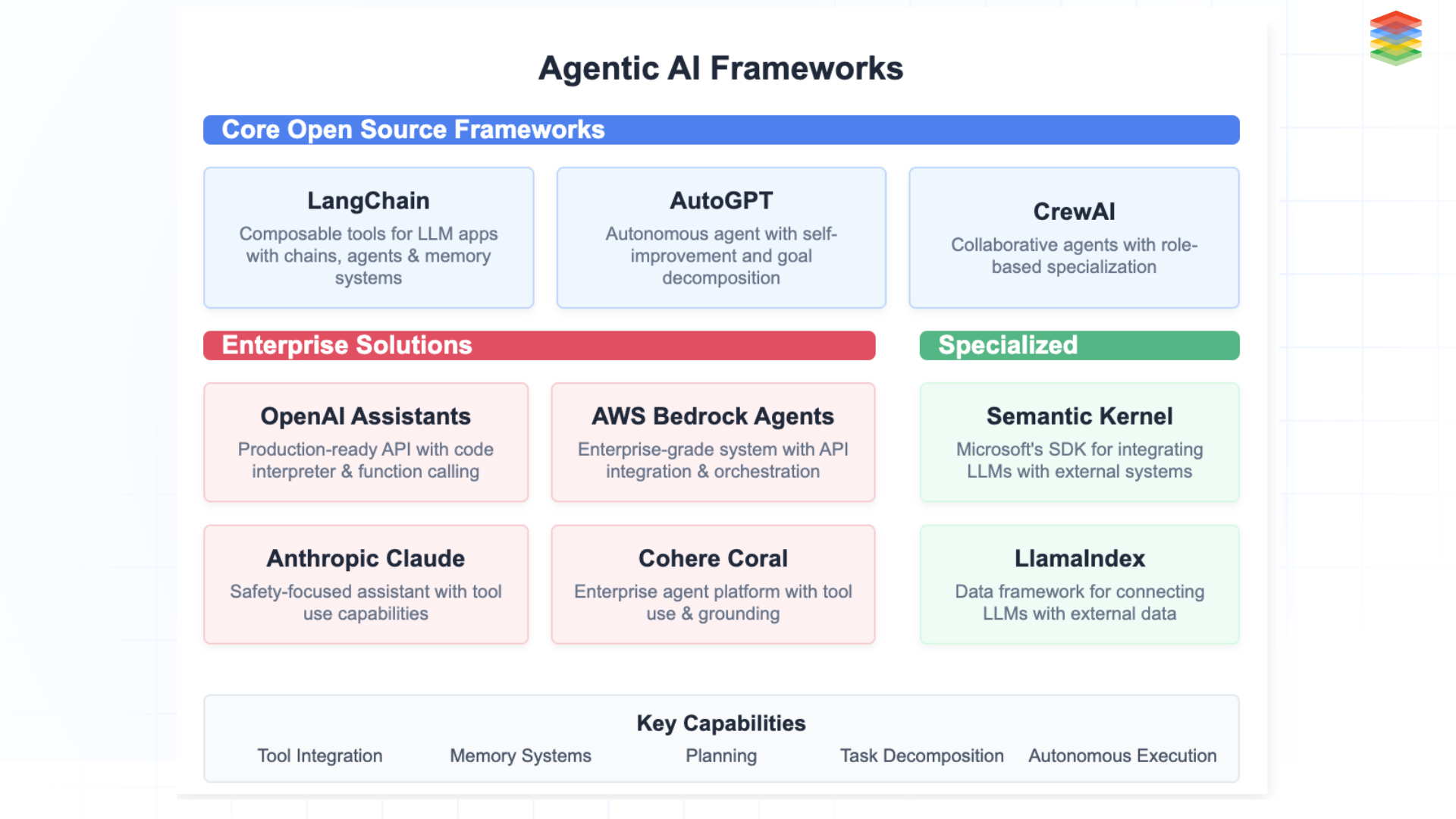

Frameworks for Agentic AI

In the emerging landscape of autonomous AI systems, several powerful frameworks are paving the way for machines that can think, plan, and act with minimal human oversight. These frameworks transform foundation models from passive responders into active problem-solvers capable of breaking down complex goals, utilizing tools, and executing multi-step plans. As AI transitions from generative to agentic capabilities, these specialized tools provide the crucial infrastructure that empowers models to function with increasing autonomy and effectiveness in real-world environments.

Fig 4: Agentic AI Framework

-

LangChain & AutoGPT: Pioneer frameworks enabling goal-oriented behaviour through modular tool integration and autonomous task execution, functioning as the "operating systems" for LLM-powered agents.

-

Specialized Agents: CrewAI for multi-agent collaboration, Semantic Kernel for symbolic-neural integration, and BabyAGI for autonomous task management—each addressing specific aspects of the agentic ecosystem.

-

Enterprise Solutions: AWS Bedrock Agents, OpenAI Assistants API, and Anthropic Claude Tools bring production-ready agentic capabilities to businesses with enhanced safety, scalability, and integration features.

Operationalising Agentic AI Solution with Infrastructure

Building effective agentic AI requires more than selecting a framework—it demands a systematic approach to training, testing, and deployment. Here's how to move from concept to production:

Foundation Model Selection & Tuning

Choose foundation models with strong reasoning capabilities, then optimize them specifically for agentic behaviour:

-

Fine-tune on expert demonstrations showing effective planning and tool use

-

Implement constitutional AI techniques for safety without sacrificing autonomy

-

Apply RLHF with feedback on complete agent trajectories, not just outputs.

Rigorous Testing Framework

Evaluate agents across multiple dimensions using:

-

Controlled environments with progressive complexity

-

Test suites measuring goal achievement, planning quality, and adaptability

-

Comparative benchmarks against human performance on identical tasks

-

AgentBench or similar standardized metrics for consistent evaluation

Specialized Optimization

Train agents using multi-objective optimization that balances:

-

Goal completion accuracy (primary objective)

-

Plan coherence and efficiency (minimizing unnecessary steps)

-

Tool selection appropriateness (using the right tool for each task)

-

Safety constraint adherence (avoiding risky actions)

Infrastructure Requirements

Deploy with robust infrastructure, including:

-

Low-latency inference systems for real-time decision-making

-

Sandboxed execution environments for tool usage

-

Comprehensive logging and monitoring of agent activities

-

Memory-optimized systems for maintaining context across interactions

Progressive Deployment Strategy

Follow a measured approach to production:

-

Begin with human oversight for all agent actions

-

Gradually increase autonomy for well-tested tasks.

-

Implement automatic escalation for low-confidence decisions.

-

Establish continuous feedback loops to improve performance.

Addressing these operational aspects while maintaining appropriate safeguards can help organisations build reliable agentic AI systems that deliver real value. The key is treating agent development as a distinct discipline with its own unique requirements, metrics, and best practices.

Emerging Trends of Agentic AI Development Stack in 2025

As we move into 2025, the agentic AI development stack has matured significantly, with specialized tools and platforms emerging to address the unique challenges of building autonomous AI systems. The ecosystem has evolved from experimental frameworks to production-ready solutions that enable organizations to build, deploy, and manage agentic AI at scale.

Fig 5: Agentic AI Development Stack

Fig 5: Agentic AI Development Stack

Key Trends Driving the 2025 Stack

As we enter 2025, the agentic AI development stack has matured from experimental to enterprise-ready. Organizations are now deploying autonomous systems at scale with specialized components across the stack.

1. Foundation Models Built for Agency

-

Enhanced planning and reasoning capabilities

-

Built-in tool-use understanding

-

Longer context windows for complex tasks

-

Reduced hallucination for factual operations

2. Standardized Tool Integration

-

OpenTools Protocol for universal connectivity

-

Centralized security-verified tool registries

-

Simplified API authorization frameworks

3. Enterprise-Grade Agent Frameworks

-

Enterprise versions with SLAs and compliance features

-

Low-code agent creation platforms

-

Industry-specific solutions for finance, healthcare, and manufacturing

4. Reliability and Scale Infrastructure

-

Specialized cloud platforms for agent hosting

-

Advanced observability for tracking agent actions

-

Secure sandboxed execution environments.

5. Multi-Agent Orchestration

-

Coordination tools for specialized agent teams

-

Role-based architecture frameworks

-

Centralized monitoring dashboards

Implementation Challenges of Agentic AI Stack

Building an agentic AI stack unlocks powerful capabilities—autonomous planning, tool use, and multi-agent collaboration. However, implementing such systems comes with several practical and technical hurdles.

-

Agent Coordination and orchestration are challenging in dynamic workflows because they involve managing agents' communication, sharing tasks, and avoiding conflicts.

-

Long-Term Planning & Goal Decomposition: Breaking abstract goals into concrete, adaptive steps remains an unsolved problem for many systems.

-

Security & Safety Risks: Autonomous agents with tool access can misbehave or be manipulated via prompt injections or unsafe commands.

-

Evaluation & Debugging: It’s challenging to trace agent decisions or test performance due to non-deterministic behaviour and limited transparency.

-

Tooling Maturity & Developer Experience: Current frameworks often lack robust support for debugging, monitoring, and scaling agent-based architectures.

Despite these challenges, progress is rapidly being made, and emerging standards are making the space more reliable. As the ecosystem matures, the agentic AI stack has the potential to become a foundational layer in next-gen AI systems.

In Summary: Dissecting the Agentic AI Tech Stack

The agentic AI tech stack represents the architecture enabling autonomous AI systems to accomplish complex goals through planning, reasoning, and action. Built upon foundation models (LLMs) that provide core intelligence, this stack incorporates specialized layers for agent frameworks (handling planning, memory, and self-reflection), tool integration (connecting to external services and data sources), execution environments (providing sandboxed runtime and permissions), and orchestration (coordinating multiple agents).

As the technology matures into 2025, we're seeing the emergence of agent-optimized foundation models with built-in tool understanding, standardized integration protocols, enterprise-grade frameworks with compliance features, specialized cloud infrastructure, and sophisticated multi-agent orchestration systems—together forming a comprehensive ecosystem that transforms AI from passive responders to proactive problem-solvers capable of breaking down tasks, using appropriate tools, and achieving objectives with minimal human guidance.

Fig 5: Agentic AI Development Stack

Fig 5: Agentic AI Development Stack.webp?width=1921&height=622&name=usecase-banner%20(1).webp)