Introduction to Test Automation in DevOps

Software testing is an important part of software development and can be one of the most time-consuming aspects of the DevOps process. It can be tedious and frustrating, and it requires many hours of work, which slows down release cycles. Recently, a strong trend has emerged in which DevOps professionals encourage developers to automate their testing. Test automation can help DevOps teams eliminate redundancies, create a more unified approach across teams, and make development more efficient.

Why Is Test Automation Critical to Continuous Testing?

Test automation is not the same as continuous testing, but you cannot implement continuous testing without automation. Test automation is about running tests automatically, whereas continuous testing depends on test automation to deliver on its principles of speed, quality, and efficiency. It is essential to understand the distinction.

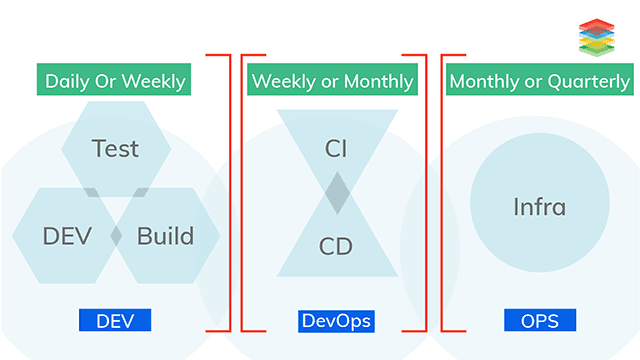

Let's try to understand this. As businesses move from a traditional testing approach toward DevOps practices, Continuous Integration and Continuous Delivery (CI and CD) are key to achieving the desired speed.

In the CD model, software development is an ongoing process, so the deployment-readiness of software matters more than ever. Continuous testing ensures consistent quality output at every stage of development, with testing happening incrementally throughout the cycle.

To achieve continuous testing, however, test organization, efficiency, and speed are all necessary. Organizing all these testing needs is a challenge, and that is where test automation comes in.

Test automation enables testers to focus their energy and effort on writing better test cases. It simplifies the process by automating the tracking and management of all these testing requirements. Automated testing is designed to take the system's internal structure into account and to provide a quick feedback loop that helps developers control the system design, which in turn improves the overall quality of the software. It is generally acknowledged that test automation has to start in the early stages of the development cycle.

The speed at which all development and testing occur matters quite a lot. That's because if something in the pipeline breaks down, it holds up everything else and slows down the release of new developments.

The need to deliver new releases faster and more regularly paved the way for the continuous delivery and testing model. A roadblock in the pipeline defeats the purpose of taking this approach.

How Do You Enable Continuous Testing?

The primary role of the tester is to deliver quality software at the end. Achieving this goal calls for a different approach, because the manual method alone is very time-consuming and unreliable. Our goal is to apply different types of testing, manual and automated, continually throughout the delivery process.

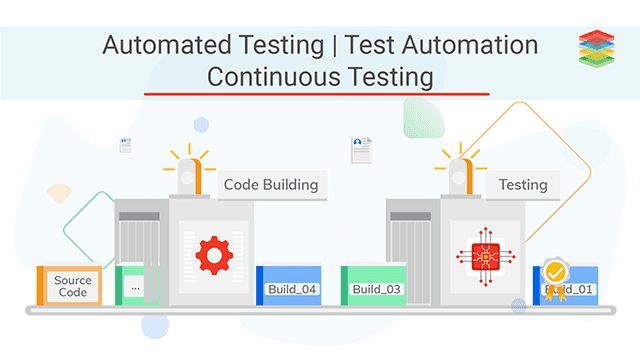

Once CI and test automation are in place, we can create a deployment pipeline. Every code change triggers a build that:

a) creates packages that can be deployed to any environment

b) runs unit tests.

Packages/modules that pass move on to automated testing. The tester writes automation test scripts for the code, and each run reports a pass or fail status. Passing code is promoted for self-service deployment to other environments for exploratory testing, usability testing, and ultimately release. Failing code goes back to the developer for changes.
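The flow described above (build, unit tests, automated tests, then promote or send back) can be sketched as a fail-fast sequence of stages. This is a minimal illustration, not any particular CI server's API: `Stage`, `run_pipeline`, `BROKEN_CHANGES`, and the change IDs are hypothetical names, and the lambdas stand in for real packaging, unit-test, and automation-script steps.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Stage:
    """One pipeline stage: a name plus a check that returns True on success."""
    name: str
    run: Callable[[str], bool]

@dataclass
class PipelineResult:
    passed: bool
    completed: List[str] = field(default_factory=list)
    failed_stage: str = ""

def run_pipeline(change_id: str, stages: List[Stage]) -> PipelineResult:
    """Run the stages in order and stop at the first failure (fail fast)."""
    result = PipelineResult(passed=True)
    for stage in stages:
        if not stage.run(change_id):
            # A failing stage sends the change back to the developer.
            result.passed = False
            result.failed_stage = stage.name
            return result
        result.completed.append(stage.name)
    # Every stage passed: the package can be promoted to other environments.
    return result

# Hypothetical set of changes whose automated tests fail, to simulate a red build.
BROKEN_CHANGES = {"change-2"}

# Hypothetical stand-ins for real tooling (packaging, unit tests, automation scripts).
STAGES = [
    Stage("build_package", lambda change: True),
    Stage("unit_tests", lambda change: True),
    Stage("automated_tests", lambda change: change not in BROKEN_CHANGES),
]

for change in ["change-1", "change-2"]:
    outcome = run_pipeline(change, STAGES)
    if outcome.passed:
        print(f"{change}: promote for exploratory and usability testing")
    else:
        print(f"{change}: failed at {outcome.failed_stage}, back to the developer")
# change-1: promote for exploratory and usability testing
# change-2: failed at automated_tests, back to the developer
```

Stopping at the first failing stage is the design choice that keeps feedback fast: a change that breaks unit tests never consumes time in the slower automated-test stage.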

If testers detect no defects, any module that has gone through the pipeline should be releasable. If defects are found later, testers should improve the pipeline by adding or updating tests. The tester's primary goal is therefore to find bugs and issues as early as possible, which is why testers want to parallelize these activities.

CI/CD is a set of operating principles and practices that enable application development teams to deliver changes reliably. Source: Continuous Integration and Continuous Delivery

Automated Software Testing: The Glue Between Dev and Ops

The different stages are discussed below:

- Developers write code and commit it to version control (SCM). This is the first stage in DevOps, where you manage the code written by your developers.

- The next phase is continuous integration. Rather than waiting for all the developers to commit their code before building and testing it, a continuous integration approach merges each developer's commit into the main branch as soon as it is made. A CI server such as Jenkins, triggered by changes in the Git repository, then builds the code, after which you can test it in a virtualized environment.

This saves a lot of time in moving code from the development environment to the test environment, because we need not wait for all the developers to commit. However, continuous integration is of little use if you are not also testing continuously, so the next stage is continuous testing. At this stage, you automate your tests by writing scripts with tools such as Selenium or Katalon Studio and then run them through Jenkins in a virtualized environment.

- If a test fails, the pipeline stops here and the issues are sent back to the developers. If the tests pass, we move on to continuous deployment.

In the continuous deployment stage, we have two parts:

- Configuration management

- Containerization

Consider five servers that are up and running and need the same libraries and tools installed. In the past, many teams used shell scripts for this job. But if you need an identical configuration on 100 servers, shell scripts become complicated, so you need a tool that automates the job. Puppet is one of the most popular tools for configuration management.
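Configuration management tools such as Puppet work from a declared desired state and apply only the changes a server actually needs, so running them again is safe. The sketch below illustrates that idea in plain Python; it is not Puppet's language or API, and `DESIRED`, `plan_changes`, `converge`, the package names, and the versions are all hypothetical.

```python
from typing import Dict, List

# Hypothetical desired state: package name -> required version.
DESIRED = {"openjdk": "17", "nginx": "1.24"}

def plan_changes(actual: Dict[str, str], desired: Dict[str, str]) -> List[str]:
    """List the actions needed to bring one server to the desired state.

    Packages that already match produce no action, so re-running is safe (idempotent).
    """
    actions = []
    for pkg, version in sorted(desired.items()):
        current = actual.get(pkg)
        if current is None:
            actions.append(f"install {pkg}={version}")
        elif current != version:
            actions.append(f"upgrade {pkg} {current}->{version}")
    return actions

def converge(fleet: Dict[str, Dict[str, str]], desired: Dict[str, str]) -> Dict[str, List[str]]:
    """Plan and 'apply' changes on every server in the fleet."""
    report = {}
    for host, packages in fleet.items():
        report[host] = plan_changes(packages, desired)
        packages.update(desired)  # stand-in for actually installing packages
    return report

fleet = {"web1": {"openjdk": "17"}, "web2": {"openjdk": "11", "nginx": "1.24"}}
print(converge(fleet, DESIRED))
# {'web1': ['install nginx=1.24'], 'web2': ['upgrade openjdk 11->17']}
print(converge(fleet, DESIRED))
# {'web1': [], 'web2': []}
```

The same declaration scales from five servers to a hundred: only the fleet inventory grows, not the logic, which is the advantage over hand-maintained shell scripts.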

The second part of continuous deployment is containerization. In this stage, as mentioned before, you can create a test environment in which to run and test your application. Used for testing, a container gives you a virtualized environment where you can run scripts, such as Selenium scripts, against your application. 90% of organizations use containerization only in the testing stage, and only 10% use it in production. Docker 1.9, released in 2015, was stable enough to run in production as well. For example, if you need to run some applications in production for a short period, you can quickly create a container in production and run those applications in it. When you no longer need those applications, you can destroy the container.

Once your application is deployed, you need to monitor it to check that it is performing as desired and that the environment is stable. This is where continuous monitoring comes into the picture. By monitoring the application, you can quickly determine when a service is unavailable, understand the underlying causes, and report the issues back to the developers. Nagios is one of the most popular tools for continuous monitoring.
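Nagios checks are typically small plugins that probe a service and report through their exit code (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN) plus a one-line status message. The sketch below is a minimal HTTP check in that style using only the standard library. The function names and the warning/critical thresholds are assumptions for illustration; a real Nagios setup would also need the plugin registered in its configuration.

```python
import time
import urllib.error
import urllib.request
from typing import Optional, Tuple

# Standard Nagios plugin return codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

def classify(status: Optional[int], elapsed: float,
             warn_s: float = 1.0, crit_s: float = 3.0) -> Tuple[int, str]:
    """Turn an HTTP status (None = no response) and a response time into (code, message)."""
    if status is None:
        return CRITICAL, "CRITICAL - service unavailable"
    if status >= 400:
        return CRITICAL, f"CRITICAL - HTTP {status}"
    if elapsed >= crit_s:
        return CRITICAL, f"CRITICAL - response took {elapsed:.2f}s"
    if elapsed >= warn_s:
        return WARNING, f"WARNING - response took {elapsed:.2f}s"
    return OK, f"OK - HTTP {status} in {elapsed:.2f}s"

def check_http(url: str, timeout: float = 5.0) -> Tuple[int, str]:
    """Probe a URL once, time the response, and classify the result."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered, but with an error status
    except OSError:
        status = None  # refused, DNS failure, or timeout (URLError subclasses OSError)
    return classify(status, time.monotonic() - start)
```

As a plugin, a small wrapper would call `check_http` on a hypothetical endpoint such as `http://localhost:8080/health`, print the message, and pass the code to `sys.exit` so Nagios can read the result. Keeping `classify` separate from the network call makes the decision logic easy to test.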


Why Is Automated Testing Essential for CI/CD?

Continuous integration is necessary to keep everyone working on a team in sync. It is about collaboration among all the people involved in building something, and keeping them in sync. How does that happen? Through automated testing, validation, and feedback.

CI is a development practice that requires developers to integrate code into a shared repository several times a day. An automated build verifies each check-in, allowing the team to detect problems early. Practicing CI requires automation, especially in testing. As a result, teams that practice CI gain more confidence in the code, at least in a development environment, and that confidence is essential for continuous delivery.
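The "automated build verifies each check-in" step usually boils down to running the test suite and turning the result into a pass/fail signal the CI server understands. Below is a minimal sketch using Python's standard `unittest` module; `apply_discount` is hypothetical code under test, and `verify_check_in` is an illustrative name, not a Jenkins or CI API.

```python
import unittest

def apply_discount(price: float, percent: float) -> float:
    """Hypothetical code under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)

class DiscountTests(unittest.TestCase):
    def test_basic_discount(self):
        self.assertEqual(apply_discount(100.0, 15), 85.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)

def verify_check_in() -> bool:
    """What a CI job might run on every commit: the whole suite, reduced to pass/fail."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountTests)
    result = unittest.TextTestRunner(verbosity=1).run(suite)
    return result.wasSuccessful()
```

In a CI job, the script would exit with a non-zero status when `verify_check_in()` returns `False`; most CI servers, Jenkins included, treat a non-zero exit code from a build step as a failed build and report it back to the team.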

Automation matters because deploying software is not a simple process of copying a set of files from one place to another. Especially for large enterprise software, there may be binary files to deploy and configuration files to move over. You may have an app server that needs to be restarted when configurations change, and you have to wait for it to come back up. Meanwhile, you may need to apply changes to the database schema and then restart the database service. To ensure that all the services are talking to each other, you copy over the new binary files and restart them. And you may have multiple nodes of your application server.

You need to make sure all the nodes run the same version of the software with the same configuration, and all of this must happen in a coordinated manner, with the right steps happening at the right time, which takes time. Delivery automation tools help you automate this and codify the process of deploying to any environment. But when you deploy, you are not deploying just software; you are also deploying the environment.
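The coordination problem above (ordered steps per node, and every node ending on the same version and configuration) can be sketched as a rolling deployment. This is a simplified in-memory model: `Node`, `deploy_to_node`, and `rolling_deploy` are hypothetical, and setting attributes stands in for stopping servers, copying binaries, and restarting services on real hosts.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Node:
    """Hypothetical application-server node; a real one would be a remote host."""
    name: str
    version: str
    config: str
    running: bool = True

def deploy_to_node(node: Node, version: str, config: str) -> None:
    """Ordered steps on one node: stop, copy binaries, apply config, restart."""
    node.running = False    # stop the app server
    node.version = version  # copy the new binaries
    node.config = config    # apply the environment's configuration
    node.running = True     # restart

def rolling_deploy(nodes: List[Node], version: str, config: str) -> None:
    """Update nodes one at a time so the rest keep serving traffic,
    then verify the whole fleet ended on one version and one configuration."""
    for node in nodes:
        deploy_to_node(node, version, config)
        if not node.running:  # stand-in for a real post-restart health check
            raise RuntimeError(f"{node.name} did not come back up; halting rollout")
    states = {(n.version, n.config) for n in nodes}
    if len(states) > 1:
        raise RuntimeError(f"inconsistent fleet after deploy: {states}")

nodes = [Node("app1", "1.0", "cfg-v1"), Node("app2", "1.0", "cfg-v1")]
rolling_deploy(nodes, "1.1", "cfg-v2")
print([(n.name, n.version, n.config) for n in nodes])
# [('app1', '1.1', 'cfg-v2'), ('app2', '1.1', 'cfg-v2')]
```

Updating one node at a time and halting on the first unhealthy node is one common rollout strategy; real delivery tools add steps this sketch leaves out, such as database schema migrations and rollback.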

The environment, whether physical, virtual, or in the cloud, may already be provisioned. If it is not, you need to provision it before you can deploy the software. You may also need to make configuration changes, because configuration settings in dev will be very different from those in QA, which will in turn differ from those in prod. The actual environment and middleware stack might be the same, but the configuration will differ.
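One common way to express "same stack, different configuration" is a shared base configuration plus a small set of per-environment overrides. The sketch below assumes hypothetical keys and values (`app_server`, `db_pool_size`, and so on); it shows the merge pattern, not any specific tool's configuration format.

```python
from typing import Any, Dict

# Shared settings: the same middleware stack everywhere (hypothetical keys and values).
BASE: Dict[str, Any] = {
    "app_server": "tomcat",
    "db_pool_size": 5,
    "debug": True,
    "log_level": "DEBUG",
}

# Only the differences are recorded per environment.
OVERRIDES: Dict[str, Dict[str, Any]] = {
    "dev": {},
    "qa": {"db_pool_size": 10, "log_level": "INFO"},
    "prod": {"db_pool_size": 50, "debug": False, "log_level": "WARNING"},
}

def config_for(env: str) -> Dict[str, Any]:
    """Merge the base settings with one environment's overrides (later keys win)."""
    if env not in OVERRIDES:
        raise KeyError(f"unknown environment: {env}")
    return {**BASE, **OVERRIDES[env]}

for env in ("dev", "qa", "prod"):
    print(env, config_for(env))
```

Keeping the overrides small makes it obvious what actually differs between dev, QA, and prod, and lets an automated deployment apply the right configuration for whichever environment it targets.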

Automated deployment, then, is the ability to deploy software to a particular environment at a given time. Continuous delivery is the capability to deploy software to any environment at any time, which again includes the binaries, the configuration changes, and changes to the environment itself.

Importance of Continuous Testing & Automation Testing in Agile

Early Defects, Less Cost

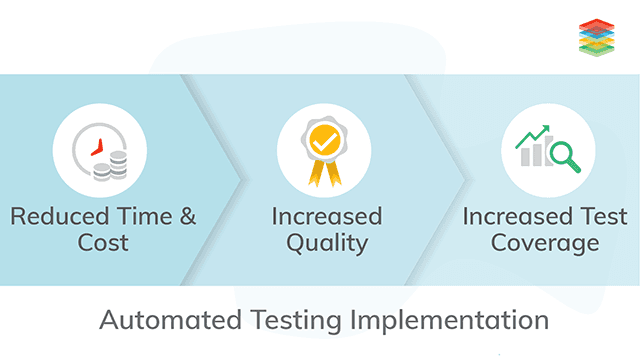

Test automation for continuous agile delivery helps in the early identification of software defects and bugs. Fixing bugs early reduces the cost to the company. A suitable testing process in the early stages of production allows faster recovery from unexpected defects in the product.

Easy Automation

A continuous delivery model requires continuous testing, and this is possible only through test automation. Automation thus helps coordinate quality assurance efforts with the speed of DevOps, helping developers deliver unique software features more quickly.

Reduce Testing Efforts

Automation tools help reduce testing effort. With the focus on continuous development, they serve both the testing team and overall product development.

What are the Challenges of Continuous Testing and Automation Testing?

Increase in Speed and Performance

Automation readily delivers speed and performance. While it is tempting to automate every mode of testing, manual testing is still required for regression and exploratory testing at the UI level.

Gain Efficiency

Building test environments and configuring an automation framework require considerable expertise and effort. The most significant investment in gaining test automation coverage is the time and cost of setting up a useful automation framework. Finding skilled automation experts is also a challenge that many organizations confront.

Robust Planning & Execution

By automating workflows, companies can reduce the cost and time of testing. With planned test management that uses the power of automation, product owners can quickly identify critical bugs in their applications and track their fixes. Developers can then concentrate on delivering new features that improve the user experience.

Understanding Effective Test Coverage

Test coverage lets us assign a score to a collection of test cases. For example, function coverage measures how many of the program's functions are exercised by at least one test, out of the total number of functions. What's good about test coverage is that it gives us an objective score that helps us figure out how well we're doing.
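Function coverage can be computed as (functions executed by the tests) / (total functions). The sketch below measures it with Python's standard `sys.settrace` hook, which reports every function call. The three functions under test and `test_suite` are hypothetical, and matching functions by name only is a simplification; in practice a dedicated tool such as coverage.py would be used.

```python
import sys
from typing import Callable, List, Set, Tuple

# Hypothetical software under test: three functions.
def add(a, b):
    return a + b

def subtract(a, b):
    return a - b

def divide(a, b):
    return a / b

FUNCTIONS_UNDER_TEST = {"add", "subtract", "divide"}

def function_coverage(test: Callable[[], None], functions: Set[str]) -> Tuple[float, List[str]]:
    """Run `test` and return (fraction of `functions` called, names never called).

    Functions are matched by name only, a simplification for this sketch.
    """
    called: Set[str] = set()

    def tracer(frame, event, arg):
        if event == "call" and frame.f_code.co_name in functions:
            called.add(frame.f_code.co_name)
        return None  # no per-line tracing needed for function coverage

    previous = sys.gettrace()  # preserve any tracer already installed
    sys.settrace(tracer)
    try:
        test()
    finally:
        sys.settrace(previous)
    return len(called) / len(functions), sorted(functions - called)

def test_suite():
    assert add(2, 3) == 5
    assert subtract(5, 3) == 2

score, missing = function_coverage(test_suite, FUNCTIONS_UNDER_TEST)
print(f"function coverage: {score:.0%}, never called: {missing}")
# function coverage: 67%, never called: ['divide']
```

Besides the score, the list of uncalled functions tells us exactly which test cases are still missing.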

Additionally, when coverage is less than 100%, for example when our tests fail to execute all of the functions in the software under test, we know what we need to do to get full coverage: we know which functions still need to be executed, so we construct test cases that execute them. These are the good things about test coverage.

On the other hand, there are some disadvantages.

Because test coverage is a white-box metric derived from the source code of our system, it is not good at helping us find bugs of omission, that is, bugs where we simply left out something we should have implemented. It can also be hard to know what a test coverage score of less than 100% means. In safety-critical software development, one approach is to require 100% coverage for specific coverage metrics, which removes this problem: we don't have to interpret scores below 100% because we are not allowed to ship the product until we reach 100% coverage.

For larger, more complex software systems, where the correctness standards are not as high as they are for safety-critical systems, it is often difficult or impossible to achieve 100% test coverage. That leaves us with the problem of figuring out what a lower score means about the software.

Even 100% coverage does not mean that all bugs are found. You can see this easily with the example of measuring coverage by the number of functions executed: executing a function does not mean that we found all the bugs in it. We may not have executed very much of it, or we may not have exercised many of the behaviors inside it.
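A tiny example makes the point concrete. The hypothetical `absolute_value` below has a deliberate bug on its negative branch. The test calls the program's only function, so function coverage is 100%, and the test passes, yet the bug survives because no test input is negative.

```python
def absolute_value(x: int) -> int:
    """Intended to return |x|; contains a deliberate bug on the negative branch."""
    if x >= 0:
        return x
    return x  # bug: should be -x, but no test below reaches this line

def test_absolute_value():
    # Calls the only function in the "program", so function coverage is 100%...
    assert absolute_value(5) == 5
    assert absolute_value(0) == 0

test_absolute_value()      # ...and the test passes
print(absolute_value(-3))  # prints -3, not 3: the bug survived full function coverage
```

A finer-grained metric such as branch coverage would flag the untested `return x` on the negative path, but even 100% branch coverage cannot guarantee the absence of bugs.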

In Which Scenarios Is Automation Testing Best Suited?

- Load Testing: Automated testing suits load testing, where the tester repeatedly changes the load to find the point at which the application breaks.

- Regression Testing: Automated testing suits regression testing. Because the code changes so often, automation can run the regression suite in a timely manner.

- Repeated Execution: Testing that requires repeated execution of a task is better automated.

- Performance Testing: Similarly, testing that requires simulating thousands of concurrent users should be automated.

- GUI Testing: Automation can also test GUI displays. Many tools record the user's actions and replay them any number of times, which helps compare actual and expected results.
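The load-testing idea in the list above (step the load up until the application breaks) can be sketched with threads from Python's standard library. `error_rate` simulates concurrent users against any request callable, and `find_breaking_point` ramps through load levels until the error rate crosses a threshold. Both names, the 1% threshold, and the load levels are assumptions for illustration; real load tests normally use dedicated tools that can generate far more traffic than a single Python process.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Optional

def error_rate(request: Callable[[], None], concurrent_users: int,
               requests_per_user: int = 5) -> float:
    """Simulate `concurrent_users` users, each sending `requests_per_user` requests.

    Returns the fraction of requests that raised an exception.
    """
    def user_session(_user_id: int) -> int:
        failures = 0
        for _ in range(requests_per_user):
            try:
                request()
            except Exception:
                failures += 1
        return failures

    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        failures = sum(pool.map(user_session, range(concurrent_users)))
    return failures / (concurrent_users * requests_per_user)

def find_breaking_point(measure: Callable[[int], float], levels: Iterable[int],
                        max_error_rate: float = 0.01) -> Optional[int]:
    """Step the load up level by level; return the first level whose error
    rate exceeds `max_error_rate`, or None if the service held up throughout."""
    for users in levels:
        if measure(users) > max_error_rate:
            return users
    return None
```

A usage sketch, with `call_checkout_api` as a hypothetical function that sends one request to the system under test: `find_breaking_point(lambda users: error_rate(call_checkout_api, users), [10, 50, 100, 200])`. Passing `measure` in as a function keeps the ramp-up logic independent of how the load is generated.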

Holistic Approach

DevOps automated testing plays a vital role in executing a holistic approach. To dig deeper into DevOps automated testing, you can also explore the following topics:

- Automation runbooks for site reliability engineering

- The role of continuous testing in continuous delivery
