Introduction to DataOps Methodology

DataOps is a new, independent approach to data analytics that covers the whole data life cycle, from data preparation to reporting. It helps IT operations and analytics teams understand the data and how it is interconnected. DataOps can be seen as a combination of the Agile methodology and DevOps, applied to managing data in line with business goals. The Agile methodology shortens the time it takes to align analytics with those goals.

What is DataOps?

DataOps is a term used by data analytics teams. It is an automated, process-oriented methodology that reduces cycle time and improves the quality of data analytics. Building new code is an essential part of DataOps, but improving the data warehouse is given equal importance. DataOps applies Agile processes to governance and analytics, and DevOps processes to building and optimizing code.

One of the main objectives of the DataOps methodology is to improve data analytics by increasing the quality, velocity, and reliability of data. It places particular emphasis on automation, collaboration, and integration among the teams that access, analyze, and operate on the data, such as data analytics, data science, ETL engineering, IT, and quality assurance.
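To make the emphasis on automation and data quality more concrete, here is a minimal sketch of an automated quality gate that a pipeline could run before publishing data. The record fields, the rule names, and the `run_quality_gate` helper are all hypothetical and only serve as an illustration; a real team would likely rely on a dedicated data testing tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CheckResult:
    name: str       # name of the rule that was evaluated
    passed: bool    # True when no row violated the rule
    failures: int   # number of rows that violated the rule


def run_quality_gate(
    rows: List[dict], checks: Dict[str, Callable[[dict], bool]]
) -> List[CheckResult]:
    """Evaluate every rule against every row and count the failing rows."""
    results = []
    for name, predicate in checks.items():
        failures = sum(1 for row in rows if not predicate(row))
        results.append(CheckResult(name, failures == 0, failures))
    return results


# Hypothetical order records; in practice these would come from a source system.
orders = [
    {"order_id": 1, "amount": 25.0, "country": "DE"},
    {"order_id": 2, "amount": -5.0, "country": "US"},
    {"order_id": 3, "amount": 12.5, "country": ""},
]

# Hypothetical rules agreed between the analytics and engineering teams.
checks = {
    "amount_non_negative": lambda r: r["amount"] >= 0,
    "country_present": lambda r: bool(r["country"]),
    "order_id_present": lambda r: r.get("order_id") is not None,
}

results = run_quality_gate(orders, checks)
for result in results:
    status = "ok" if result.passed else f"FAILED ({result.failures} rows)"
    print(f"{result.name}: {status}")
```

Running such a gate on every pipeline execution, rather than inspecting data by hand, is one way the cycle time and quality goals described above can support each other.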

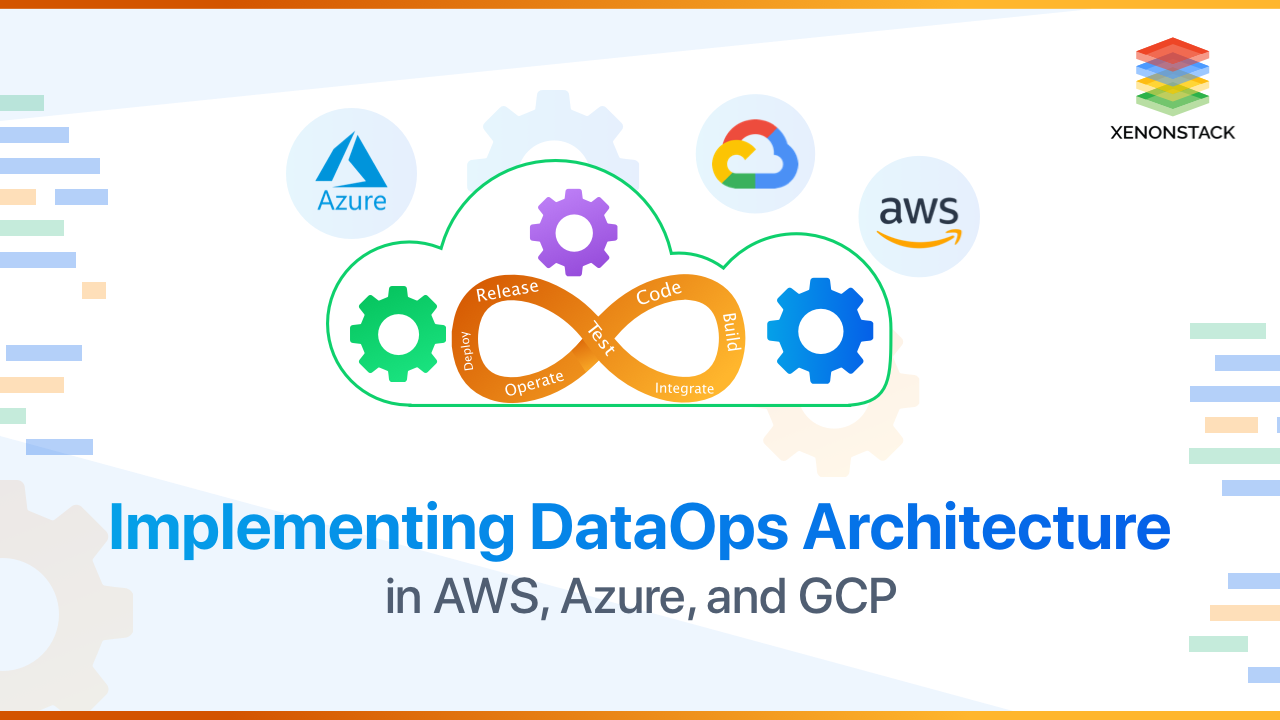

Implementing DataOps Methodology in AWS, Azure, and GCP

The DataOps methodology is well defined yet adaptable. Used correctly, it can bring significant rewards and growth to any organization. Its best practices help developers and data users better understand and access the data and improve its availability. AWS (Amazon Web Services), Azure, and GCP all provide cloud services that support an implementation of the DataOps methodology.

DataOps follows several principles for performance in a cloud-based ecosystem:

- Automated governance replaces simple metadata.

- Multi-dimensional agility replaces extensibility.

- Elasticity replaces enterprise scalability.

- Automation replaces manual data tasks.

- Data preparation and pipelines replace data cleansing.

- Multi-model data access replaces a single model.
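As an illustration of two of these principles, automation replacing manual tasks and data preparation pipelines replacing one-off cleansing, the following sketch chains small, reusable preparation steps into a single pipeline. The step functions, the `run_pipeline` helper, and the sample records are hypothetical and are not tied to any particular cloud service.

```python
from functools import reduce
from typing import Callable, Dict, List

Record = Dict[str, object]
Step = Callable[[List[Record]], List[Record]]


def drop_missing_email(records: List[Record]) -> List[Record]:
    """Remove records that have no usable email value."""
    return [r for r in records if r.get("email")]


def normalize_email(records: List[Record]) -> List[Record]:
    """Trim whitespace and lowercase the email without mutating the input."""
    return [{**r, "email": str(r["email"]).strip().lower()} for r in records]


def deduplicate_by_email(records: List[Record]) -> List[Record]:
    """Keep only the first record seen for each email."""
    seen = set()
    unique = []
    for r in records:
        if r["email"] not in seen:
            seen.add(r["email"])
            unique.append(r)
    return unique


def run_pipeline(records: List[Record], steps: List[Step]) -> List[Record]:
    """Apply each step in order, feeding the output of one into the next."""
    return reduce(lambda data, step: step(data), steps, records)


# Hypothetical raw input, as it might arrive from a source system.
raw = [
    {"name": "Ada", "email": " Ada@Example.com "},
    {"name": "Ada L.", "email": "ada@example.com"},
    {"name": "Bob", "email": None},
]

prepared = run_pipeline(
    raw, [drop_missing_email, normalize_email, deduplicate_by_email]
)
print(prepared)
```

Because each step is an ordinary function, the same steps can be reused across pipelines and tested on their own, which is the main design reason for composing them this way.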

DataOps focuses on business goals, data management, and improved data quality. It ensures that business goals are given the utmost importance and that each one is pursued with quality and efficiency in mind. Data analytics exists to drive the business and meet its requirements. Some real-life use cases in which a DataOps perspective, combined with the tools available from AWS, Azure, and GCP (Google Cloud Platform), has helped companies grow and scale are as follows:

- Finding new revenue.

- Improving health.

- Making music.

- Targeted marketing.

- Movies and TV on demand.

- Serving retail customers.

- Valuing real estate.

The increasing demand for real-time data and insights will add another layer of operational complexity that requires a radically different way to manage, process, transform and present data. Source: The Reasons Why DataOps Will Boom

How is DataOps Methodology beneficial for Businesses?

Service providers such as AWS, Azure, and GCP need to consider the needs of their client organizations. Every organization has a large amount of data at its disposal, but only a few get the best out of it. It is therefore essential to understand the client's needs and develop solutions that meet them. The ability to grow exponentially is a vital requirement for data owners, so finding new data sources, diversifying them, and storing the data accordingly is critical.

The main challenge with data is making it available when it is needed and to as many users as required. Organizations that use cloud services have many advantages over those that rely on local storage. Having stored data available at any time, with virtually no limit, is a major benefit for organizations that have opted for the cloud. Some of the main benefits of using data lakes in the cloud are:

- Eliminate silos of data.

- Move to a single source, i.e., a data lake in the cloud.

- Store data securely in standard formats.

- Grow to any scale at the lowest possible cost.

- Predict future outcomes.

What is the main objective of DataOps?

The primary purpose of DataOps is to provide trustworthy and efficient data insights for actionable business decisions when they are needed. To achieve this objective, the DataOps team should adhere to data governance and security principles. Data flows from various source systems, and teams collaborate continuously through shared platforms to resolve issues rapidly. The pipelines and workflows must be protected against potential threats and leaks. If high-value data falls into the wrong hands, or if the business relies on faulty or incorrect data, the damage can undermine trust in the system that provides the insights.

A Data Governance framework with strong security principles can bring that level of trust and reliability to big data implementations.
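One way to picture such a framework is a policy that masks sensitive columns according to tags held in a data catalog. The sketch below is only an illustration under stated assumptions: the `CATALOG_TAGS` and `ROLE_CLEARANCE` tables, the role names, and the `apply_policy` helper are hypothetical, and a plain truncated hash is used for brevity rather than as a recommended anonymization technique.

```python
import hashlib
from typing import Dict

# Hypothetical catalog: column name -> sensitivity tag.
CATALOG_TAGS = {"customer_id": "internal", "email": "pii", "revenue": "public"}

# Hypothetical policy: role -> tags that role may read in clear text.
ROLE_CLEARANCE = {
    "analyst": {"public", "internal"},
    "steward": {"public", "internal", "pii"},
}


def mask(value: object) -> str:
    """Replace a value with a short, deterministic token.

    An unsalted hash is used here only to keep the sketch short; it is not
    a strong protection for low-entropy values.
    """
    digest = hashlib.sha256(str(value).encode("utf-8")).hexdigest()
    return f"masked:{digest[:8]}"


def apply_policy(row: Dict[str, object], role: str) -> Dict[str, object]:
    """Return a copy of the row with columns masked for the given role."""
    allowed = ROLE_CLEARANCE.get(role, set())  # unknown roles see nothing
    result = {}
    for column, value in row.items():
        # Columns missing from the catalog get the most restrictive tag.
        tag = CATALOG_TAGS.get(column, "pii")
        result[column] = value if tag in allowed else mask(value)
    return result


row = {"customer_id": 42, "email": "ada@example.com", "revenue": 1200}

analyst_view = apply_policy(row, "analyst")
steward_view = apply_policy(row, "steward")
print(analyst_view)
print(steward_view)
```

Defaulting unknown columns and unknown roles to the most restrictive treatment is a deliberate choice in this sketch, so that a gap in the catalog leads to over-masking rather than to a leak.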

There are several reasons why DataOps should be part of a data-driven business, as it ultimately helps data teams mature and manage a data-driven architecture. From the perspective of a service provider such as AWS, Azure, or GCP, the architecture of the solution base can be outlined roughly as follows:

Visualization and Machine Learning:

- Dashboards

- ML Solution base.

Analytics:

- Data Warehousing

- Big Data processing

- Serverless Data processing

- Interactive Query

- Operational Analytics

- Real-time Analytics.

Data Lake:

- Infrastructure

- Security and management

- Data Catalog and ETL.

Data Movement:

- Migration of data.

- Streaming Services.

Benefits of the DataOps Methodology

An organization that adopts a DataOps strategy can achieve many benefits:

- Access to real-time insights.

- Greater predictability across possible cases, which increases transparency.

- Much higher data quality assurance.

- Produce a unified data hub with features like interoperability.

- Reuse of code.

- Reduced data science application cycle times.

Conclusion

Data Operations, or DataOps, is an agile strategy for designing, implementing, and maintaining a distributed data architecture that supports a wide range of open-source frameworks and production tools. The objective of DataOps is to create business value from big data by managing analytics across the whole data life cycle.
