Understanding Semantic Analysis

The word semantic is a Linguistic term. It means something related to meaning in a language or logic.Understanding How Semantic Search works - In a natural language, semantic analysis is relating the structures and occurrences of the words, phrases, clauses, paragraphs etc and understanding the idea of what’s written in particular text. Does the formation of the sentences, occurrence Semantic Analysis, Semantic Search,Domain Ontology, Natural Language Processing of the words make any sense? The challenge we face in the technologically advanced world is to make the computer understand the language or logic as much as the human does. Semantic analysis requires rules to be defined for the system. These rules are same as the way we think about a language and we ask the computer to imitate. For example, “apple is red” is a simple sentence which a human understands that there is something called as Apple and it is red in colour and the human knows that red means color. For a computer, this is an alien language. The concept of linguistics here is this sentence formation has a structure in it. Subject-Predicate-object or in short form s-p-o. Where "apple" is subject, "is" is predicate and "red" are objects. Similarly, there are other linguistic nuances that are used in the semantic analysis.Need of Semantic Analysis

The reason why we want the computer to understand as much as we do is that we have a lot of data and we have to make the most out of it. Let us strictly restrict ourselves to text data. Extracting appropriate data (results) based on the query is one of the challenging tasks. This data can be a whole document or just an answer to a query and that depends on the query itself. Assume that we have million text documents in our database and if we have a query for which the answer is in the documents. The challenges are- Getting the appropriate documents

- Listing them in the ranked order

- Giving the answer to the query if it is specific

Keyword-based Search vs Semantic Search

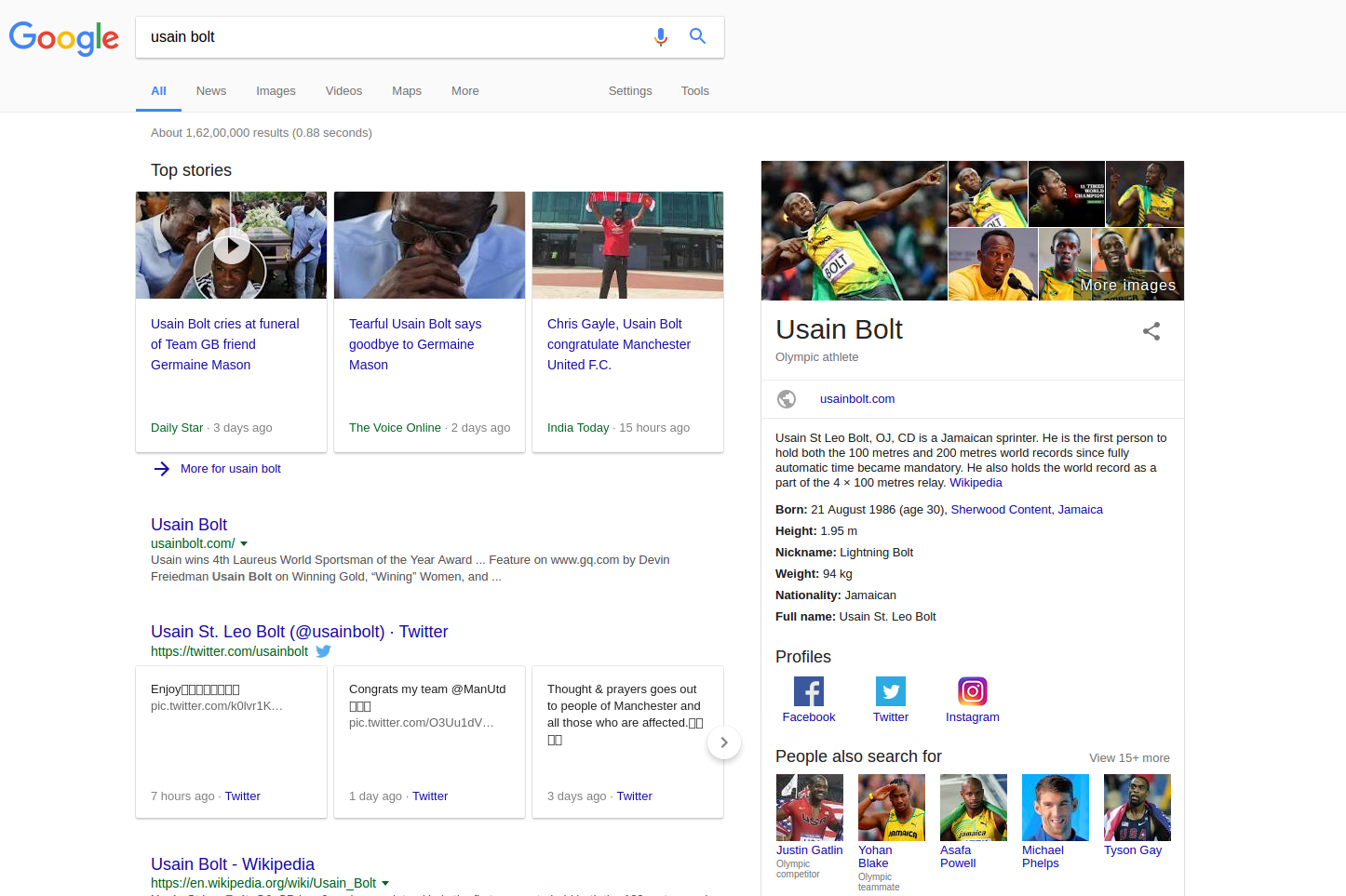

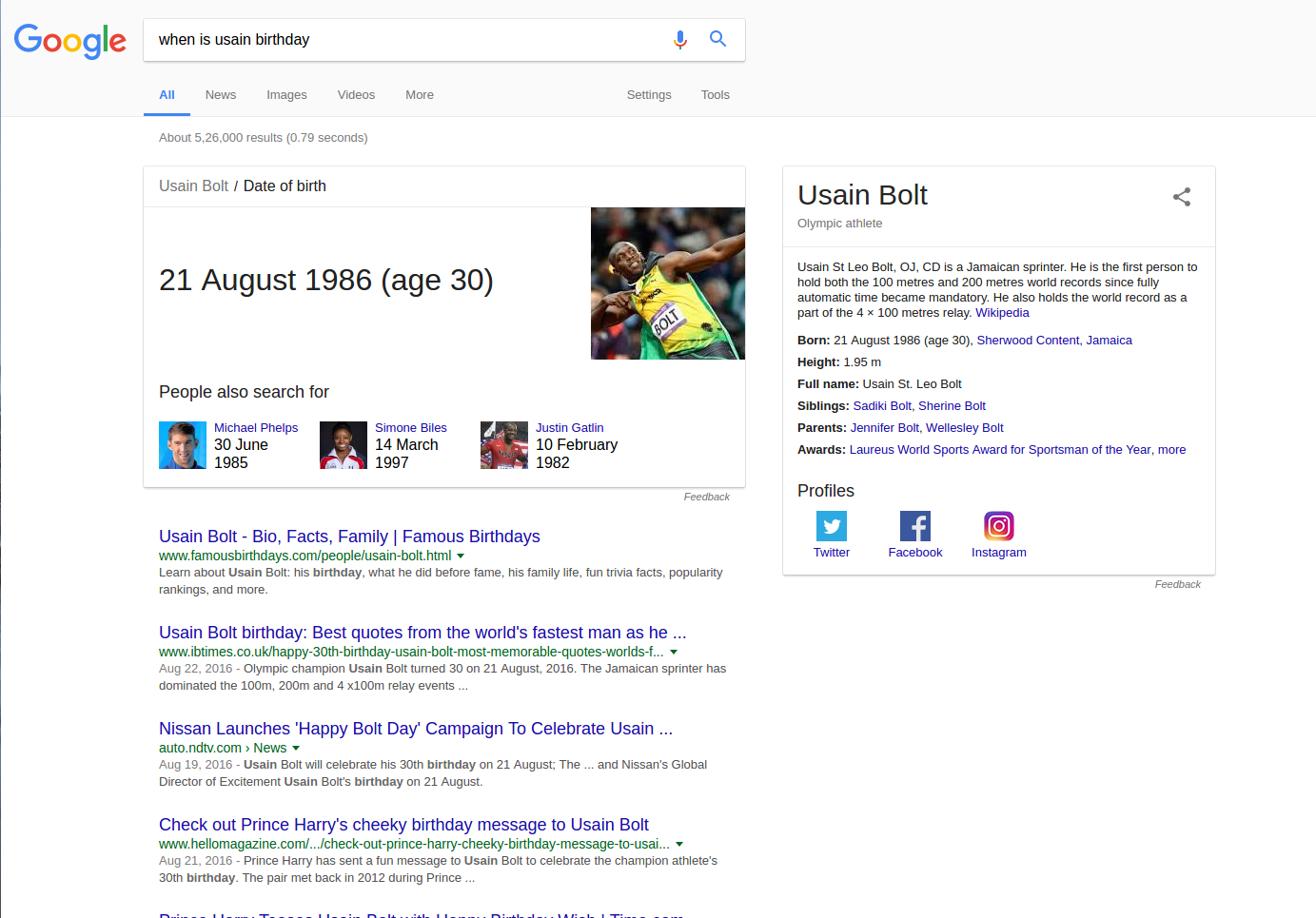

In a search engine, a keyword-based search is the searching technique which is implemented in the text documents based on the words that are found in the query. The query is initially processed for text cleaning and preprocessing and then based on the words used in the query the searching is done on the documents. The documents are returned based on the most number of matches of the query words with documents. In semantic search, we take care of the frequency of the words, syntactic structure of the natural language and other linguistic elements. In semantic search, the system understands the exact requirement of the search query. When we search for “Usain Bolt” in Google, it returns the most appropriate documents and web pages regarding the famous athlete despite much more people with the same name since the search engine understands that we are searching for an athlete. Now, if we are a little specific in our search and search for Usain Bolt birthday, Google returns it as,

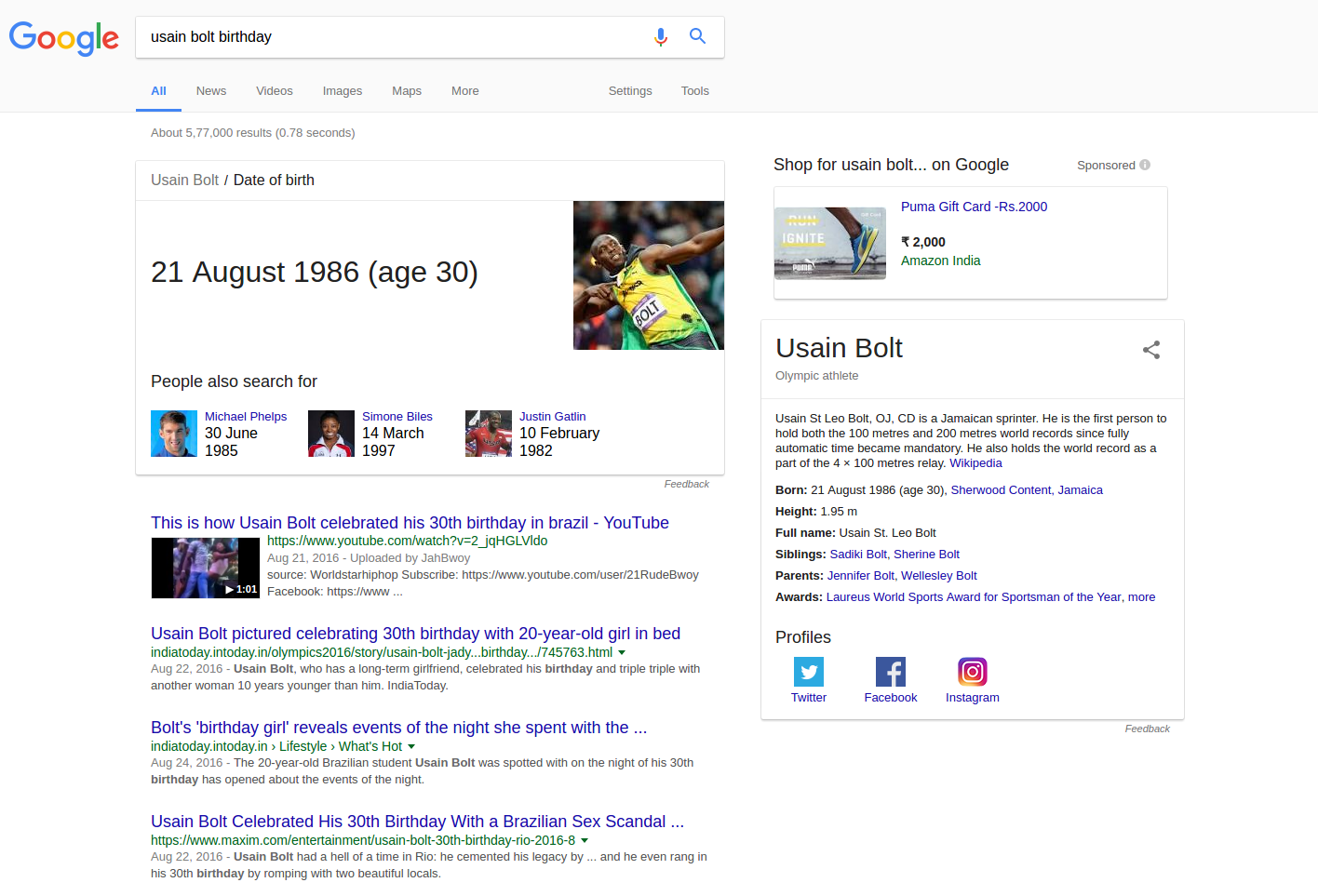

Now, if we are a little specific in our search and search for Usain Bolt birthday, Google returns it as,  So, since Usain Bolt is quite a famous figure it might not be a surprising aspect for us. But there are a large number of other famous personalities and it is close to impossible to store all the information manually and show up accurately when a query is given by the user. Moreover, the search query may not be constant. Each individual may query differently. Semantic techniques are applied here to store the data and fetch the results upon querying. Let us see a different way of querying the above on Google

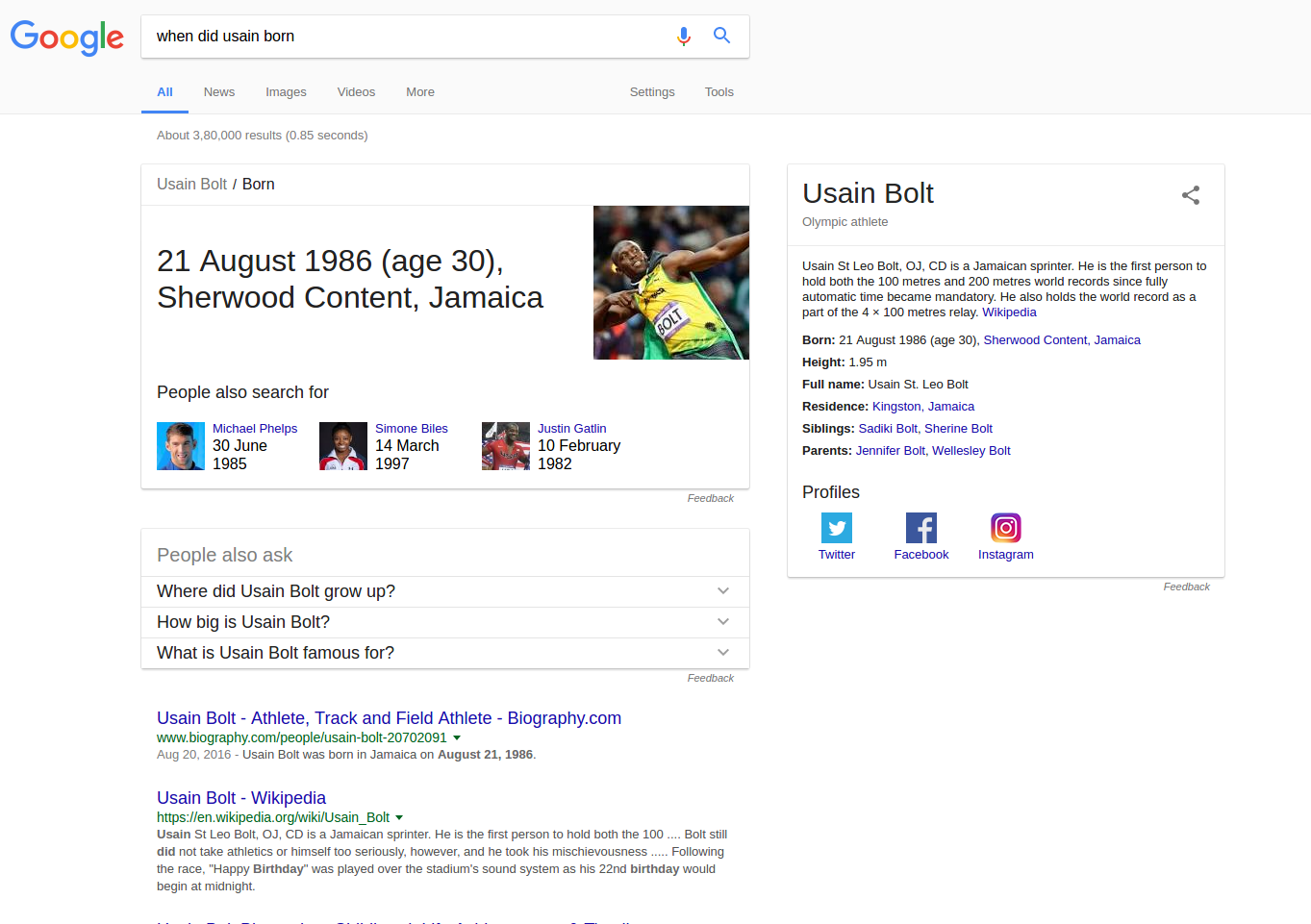

So, since Usain Bolt is quite a famous figure it might not be a surprising aspect for us. But there are a large number of other famous personalities and it is close to impossible to store all the information manually and show up accurately when a query is given by the user. Moreover, the search query may not be constant. Each individual may query differently. Semantic techniques are applied here to store the data and fetch the results upon querying. Let us see a different way of querying the above on Google

From above figures, it is evident that whatever way you give the search query, the search engine understands the intent of the user.

From above figures, it is evident that whatever way you give the search query, the search engine understands the intent of the user.

You May also Love to Read Overview of Artificial Intelligence and Role of NLP in Big Data

Domain Ontology based Semantic Search

Earlier, we have seen search efficiency of Google which searches irrespective of any particular domain. Searches of this kind are based on open information extraction. What if we require a search engine for a specific domain? The domain may be anything. A college, A particular sport, a specific subject, a famous location, tourist spots etc. For example, suppose we have a college and we want to create a search engine only for that college such that any text query regarding the college is answered by the search engine. For this purpose, we create domain ontology.What is an Ontology?

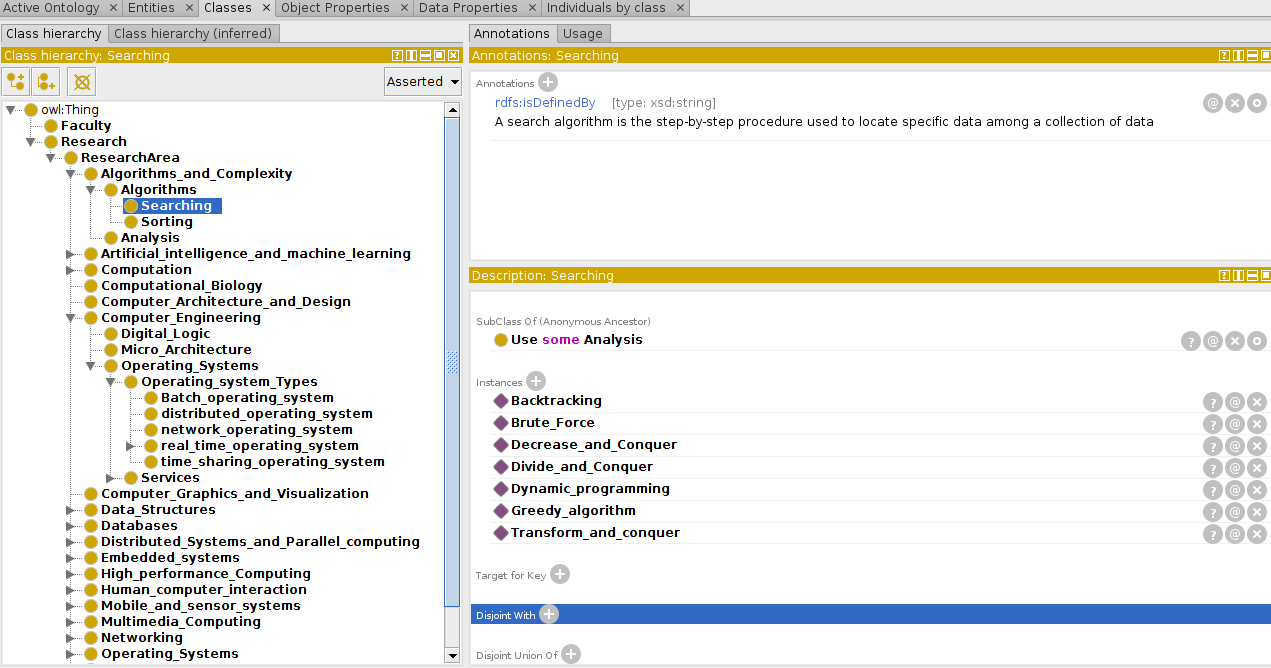

An ontology is set of concepts, their definitions, descriptions, properties, and relations. The relations here are relations among concepts and relations among relations.Ontology Development Process

Before starting to create an ontology, we first choose the domain of consideration. We list out all the concepts related to that domain along with the relations. We have a data structure which is already defined to represent the ontology. Ontology is created as .owl files. An OWL file consists of concepts as classes and for classes, there are subclasses, properties, instances, data types and much more. All this information will be in XML form. For simplicity, there are tools available to create ontologies like Protege.

Storing the Unstructured Text Data in RDF Form

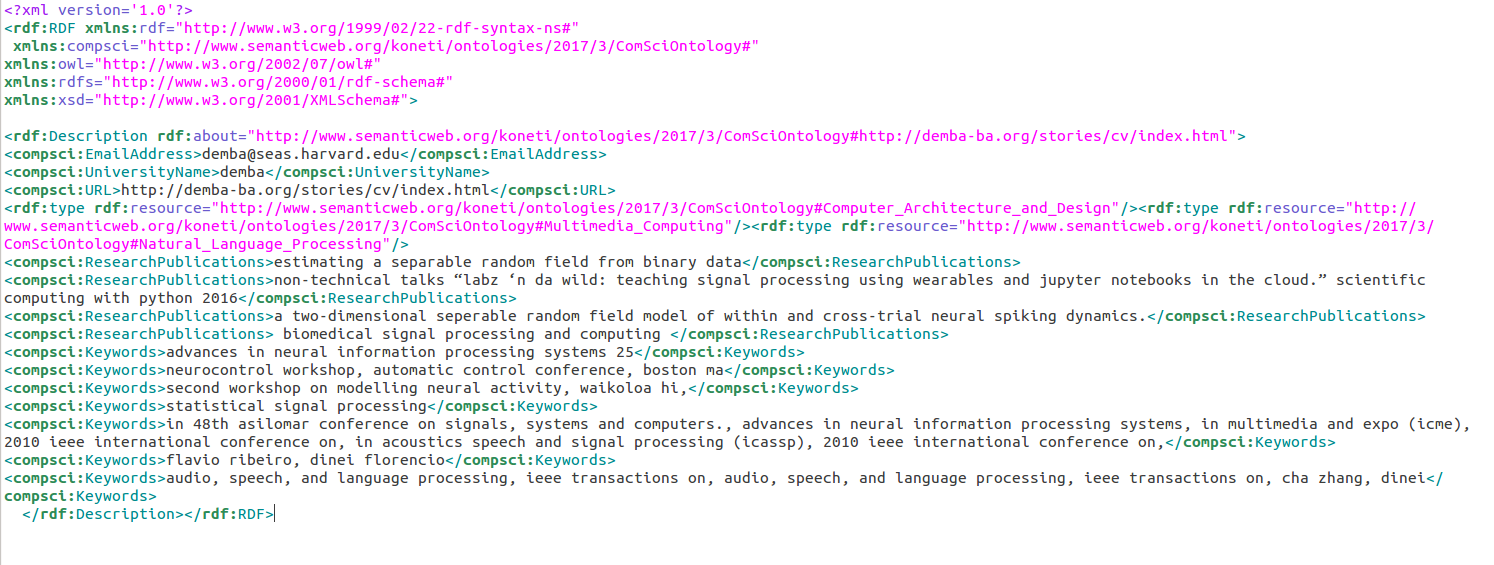

Ontology is created based on the concepts and we are ready to use this to find out the appropriate document for the query in a search engine. The text documents which are available in unstructured form need a structure and we call it as semantic structure. Thanks to RDF (Resource Description Framework). RDF is a structure where we store the information given in text into triples form. These triples are similar to the triples that we have discussed earlier i.e. s-p-o form. Machine Learning and Text Analysis process is used to extract data required and store in the form of triples. This way the knowledge base is ready. Both the ontology as well as structured form of text data as RDF’s

Real-Time Semantic Search Engine Architecture

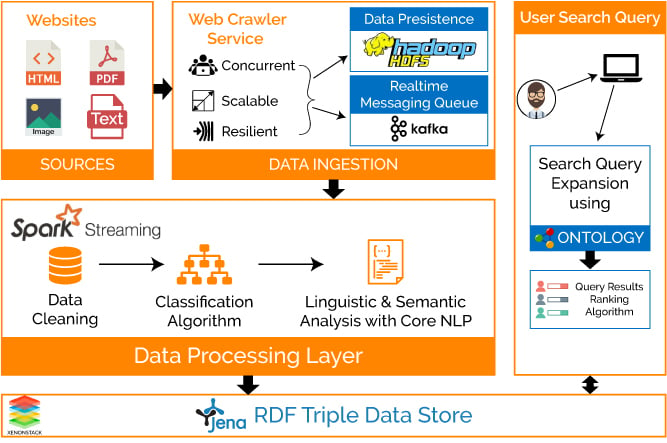

Implementation of the architecture on "Computer Science" Domain - The complete architecture for the search engine would be "Platform as a Service (PAAS)". Let us consider an example of "Computer Science" as a domain. In this, the user can search for faculty CVs from the desired universities and research areas based on the query. So the steps to build a Semantic Search Engine are -- Crawl the documents (DOC, PDF, XML, HTML etc) from various universities and classify faculty profiles

- Convert the unstructured text present in various formats to structured RDF form as described in earlier sections.

- Build Ontology for Computer Science Domain

- Store the data in Apache Jena triple store (Both Ontology and RDF's)

- Use SPARQL query language on the data

You May also Love to Read Data Preprocessing and Data Wrangling in Machine Learning and Deep Learning

Data Ingestion using Web Crawler

Starting with the data extraction process, a web crawler was built which scrapes the content from any university or educational websites. This Web Crawler is built using Akka framework which is highly scalable, concurrent and distributed. This also supports almost all type of files like HTML, DOC, PDF, Text Files and even images. Web Crawler supports HTTP and HTTPS protocols and proxy server support was also there.HDFS Data Persistence Model

The extracted data from these files is saved to persistence HDFS storage system. Replication can be used to avoid node failure. Before moving the data to HDFS, preprocessing should be done like replacing all-new line tags (p, br etc) with newline characters and then data is moved using Web HDFS API's. Web crawler also generates Kafka Event whenever a URL is scrapped, so that after the successful event the data extraction process is done immediately. JSON serialization can be used while writing messages to Kafka Topic.Real-Time Data Processing using Spark Streaming

Data Processing layer uses Spark Streaming to receive real-time events from Kafka. An example of how to do the process is, create 16 partitions in Kafka and allow Spark Steaming job to deploy on 4 node cluster. Then launch 2 executors per worker to read Kafka events from 16 partitions concurrently. When an "out of memory" issue is faced, we'll have a delay in Spark job, it can be observed that Garbage collection pauses the Spark job. In which case, the G1GC algorithm can be used and tune runtime parameters of the garbage collector. After this step, we process the data for cleaning and Preprocessing. Web Induction Techniques are used to clean HTML pages and different methods are used for different file types.Machine Learning Algorithms for Data Extraction

The series of steps that follow is to extract information from the data using machine learning techniques.- A corpus was created as well as a set of rules based on this corpus to classify if a crawled text document is a faculty profile or not.

- The classification algorithm is used to classify the extracted faculty profile to find out if it belongs to computer science or not. Supervised learning techniques are used for this purpose.

- To run the supervised model we require training data. Training data here is manually collected faculty profiles(CV's) from various Universities across multiple departments. The training data finally contains faculty CV along with the tag which represents the department he/she belongs to.

- This training data is used to run the supervised model. Using this model on crawled data once can classify new document if it is Computer Science faculty document among all the extracted ones.

Ontology Development Process

Ontology file is also known as OWL file in which we define various OWL Classes which represent various departments, research areas etc and relations between them. Besides this, data properties for Named Individuals are also given for every data entered into the ontology. In simple words, Ontology is like a schema file and Named Individuals are instances we create using this schema. This Ontology is the Heart of Semantic Search since the data available in the ontology will not only have data but also the relations among the data. Since the SPARQL querying is done on RDF’s using this ontology file, these relations defined come in handy to fetch most appropriate results.Linguistic Analysis of Text

Linguistic Analysis is used for information extraction in which rules are defined to annotate our text data. Various Annotations like Person, Organization, Location, Company etc. are used to extract important features from text data. Other annotations like Publications, Author Names, and other bibliographic information can be detected using Machine Learning and NLP techniques. After the feature extraction from text data, we need to identify the relation between sentences. Semantic Analysis allows us to build relations between text data and further they are converted into triples. Triples mainly contain three components- Subject - The subject stands for the URL, document, the person we are talking about.

- Predicate - Relations are stored in Predicates.

- Object - Object holds that relation value for the particular subject.